MikroKopter - FollowMe on the Wakeboard from Holger Buss on Vimeo.

GPS-based follow-me is nothing new. It was demonstrated in 2008 at long range, with the normal-lens cameras of the time.

Now it's being done at slightly closer range, with modern wide-angle, stabilized cameras. It has always depended on very accurate attitude estimation, combined with the GPS coordinates of the aircraft & the athlete, to work out where to point the camera.
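To make the GPS approach concrete, here is a minimal sketch of the pointing calculation. It assumes both the aircraft & the athlete report (latitude, longitude, altitude) and that a flat-Earth approximation is good enough over a few hundred metres. The function name `pointing_angles` is hypothetical, not taken from any autopilot. It returns the compass bearing & depression angle from the aircraft to the athlete; the gimbal still needs the aircraft's own attitude estimate to turn those into pan & tilt.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in metres


def pointing_angles(aircraft, target):
    """Return (bearing_deg, depression_deg, slant_range_m) from aircraft to target.

    aircraft and target are (lat_deg, lon_deg, alt_m) tuples. Uses a local
    flat-Earth (equirectangular) approximation, only valid over short distances.
    """
    lat1, lon1, alt1 = aircraft
    lat2, lon2, alt2 = target
    mean_lat = math.radians((lat1 + lat2) / 2.0)
    # North and east offsets of the target from the aircraft, in metres
    north = math.radians(lat2 - lat1) * EARTH_RADIUS_M
    east = math.radians(lon2 - lon1) * EARTH_RADIUS_M * math.cos(mean_lat)
    down = alt1 - alt2  # positive when the target is below the aircraft
    horizontal = math.hypot(north, east)
    bearing = math.degrees(math.atan2(east, north)) % 360.0  # 0 = north, 90 = east
    depression = math.degrees(math.atan2(down, horizontal))  # angle below the horizon
    slant = math.hypot(horizontal, down)
    return bearing, depression, slant


# Example: aircraft 50 m up, athlete roughly 11 m due east at ground level
print(pointing_angles((0.0, 0.0, 50.0), (0.0, 0.0001, 0.0)))
```

Any error in either GPS fix goes straight into the bearing, which is why the position & attitude estimates have to be so accurate.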

The athlete still looks like a tiny dot. The newest videos are heavily edited to hide the moments when the athlete goes out of frame. It feels like an attempt to get a lot more mileage out of the same old capability.

Getting a closeup is really hard. The easiest way is a very long lens camera with many levels of stabilization, plus chroma key detection of the athlete. The last of the Sony Handycams had excellent stabilization. So far, the follow-me makers are all marketing GoPro cameras, so this method won't be demonstrated any time soon.

The HDR-PJ540 is the current optically stabilized model. It's quite large & expensive. Stabilization at that level is now a novelty feature, since no-one cares about it. It's a huge investment just for a follow-me mode.

Another way is to fly up close. The advantages are much easier initialization, a much smaller vehicle, an easier time getting the athlete in frame at the start, & the ability to fly in confined spaces.

At close range, GPS is no good. The athlete has to be the navigation reference. Time-of-flight cameras & structured light cameras don't work in daylight. The movie camera alone can resolve position, within strict limits. The athlete would have to wear multiple chroma key markers, with 2 markers in frame at all times. The markers either have to be a constant distance apart, or there needs to be a way to measure how far apart they are, perhaps with one of the electromagnetic sensors that have been demonstrated. Single-camera autopilots have been done before.
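The ranging step can be sketched with a pinhole camera model: if 2 markers are a known distance apart, range is the focal length (in pixels) times the marker spacing, divided by their separation in the image. This assumes the marker centroids have already been found & the line between them is roughly square to the camera. The helper names & example numbers are hypothetical.

```python
import math


def focal_length_px(image_width_px, hfov_deg):
    """Pinhole focal length in pixels from image width and horizontal field of view."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def range_from_marker_pair(p1, p2, marker_spacing_m, focal_px):
    """Estimate camera-to-athlete range from 2 markers a known distance apart.

    p1, p2 are (x, y) pixel centroids of the markers. Assumes the line between
    the markers is roughly perpendicular to the optical axis; any tilt shrinks
    the apparent separation and makes the range come out too long.
    """
    separation_px = math.hypot(p2[0] - p1[0], p2[1] - p1[1])
    if separation_px == 0:
        raise ValueError("markers overlap; range is undefined")
    return focal_px * marker_spacing_m / separation_px


f_px = focal_length_px(640, 90.0)  # hypothetical 640x480 camera, 90 deg HFOV
print(range_from_marker_pair((300, 240), (340, 240), 0.5, f_px))  # markers 0.5 m apart
```

With a 640 pixel wide image & a 90° field of view, markers 0.5m apart that appear 40 pixels apart put the athlete about 4m away. Range is inversely proportional to the pixel separation, so a 1 pixel centroid error matters much more at long range, where the markers are only a few pixels apart.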

A marker system would still need GPS at long range. Any method using chroma keying without a GPS aid is going to be prone to false positives & flying away.
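A quick way to see why GPS only works at long range: a fixed sideways position error turns into an angular pointing error of atan(error / range). The sketch below assumes a hypothetical 3m combined error between the aircraft's & athlete's fixes; the real figure depends on the receivers.

```python
import math


def pointing_error_deg(position_error_m, range_m):
    """Angular error when the target's position is off by position_error_m sideways."""
    return math.degrees(math.atan2(position_error_m, range_m))


GPS_ERROR_M = 3.0  # hypothetical combined error of the aircraft and athlete fixes

for range_m in (5.0, 20.0, 100.0):
    print(f"{range_m:5.0f} m: {pointing_error_deg(GPS_ERROR_M, range_m):4.1f} deg")
```

At 5m, 20m, & 100m that works out to roughly 31°, 8.5°, & 1.7°. Close in, the error is a large fraction of even a wide angle lens's field of view; far out, it's small, but then the athlete is a tiny dot.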

The bottleneck in making a viable follow cam is getting realtime video from a super high resolution camera into a really small computer, where it's scanned for small, finely detailed markers. Capturing HDMI has become the requirement for getting any kind of realtime video out of a camera. It could probably be fed into the Raspberry Pi's CSI bus, which carries data over several differential pairs in parallel, but the Pi isn't fast enough to do anything with it.
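Some rough arithmetic on why the small computer is the bottleneck. The sketch assumes uncompressed 4:2:2 video at 16 bits per pixel & 30 frames per second, & uses a hypothetical 900MHz quad-core ARM as the example CPU budget.

```python
def raw_video_rate(width, height, fps, bits_per_pixel=16):
    """Pixels per second and megabits per second for uncompressed video."""
    pixels_per_s = width * height * fps
    return pixels_per_s, pixels_per_s * bits_per_pixel / 1e6


def cycles_per_pixel(clock_hz, cores, pixels_per_s):
    """CPU clock cycles available per pixel if every core does nothing else."""
    return clock_hz * cores / pixels_per_s


px_1080, mbit_1080 = raw_video_rate(1920, 1080, 30)
px_4k, mbit_4k = raw_video_rate(4096, 2048, 30)
print(f"1920x1080@30: {px_1080:,} px/s, {mbit_1080:.0f} Mbit/s")
print(f"4096x2048@30: {px_4k:,} px/s, {mbit_4k:.0f} Mbit/s")
print(f"cycles per pixel at 1920x1080@30: {cycles_per_pixel(900e6, 4, px_1080):.0f}")
```

That comes to about 1Gbit/s at 1920x1080, about 4Gbit/s at 4096x2048, & under 60 CPU cycles per pixel across all 4 cores before memory bandwidth is even considered.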

The only way to do the job is an FPGA implementation of the marker tracker, handling the SDRAM interface, HDMI ingestion, & marker detection entirely in hardware. For all the hype about software, OpenCV, & running Pixhawk on Linux, hardware is still the only way to get anywhere near the promises the UAV industry is making.

It's surprising no-one is focusing on hardware implementations of higher-end object detection. There is a slow increase in hobbyist attention to FPGAs, but only for controlling LEDs, software radio, or very limited chroma keying from a low-end VGA cam.

For all the different software platforms they're trying to get Pixhawk to run on, they're not going to get the horsepower that real image processing requires.

Searching far & wide for a marker system which might enable a follow cam revealed this:

Previous experience showed the classic international pink was the best chroma keying material & a doughnut was the easiest shape for current cameras to isolate. When chroma keying is combined with a unique shape, it gives 2 layers of redundancy.
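A minimal sketch of the 2 layers: a chroma key pass that keeps only strongly saturated pink pixels, then a shape check that accepts the blob only if its centroid lands in a hole, as it would for a doughnut. The HSV thresholds are hypothetical & would have to be tuned against the real material, & the code treats all pink pixels in the image as one blob rather than doing proper connected-component labelling.

```python
import colorsys

# Hypothetical thresholds for a saturated pink; tune against the real material.
PINK_HUE_MIN, PINK_HUE_MAX = 0.83, 0.97  # hue as a fraction of a turn (~300-350 deg)
MIN_SATURATION = 0.4
MIN_VALUE = 0.3


def is_pink(rgb):
    """Chroma key test for one (r, g, b) pixel with 0-255 channels."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb))
    return PINK_HUE_MIN <= h <= PINK_HUE_MAX and s >= MIN_SATURATION and v >= MIN_VALUE


def find_doughnut(image):
    """Return the (x, y) centroid of a pink ring, or None.

    image is a list of rows of (r, g, b) tuples. All pink pixels are treated
    as a single blob; a real tracker would label connected components first.
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if is_pink(pixel):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # layer 1 failed: no pink at all
    cx, cy = sum(xs) / len(xs), sum(ys) / len(ys)
    # Layer 2: a ring's centroid falls in its hole, a solid blob's does not.
    if is_pink(image[round(cy)][round(cx)]):
        return None
    return cx, cy
```

A solid pink blob, like a pink shirt, fails the hole test even though it passes the color test, which is the redundancy the shape buys.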

A 4K camera detecting a full-body, international pink, digital camouflage suit would be ideal.

Next would be digital camouflage markers, but given the reality of only having enough money for 640x480, we have only

Where to place the markers so a single camera can detect range is the next problem.

It's become quite clear that retroreflective markers are the new fashion statement. There are ongoing, unreliable attempts to automatically isolate a human body from an image, but the prevailing theme is making the world easy for robots to navigate, rather than making robots better at navigating the world. The markers are going to become normal attire before the autonomous camera works.

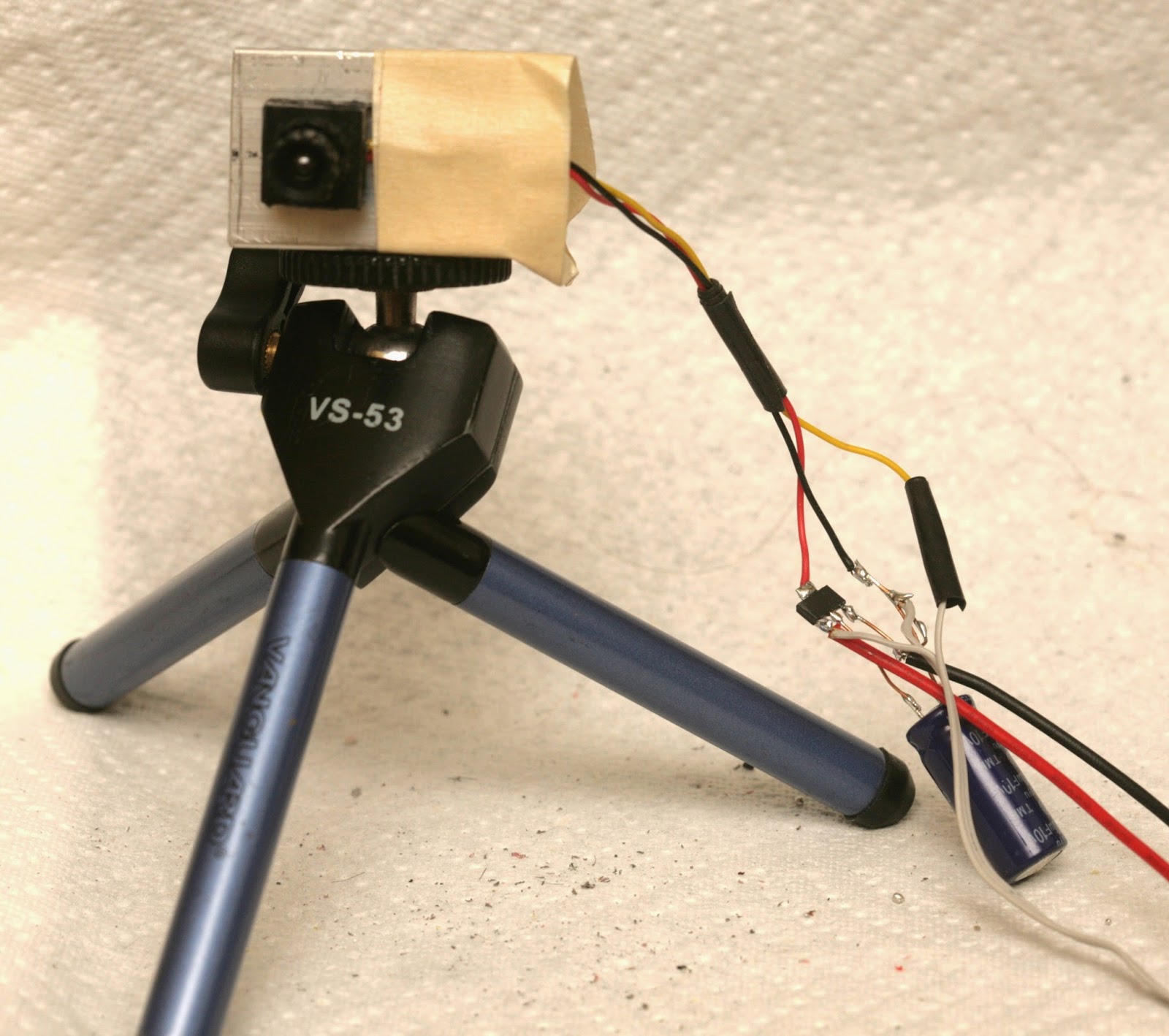

It was finally time to use some of the electronic bits from years ago.

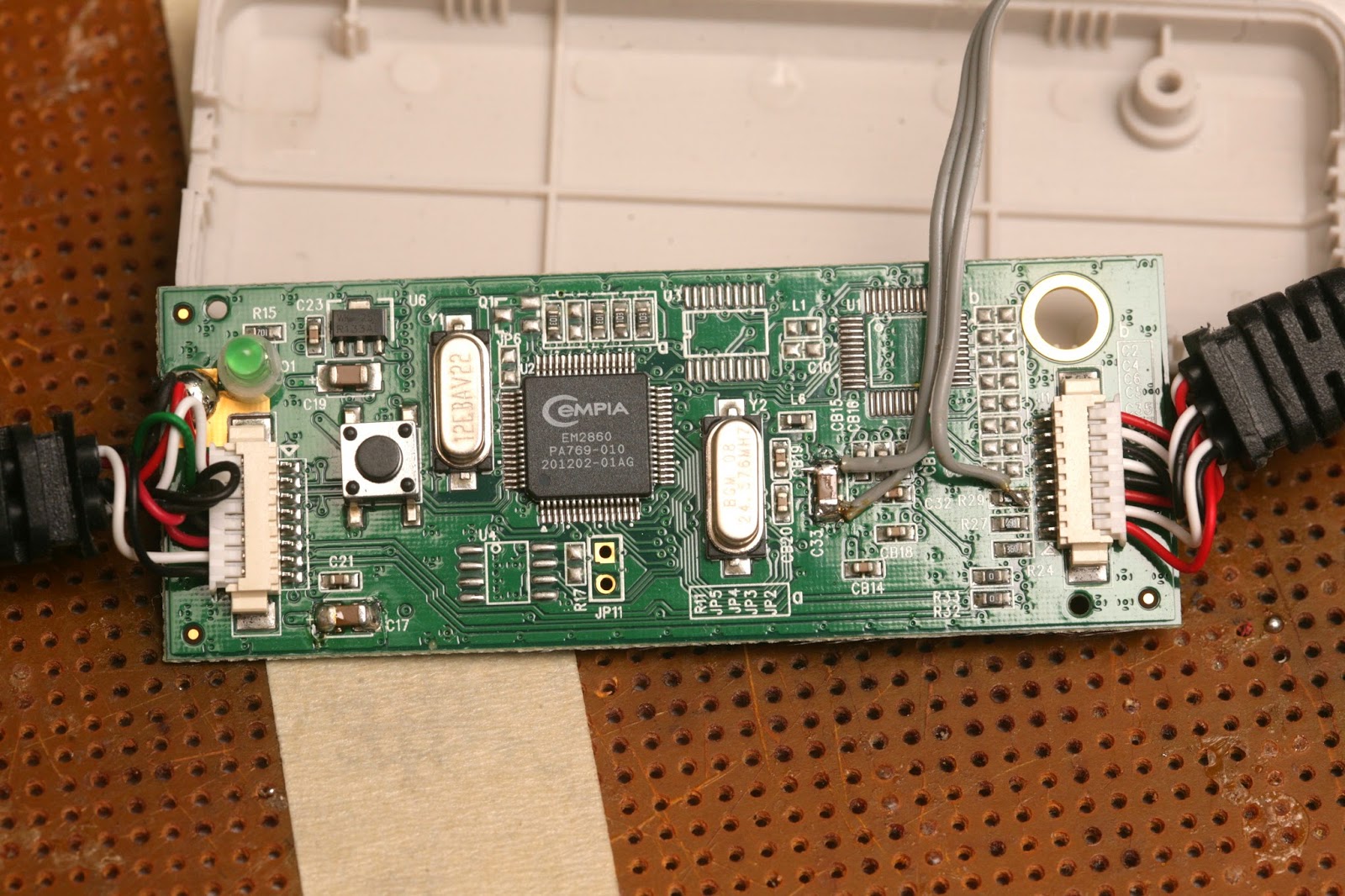

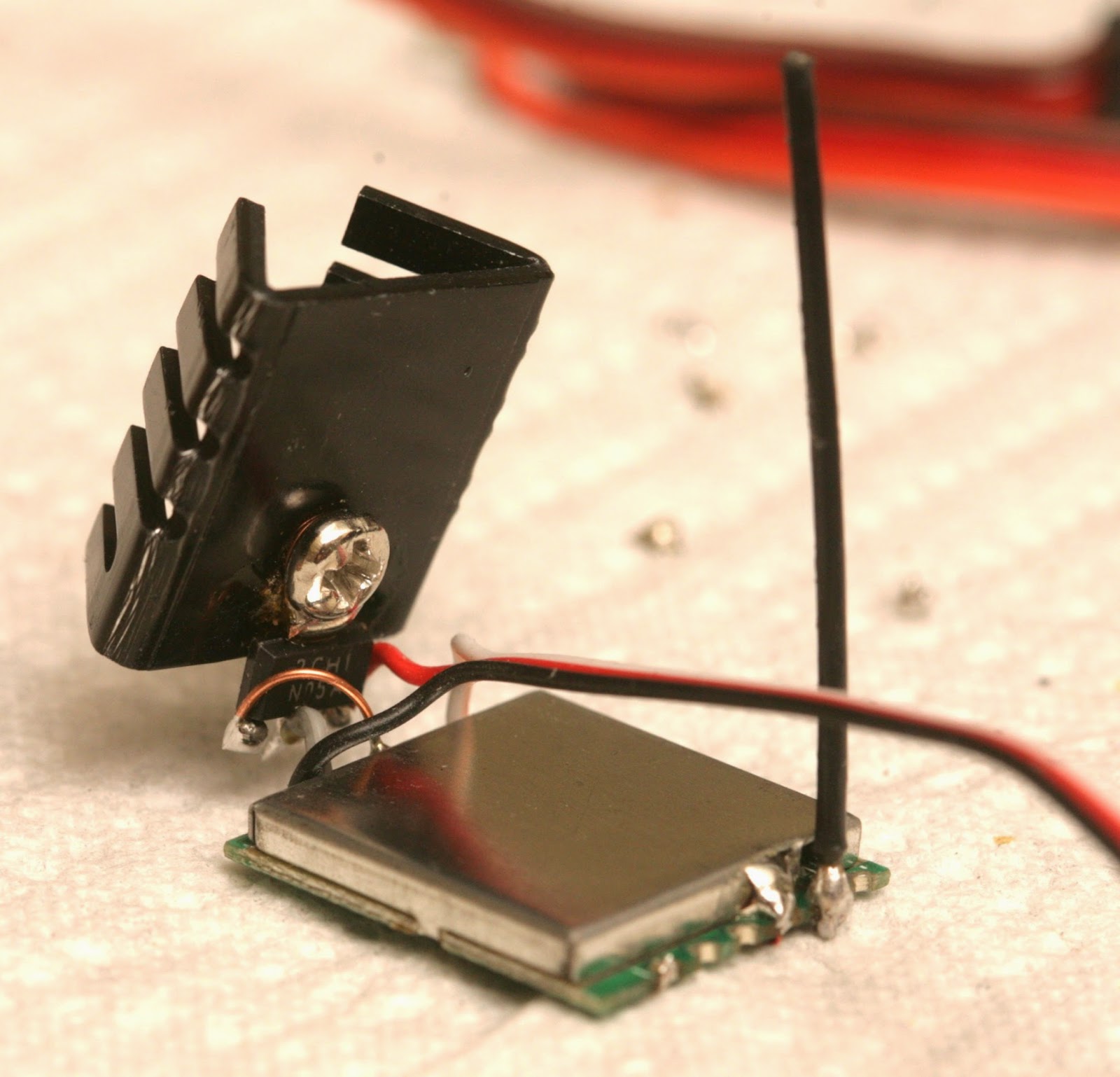

There's a 5.8GHz receiver labeled rx5808. It needs 3.5-5.5V. A channel pin is grounded to select the channel.

A USB capture board is wired to power the receiver with 3.3V.

There's a 5.8GHz transmitter labeled tx5823. The same transmitter was sold by many hobby shops with different voltage limits. They all accept 3.3V, though some accept 5V. A channel pin is grounded to select the channel. It needs 400mA & heats to 55°C.

There are 2 cameras which need 5V. It took a lot of voltage regulators to finally get the 5.8GHz system running.

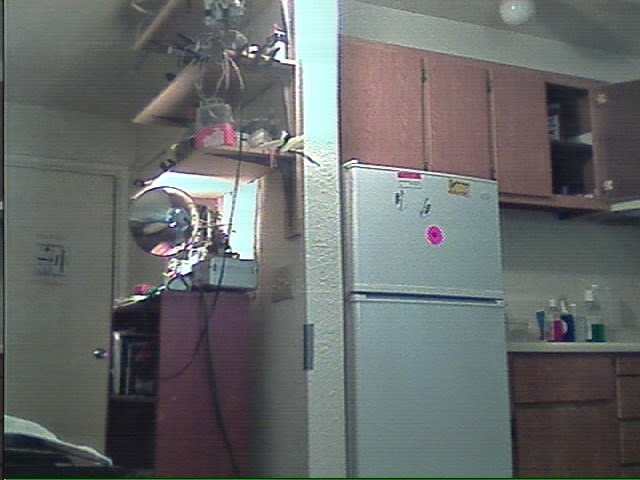

Direct composite video.

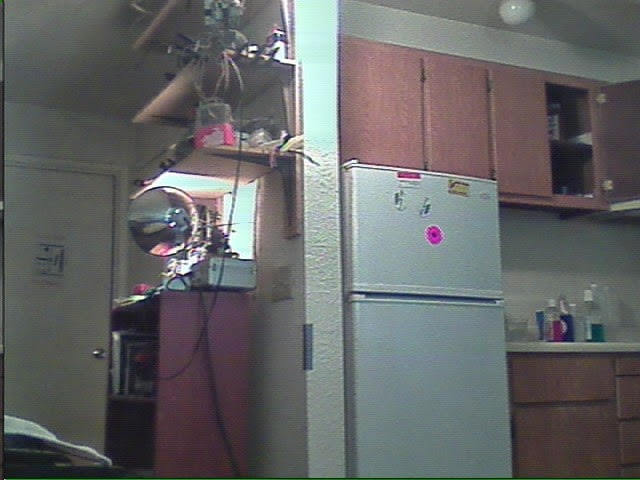

5.8GHz video. There's actually no visible difference in quality.

It's finally time to try some markers with low-definition 5.8GHz video. Because the video is interlaced, the resolution has to drop to a single 720x240 field.
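The reason interlacing halves the vertical resolution: each 720x480 NTSC frame is 2 fields captured about 1/60s apart, interleaved row by row. With fast motion the fields don't line up, so a tracker has to work on one 720x240 field at a time. A minimal sketch, assuming the capture board delivers whole frames as lists of rows; which field is earlier in time depends on the hardware, & the function names are hypothetical.

```python
def split_fields(frame):
    """Split an interlaced frame (a list of rows) into its 2 fields.

    Even rows (0, 2, 4, ...) form one field and odd rows the other. Each field
    was captured at a different instant, so for fast motion each one has to be
    processed on its own at half the vertical resolution.
    """
    return frame[0::2], frame[1::2]


def line_double(field):
    """Repeat every row to restore full frame height (simple 'bob' deinterlacing).

    The repeated rows are the same list objects, which is fine for read-only use.
    """
    return [row for row in field for _ in range(2)]
```

Running `split_fields` on a 720x480 frame returns 2 fields of 240 rows each, which is where the 720x240 figure comes from. Line doubling restores the frame height for display but adds no detail.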

Barely any detail is visible.

Motion blur wipes out the detail, leaving only color. It was a disappointing result, but it shows how the age of HD video has erased our memories of how bad NTSC was. 1920x1080 is the minimum, with 4096x2048 the ideal resolution.
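A rough way to see why 720 pixels across isn't enough: with a pinhole model, an object of size S at range R spans about width × S / (2R·tan(FOV/2)) pixels. The 10cm marker, 10m range, & 90° field of view below are hypothetical numbers for illustration.

```python
import math


def pixels_across(object_size_m, range_m, image_width_px, hfov_deg):
    """Approximate width in pixels of an object seen face-on by a pinhole camera."""
    frame_width_m = 2.0 * range_m * math.tan(math.radians(hfov_deg) / 2.0)
    return image_width_px * object_size_m / frame_width_m


# Hypothetical case: 10 cm marker, 10 m away, 90 deg horizontal field of view
for width_px in (720, 1920, 4096):
    print(width_px, round(pixels_across(0.10, 10.0, width_px, 90.0), 1))
```

That gives about 3.6 pixels at 720 wide, 9.6 at 1920, & 20.5 at 4096, before motion blur smears any of them.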

The ground station needs to record the video as well as scan it for position. The only spare hardware is a laptop. Since the follow cam's main mission would be capturing running, the need to carry a laptop has been the showstopper.

For portable computing, the long-reigning Odroid is dead. The one to buy now is the Minnow Max. Unlike the boat, the Minnow has a dual-core 1.3GHz x86_64 processor. It should be far ahead of the quad-core ARMs. I've personally gotten nothing but grief from quad-core ARMs.

Comments

Wouldn't a plenoptic camera be better suited for this?

It might be GPS + baro??? GPS altitude isn't very accurate.

You are right, it looks very fast and smooth, however, it stumbles when the target changes direction. GPS has a slow update rate, so I am guessing they extrapolate the target position between updates. This would allow for a smoother shot and explain the slight overshoot when the target changes direction. Or maybe the overshoot is caused by GPS delay??? Not sure....
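The extrapolation guessed at in this comment can be sketched as constant-velocity prediction from the last 2 GPS fixes. This is an assumption about how such a system might work, not how any product is known to work, & the 1Hz fix rate in the example is hypothetical. It does reproduce the overshoot: when the target reverses right after a fix, the prediction keeps running the old way until the next fix arrives.

```python
def extrapolate(prev_fix, last_fix, t):
    """Constant-velocity prediction of the target's (x, y) position at time t.

    prev_fix and last_fix are (time_s, x_m, y_m) from the 2 most recent GPS
    fixes, already converted to a local metric frame.
    """
    t0, x0, y0 = prev_fix
    t1, x1, y1 = last_fix
    dt = t1 - t0
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return x1 + vx * (t - t1), y1 + vy * (t - t1)


# Hypothetical 1 Hz fixes: target runs east at 5 m/s, then reverses at t = 1 s.
predicted = extrapolate((0.0, 0.0, 0.0), (1.0, 5.0, 0.0), 1.5)
actual = (5.0 - 5.0 * 0.5, 0.0)  # where it really is after reversing
print(predicted, actual)
```

With fixes at x=0m & x=5m one second apart, the prediction half a second later is 7.5m; if the target reversed at the second fix, it's actually back at 2.5m, a 5m overshoot.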

It might interfere with a transmitter. Not sure though. 2.4GHz has a lot of channel hopping.

A larger problem is mounting the base station on a multirotor. The base station currently sits at a fixed altitude, a fixed heading, and fixed velocity/position. Also it wouldn't be able to account for the changing orientation of the multirotor.....massive gimbal????

@Jack, what are your thoughts on using an IMU + GPS based tracker and extrapolating position between GPS updates? I feel that could provide a fairly accurate solution.
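The IMU + GPS idea can be sketched as a fixed-gain alpha-beta filter: dead-reckon with acceleration between fixes, then nudge position & velocity toward each GPS fix. This is a simplified stand-in for the Kalman filter a real autopilot would use, in 1 axis only. It assumes the acceleration has already been rotated into the world frame with gravity removed, & the class name & gains are hypothetical.

```python
class AlphaBetaTracker:
    """1D position/velocity estimate: IMU dead reckoning corrected by GPS fixes.

    A fixed-gain alpha-beta filter, a simpler relative of the Kalman filter.
    Gains are hypothetical and would need tuning for real sensors.
    """

    def __init__(self, pos=0.0, vel=0.0, alpha=0.3, beta=0.1):
        self.pos = pos
        self.vel = vel
        self.alpha = alpha  # how hard a GPS fix pulls the position
        self.beta = beta    # how hard a GPS fix adjusts the velocity

    def predict(self, accel, dt):
        """Integrate one IMU sample (world-frame acceleration, gravity removed)."""
        self.pos += self.vel * dt + 0.5 * accel * dt * dt
        self.vel += accel * dt

    def correct(self, gps_pos, dt_since_fix):
        """Blend in a GPS fix; dt_since_fix is the time since the previous fix."""
        residual = gps_pos - self.pos
        self.pos += self.alpha * residual
        self.vel += self.beta * residual / dt_since_fix
```

Between GPS fixes, `predict` runs at the IMU rate so the position estimate moves smoothly instead of jumping once per fix; each `correct` call then pulls accumulated IMU drift back toward GPS.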

@Daniel - You are right. The Solo is so fast I thought they were doing something special but as you say it's based on GPS. I think it might even use 2.4 for communication which would be bad for us eh?

How does Solo Shot work? I was under the assumption it used GPS or a GPS + inertial solution.

I think using GPS for follow me isn't a very good idea. Optical techniques are just crazy for complexity. Here's a fairly simple and cheap solution: I wonder how a Solo Shot would handle being mounted on a multirotor? Hmm, another project in the making?

You don't want a high quality camera if you want better realtime tracking; you want a low quality camera.

Bugs have been navigating the world with something like 180-pixel cameras for longer than mankind has existed. A higher quality camera may look nice to us, but it all just becomes information overload, especially if you're using a radio link.

NICE IDEA

Hi Jack,

In the last few months I've been doing a lot of tests with our follow system. I agree with you that, given the limits of standard GPS, the result can be good if you are far from the target.

I think you need a mix of solutions to get good follow quality...

This is the result of our current follow technology, which uses a mix of 4 different components:

Drone with a good flight controller (in the video we use VR uBrain), custom beacon, custom brushless gimbal, post-processing stabilization.

I think we would be close to the result if we added one more simple component.

Best

Roberto

Piksi remains vaporware.

Thorough analysis, thanks. Is differential GPS not a viable option (as the price drops)? I feel it could be much more versatile than visual tracking. I don't think people will want to ruin their shot with pink tracking dots.
