From IEEE Spectrum:

Just a few weeks ago, we posted about some incredible research from Vijay Kumar’s lab at the University of Pennsylvania getting quadrotors to zip through narrow gaps using only onboard localization. This is a big deal, because it means that drones are getting closer to being able to aggressively avoid obstacles without depending on external localization systems. The one little asterisk to this research was that the quadrotors were provided the location and orientation of the gap in advance, rather than having to figure it out for themselves.

Yesterday, Davide Falanga, Elias Mueggler, Matthias Faessler, and Professor Davide Scaramuzza, who leads the Robotics and Perception Group at the University of Zurich, shared some research that they’ve just submitted to ICRA 2017. It’s the same kind of aggressive quadrotor maneuvering, except absolutely everything is done on board, including obstacle perception. It doesn’t get any more autonomous than this.

Let’s be clear about this: to autonomously fly through these gaps (which are only 1.5 times the size of the robot, with a mere 10 centimeters of clearance on each side), the quadrotor is using a 752 x 480-pixel monochrome camera with a 180-degree field-of-view lens, a PX4FMU autopilot with an IMU, and a smartphone-class single-board Odroid XU4 computer running ROS. That’s it. After passing through the gap, the quadrotor also uses a downward-facing distance sensor and camera to stabilize itself. All of the sensing and computation is taken care of on board the quadrotor, meaning that you could do this exact same thing in your house.

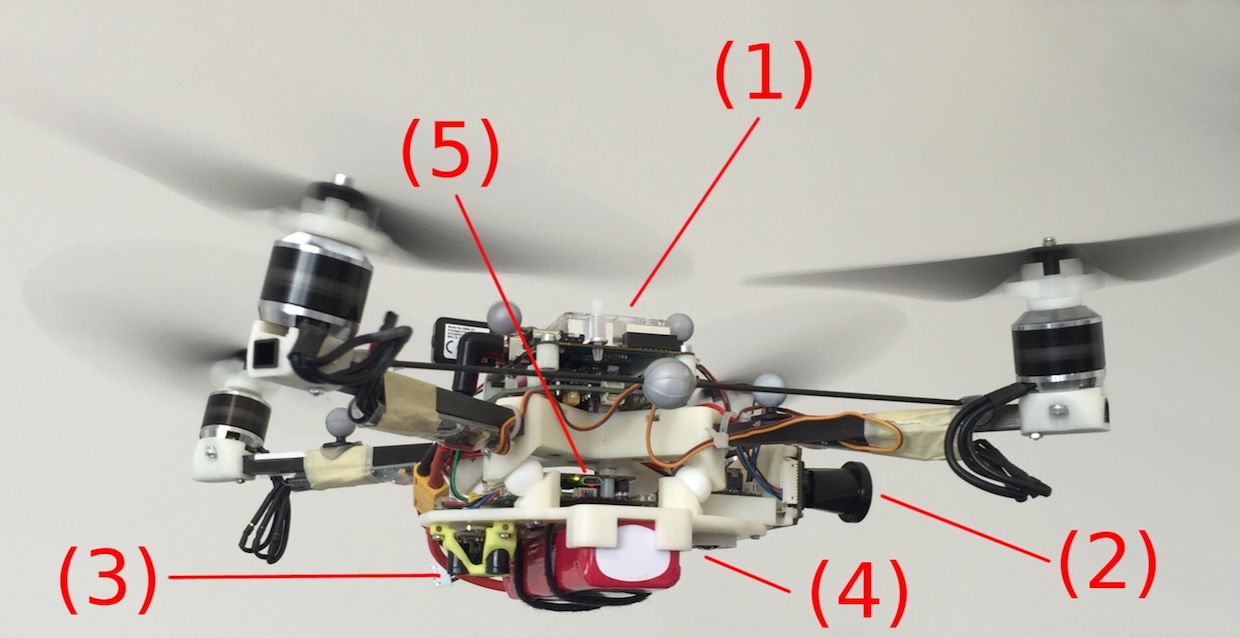

Photo: Robotics and Perception Group at the University of Zurich. The quadrotor platform used in the experiments. (1) Onboard computer. (2) Forward-facing fisheye camera. (3) TeraRanger One distance sensor and (4) downward-facing camera, both used solely during the recovery phase. (5) PX4 autopilot. The motors are tilted by 15º to provide three times more yaw-control action, while only losing 3 percent of the collective thrust.

Most of this hardware is relatively standard stuff, although the overall platform is custom. The notable tweak is that the rotors have been tilted by 15 degrees, which triples the amount of yaw control without significantly affecting the available thrust. It’s critical to have powerful yaw control since the quadrotor reaches angular speeds of up to 400 degrees per second while approaching a gap.
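To get a feel for why the tilt costs so little thrust, here is a back-of-the-envelope sketch. The thrust figure is plain trigonometry: a rotor tilted by an angle θ keeps cos θ of its thrust along the vertical body axis. The yaw part is only illustrative. The arm length `arm_m` and the drag-torque-to-thrust ratio `kappa_m` are hypothetical values that the article does not give, and the model assumes the tilt points the horizontal thrust component tangentially around the yaw axis, in the same sense as the rotor's drag torque.

```python
import math


def thrust_loss(tilt_deg: float) -> float:
    """Fraction of collective thrust lost when every rotor is tilted by tilt_deg."""
    return 1.0 - math.cos(math.radians(tilt_deg))


def yaw_gain(tilt_deg: float, arm_m: float, kappa_m: float) -> float:
    """Ratio of yaw torque per unit thrust with tilt versus without tilt.

    Simplified model (an assumption, not the authors' analysis):
      untilted: yaw torque comes only from rotor drag, kappa_m * thrust
      tilted:   drag torque scaled by cos(tilt), plus the horizontal thrust
                component sin(tilt) * thrust acting on the lever arm arm_m
    """
    tilt = math.radians(tilt_deg)
    tilted = kappa_m * math.cos(tilt) + arm_m * math.sin(tilt)
    return tilted / kappa_m


if __name__ == "__main__":
    # 1 - cos(15 deg) is roughly 0.034, in line with the "3 percent" quoted above.
    print(f"collective thrust lost at 15 degrees: {thrust_loss(15.0):.1%}")

    # Hypothetical geometry, chosen only to show how the gain would be computed.
    print(f"illustrative yaw gain: {yaw_gain(15.0, arm_m=0.10, kappa_m=0.016):.2f}x")
```

The actual yaw gain depends on the real arm length and rotor characteristics, so the second number should not be read as a check on the "triples" figure.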

The actual process of flying through the window goes like this: first, the quadrotor locates the gap (of a known size*) with its onboard camera. It then computes a trajectory to pass through the gap that keeps the quadrotor as far as possible from the edges, which is what you want to do to not run into stuff, while also trying to keep the gap itself in view of the quadrotor’s camera as much as possible. This trajectory is very focused on gap traversal, so the starting point for it involves the quadrotor moving at high speed and possibly oriented a little bit sideways, so the system also needs to come up with a second trajectory that takes the robot from a stable hover into the gap traversal trajectory. Put these two trajectories together, and you’ve got your path through the gap.
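One way to see why the final dash through the gap is so constrained: as Scaramuzza explains in the interview below, the traverse uses a constant collective thrust and zero angular velocity. With the attitude frozen, the net acceleration is constant, so the motion is fully determined by the state at the start of the traverse. The sketch below is not the authors' code. It simply propagates that constant-acceleration motion, and the function name, the z-up world frame, and all numbers are assumptions made for illustration.

```python
import numpy as np

GRAVITY = np.array([0.0, 0.0, -9.81])  # world frame, z up, m/s^2


def traverse_state(p0, v0, thrust_dir, thrust_acc, t):
    """Position and velocity after t seconds of constant thrust at fixed attitude.

    p0, v0      initial position (m) and velocity (m/s) in the world frame
    thrust_dir  direction of the body z-axis in the world frame (any length)
    thrust_acc  collective thrust divided by mass (m/s^2), held constant
    """
    p0 = np.asarray(p0, dtype=float)
    v0 = np.asarray(v0, dtype=float)
    direction = np.asarray(thrust_dir, dtype=float)
    direction = direction / np.linalg.norm(direction)

    # Zero angular velocity means the thrust direction never changes,
    # so the acceleration is the same at every instant of the traverse.
    acc = thrust_acc * direction + GRAVITY
    position = p0 + v0 * t + 0.5 * acc * t**2
    velocity = v0 + acc * t
    return position, velocity


if __name__ == "__main__":
    # Hypothetical start state: 3 m/s toward the gap, rolled 45 degrees.
    roll = np.radians(45.0)
    body_z = [0.0, -np.sin(roll), np.cos(roll)]
    pos, vel = traverse_state([0.0, 0.0, 1.5], [3.0, 0.0, 0.0], body_z, 9.81, 0.3)
    print("position after 0.3 s:", pos)
    print("velocity after 0.3 s:", vel)
```

Because nothing in this propagation depends on new sensor data, any error in the starting state carries straight through, which is why the approach trajectory has to deliver the quadrotor to the start of the traverse so precisely.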

Once the robot starts heading for the gap, it does its best to keep its camera pointed at the frame of the gap to continually update its state estimation relative to the space it’s trying to squeeze through and dynamically replan its trajectory as necessary. Assuming it makes it through successfully (which happens about 80 percent of the time), the final step is for the quadrotor to “catch” itself, recovering from whatever crazy speed and orientation it ends up in. It seems like this would be particularly tricky (and it is), but the researchers had already solved this problem in the context of stabilizing quadrotors that are thrown into the air to launch them.

Just in case you were thinking that this kind of thing is easy, the researchers asked two Swiss professional drone-racing pilots to give it a try:

Skills like this are very cool (for humans and robots alike), and it’s hard to overstate the importance of being able to run all sensing and computation on board the robot itself. This is a requirement for actually using these skills in a way that’s practical: it’s not just about getting drones to fly through windows, but more about teaching them to be able to reliably maneuver around all kinds of different obstacles, in any environment, from forests to urban areas to your living room.

For more details on this research, we spoke with Professor Scaramuzza:

IEEE Spectrum: What was the biggest challenge of this work, and how did you solve it?

Davide Scaramuzza: The biggest challenge was to couple perception and control, which are typically considered separately. Indeed, for the robot to localize with respect to the gap, a trajectory should be selected, which guarantees that the quadrotor always faces the gap and should be re-planned multiple times during its execution to cope with the varying uncertainty of the state estimate (the uncertainty increases quadratically with the distance from the gap) while respecting the vehicle dynamics. Furthermore, during the traverse, the quadrotor should maximize the distance from the edges of the gap to avoid collisions. It is not trivial to combine all these constraints into a single path-planning problem, since the set of "feasible trajectories" reduces significantly as the quadrotor approaches the gap. Also, the gap is no longer visible when the vehicle is close to the gap, which makes it necessary to execute the traverse without any visual feedback (i.e., blindly).

We solved these problems using a two-step approach. To traverse the gap, we compute a trajectory which can be executed blindly thanks to its short duration and to the fact that it requires precomputed constant inputs (namely, a collective thrust of given magnitude and zero angular velocities). To approach the gap, we use a trajectory generation method that allows us to evaluate a very large set of candidate trajectories; for each candidate trajectory, we compute the optimal vehicle orientation that allows the quadrotor to align its onboard camera as much as possible with the gap direction. Within a short amount of time, we then select the best trajectory as the one that guarantees that the gap is always visible and the center of the gap is as close as possible to the center of the image. This method has the additional benefit of being extremely fast, allowing us to replan the approach trajectory during its execution to exploit the more accurate pose estimate we get when we are closer to the gap.
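The selection step Scaramuzza describes can be pictured with a small sketch. This is a simplification under stated assumptions, not the group's implementation: each candidate is just a list of sampled positions with a camera viewing direction at each sample (in the real system the orientation is optimized per candidate), "visible" is approximated as the gap center lying within half the lens field of view, and the names `off_axis_angles` and `select_candidate` are made up for this example.

```python
import numpy as np


def off_axis_angles(positions, camera_axes, gap_center):
    """Angle (rad) between the camera's optical axis and the gap center, per sample."""
    positions = np.asarray(positions, dtype=float)
    axes = np.asarray(camera_axes, dtype=float)
    to_gap = np.asarray(gap_center, dtype=float) - positions
    to_gap = to_gap / np.linalg.norm(to_gap, axis=1, keepdims=True)
    axes = axes / np.linalg.norm(axes, axis=1, keepdims=True)
    cosines = np.clip(np.sum(to_gap * axes, axis=1), -1.0, 1.0)
    return np.arccos(cosines)


def select_candidate(candidates, gap_center, half_fov_rad):
    """Return the index of the best candidate, or None if none keeps the gap in view.

    candidates is a list of (positions, camera_axes) pairs, each of shape (N, 3).
    A candidate is rejected if the gap center ever leaves the field of view;
    among the rest, the one with the smallest mean off-axis angle wins, which
    keeps the gap center closest to the image center on average.
    """
    best_index, best_cost = None, np.inf
    for index, (positions, camera_axes) in enumerate(candidates):
        angles = off_axis_angles(positions, camera_axes, gap_center)
        if np.any(angles > half_fov_rad):
            continue  # gap not always visible along this candidate
        cost = angles.mean()
        if cost < best_cost:
            best_index, best_cost = index, cost
    return best_index


if __name__ == "__main__":
    gap = [2.0, 0.0, 0.0]
    head_on = ([[0, 0, 0], [1, 0, 0]], [[1, 0, 0], [1, 0, 0]])
    offset = ([[0, 1, 0], [1, 0.5, 0]], [[1, 0, 0], [1, 0, 0]])
    # A 180-degree lens gives a half field of view of 90 degrees.
    print(select_candidate([offset, head_on], gap, np.radians(90.0)))
```

Scoring each candidate is a handful of vector operations, which hints at why a large candidate set can be evaluated quickly enough to replan during the approach.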

Can the drone fly through several gaps in a row? What are the constraints on its performance?

Yes, if we modify the approach to allow the drone to continue flying after the traverse rather than locking into a hovering position. The main constraint is the agility of the vehicle, which can be translated into constraints on its weight/inertia. Using a small and very agile quadrotor, it gets easier to stabilize after passing through a gap before immediately approaching another one. On the other hand, if the vehicle is heavy, recovering after such an aggressive maneuver takes longer before the drone is ready to fly again towards the next gap. To summarize: as the vehicle mass shrinks, we can reduce the distance between the gaps.

Can this research be applied to other kinds of high speed maneuvering, like avoiding tree branches, or urban obstacles like lamp posts?

Of course! Avoiding obstacles such as tree branches or lamp posts is our next challenge. These scenarios are conceptually similar and our approach can be adapted such that, besides being perception aware (i.e., the pole or tree is always visible in the image), the distance from the pole is now minimized (to minimize the overall flight time) while ensuring that there is no collision.

How difficult will it be for commercial or recreational drones to take advantage of this research?

It will not be difficult. Hardware-wise, we only need an onboard camera, an IMU, and a computer. Nowadays, almost any commercially available drone has this hardware, and with the right algorithms one can take advantage of our research to let autonomous quadrotors safely avoid obstacles or, as in our case, literally pass through them.

What are you working on next?

We are planning to extend our work in different directions. One is passing through multiple gaps without stopping, instead traversing one gap after another with smooth, fast motion. Other possible extensions are passing through a swinging gap or passing through a static gap with a suspended payload. Finally, we also want to slalom among trees, poles, or other similar obstacles.

“Aggressive Quadrotor Flight through Narrow Gaps with Onboard Sensing and Computing,” by Davide Falanga, Elias Mueggler, Matthias Faessler, and Davide Scaramuzza from the Robotics and Perception Group, University of Zurich, Switzerland, has been submitted to ICRA 2017, which will take place next May in Singapore.

[ RPG @ UZH ]

* So, I guess technically it could get slightly more autonomous than this.

Comments

So I take it from lack of response, that none of this work is being merged into PX4 Master or will be offered to the world as Free Open Source.

I wonder how much better the vision software could work if it was operating on top of a flight control stack that simply flew better and didn't wobble?

Agreed Andreas. But you know, baby-steps. ;) But you're right, I'm not expecting we'll be seeing monocular tree recognition in operation in the next year. At least, not with these tiny little processors on board.

This is super cool but it looks as if the algorithm is made to find a big black rectangle in an environment without many big black rectangle type things. The question that still needs to be answered is, can we use a cam and onboard computation to correctly identify both a branch at 40 cm and a helicopter at 600 m against a random background? The kind of computation that takes a few grams of visual cortex in animals.

Well, that's what I'm wondering. If the wobble is simply due to the un-optimized control loops in PX4. They have not yet done throttle and voltage linearization, so this leads to the used-to-be-classic overgain-wobble when trying to stabilize at full throttle such as they are doing during the recovery phase. Or it could be because of the lack of throttle ceiling management, again which manifests as lack of stability at full throttle.

I say used-to-be-classic because this has been solved in Ardupilot for over a year, but PX4 is quite behind in this regard.

Or was the wobble due to the optical flow position tracking? I'm going to guess no. The wobble is so rapid that I'd bet the optical flow system isn't even working until that settles down. The camera can only deal with pixel flow rates up to a certain speed, and I'm quite sure that wobble would exceed it.

So, it's probably just due to the poor stabilization control of PX4. Which in turn actually hurts the recovery performance here as the OF camera can't lock on to a target until the wobbling stops.

Or it might be because it's using only optic flow during recovery for position tracking, hence the carpet with high-contrast patterns after the window. I have not read the paper, though; that is my guess from the title of the paper.

...because they are pushing a linear control system to the edge of its stability window to achieve the required agility...?

Cool stuff. I like seeing this stuff done on-board as the off-board stuff has limited utility. So are the code developments from this work being merged into PX4 Master?

Why is the recovery after passing through the gap so wobbly?