SYDNEY— Daniel Parfitt thought he’d found the perfect drone for a two-day mapping job in a remote patch of the Australian Outback. The roughly $80,000 machine had a wingspan of 7 feet and resembled a stealth bomber.

There was just one problem. His machine raised the hackles of one prominent local resident: a wedge-tailed eagle.

Swooping down from above, the eagle used its talons to punch a hole in the carbon fiber and Kevlar fuselage of Mr. Parfitt’s drone, which lost control and plummeted to the ground.

“I had 15 minutes to go on my last flight on my last day, and one of these wedge-tailed eagles just dive-bombed the drone and punched it out of the sky,” said Mr. Parfitt, who believed the drone was too big for a bird to damage. “It ended up being a pile of splinters.”

Weighing up to nine pounds with a wingspan that can approach eight feet, the wedge-tailed eagle is Australia’s largest bird of prey. Once vilified for killing sheep and targeted by bounty hunters, it is now legally protected. Though a subspecies is still endangered in Tasmania, the species is again dominating the skies across much of the continent.

These highly territorial raptors, which eat kangaroos, have no interest in yielding their apex-predator status to the increasing number of drones flying around the bush. They’ve even been known to harass the occasional human in a hang glider.

Birds all over the world have attacked drones, but the wedge-tailed eagle is particularly eager to engage in dogfights, operators say. Some try to evade these avian enemies by sending their drones into loops or steep climbs, or just mashing the throttle to outrun them.

A long-term solution remains up in the air. Camouflage techniques, like putting fake eyes on the drones, don’t appear to be fully effective, and some pilots have even considered arming drones with pepper spray or noise devices to ward off eagles.

They are the “ultimate angry birds,” said James Rennie, who started a drone-mapping and inspection business in Melbourne called Australian UAV. He figures that 20% of drone flights in rural areas get attacked by the eagles. On one occasion, he was forced to evade nine birds all gunning for his machine.

The birds are considered bigger bullies than their more-docile relatives, such as the bald and golden eagles in the U.S. Wedge-tailed eagles are the undisputed alpha birds in parts of Australia’s interior, but it’s not entirely clear why they’re so unusually aggressive toward drones. Scientists say the eagles probably go after drones because they view them as potential prey or a new competitor.

“They’re really the kings of the air in Australia,” said Todd Katzner, a biologist and eagle expert at the U.S. Geological Survey in Boise, Idaho. “There’s nothing out there that can compete with them.”

The problem is growing more acute as Australia makes a push to become a hot spot for drones. One state, Queensland, recently hosted the “World of Drones Congress” and last year gave about $780,000 to Boeing Co. for drone testing. Amazon.com is expanding in Australia and could try using drones for deliveries, and the machines are increasingly favored by big landowners such as miners and cattle ranchers.

The eagles will often attack in male-female pairs, and they aren’t always deterred if their first foray fails. Sometimes they will come from behind, attack in tandem from above, or even stagger their assault. A drone operator may evade one diving eagle with an upward climb, but the second eagle can then snatch it, Mr. Rennie said.

“If you take your eye off that aircraft even for a couple of minutes, the likelihood is it will end up in pieces on the ground,” he said.

In late 2015, Andrew Chapman, a co-owner at Australian UAV, was mapping a quarry and landfill site near Melbourne, and figured it was close enough to the city that an eagle attack was unlikely. But when the drone was about half a mile away, an eagle “materialized out of thin air and knocked out the drone,” Mr. Chapman said. He spent two days looking for the machine, worth about $35,000 at today’s retail price, and had to ship it to the manufacturer in Switzerland for repairs.

More exotic defenses have been considered. Mr. Chapman said arming drones with pepper spray was discussed but quickly discarded, out of concern it could harm the birds.

“It’s a relief to be planning for jobs overseas because we know the wedgies aren’t there,” said Mr. Chapman, using the local nickname for the bird.

Rick Steven, a survey superintendent at the St. Ives gold mine in Western Australia, who uses drones to survey the pits, debated using something like a ShuRoo, a device mounted on cars that emits a noise humans can’t hear to keep kangaroos off the road. But he was concerned it would be cumbersome on the drone and might not ward off eagles anyway.

Instead, Mr. Steven and other drone operators make use of another weapon: time. The eagles are less active in the early morning, because the thermals—columns of rising air—they use to fly don’t develop until later in the day after the sun has warmed the ground.

In his first 2½ years flying drones at the mine, Mr. Steven said he lost 12 drones to eagle attacks, which cost his employer, South Africa-based Gold Fields Ltd., some $210,000. During the past year, when he focused his flying in the morning, he has lost two, with two more close calls.

Any success in deterring wedge-tailed eagle attacks in Australia could provide clues on how to minimize avian obstacles in other regions.

“Every time I go to a conference on birds and they’re having a workshop on drones, somebody tells me about this problem in Australia, about these wedge-tailed eagles,” said David Bird, a retired wildlife biology professor in Canada and founding editor of the Journal of Unmanned Vehicle Systems.

Mr. Parfitt, who began his drone business Aerial Image Works about three years ago, remains vigilant. Each of his last three jobs attracted an eagle attack.

Other birds will “fly at the drone and they’ll act in a very aggressive manner, but they don’t actually touch you,” he said. “I’m not scared of anything else attacking my drone except the wedge-tailed eagle.”

Write to Mike Cherney at mike.cherney@wsj.com

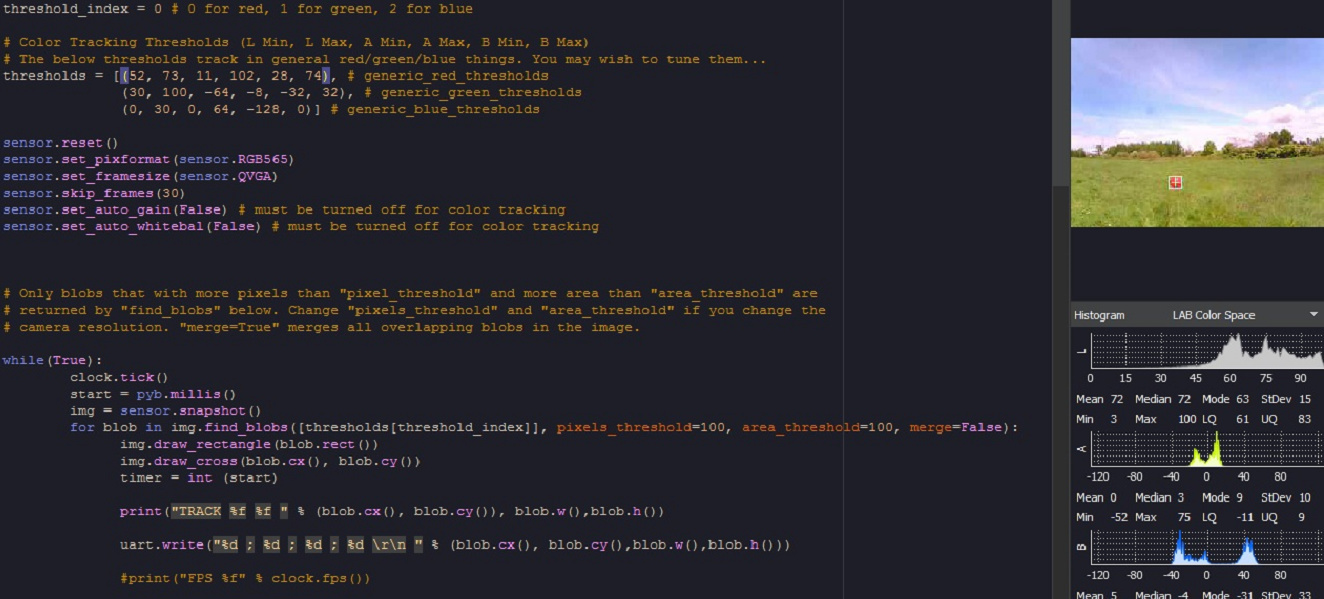

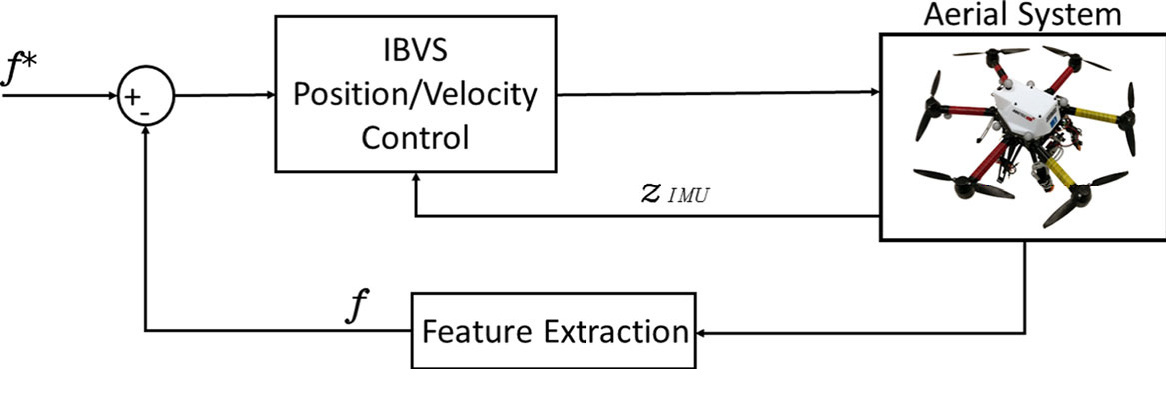

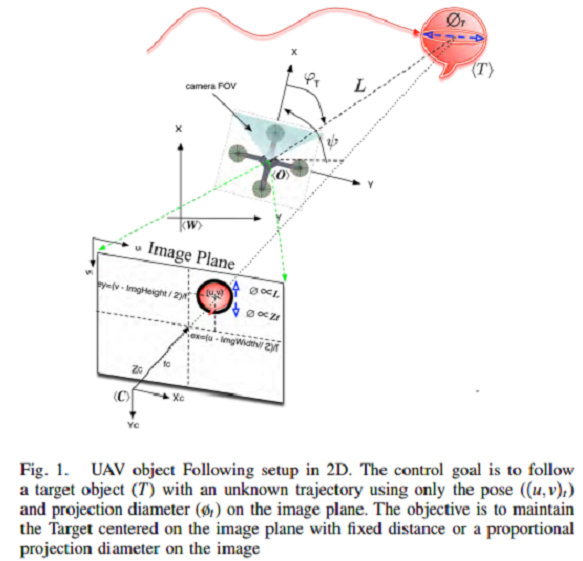

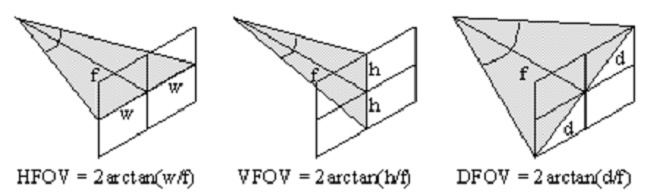

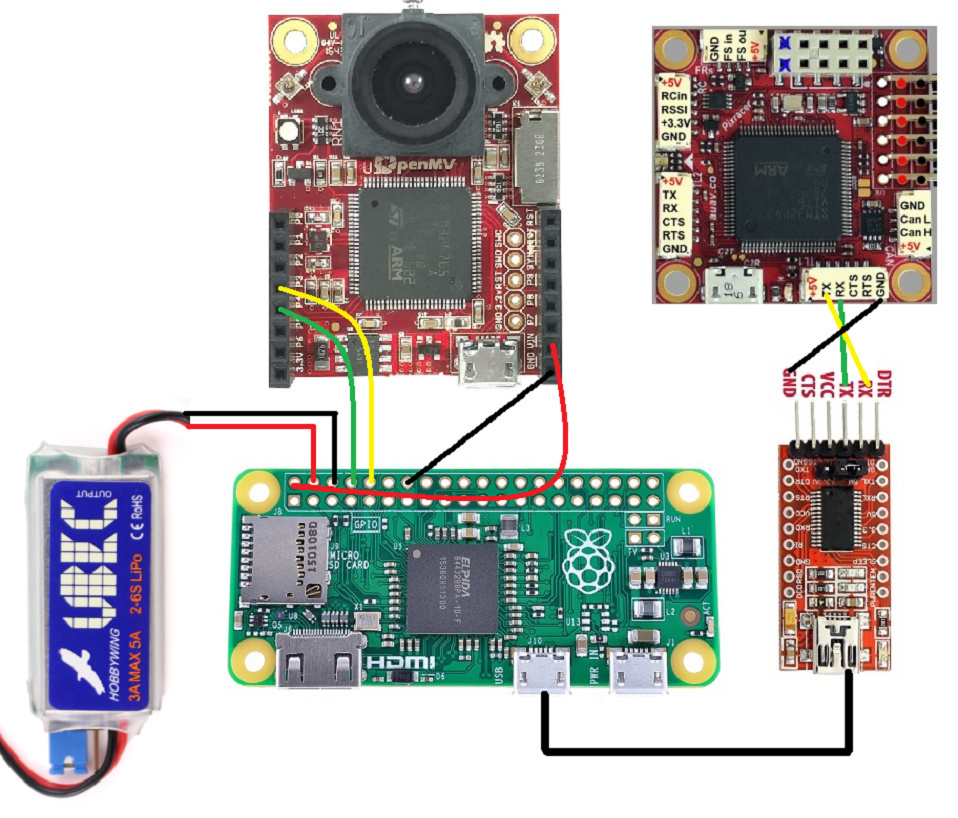

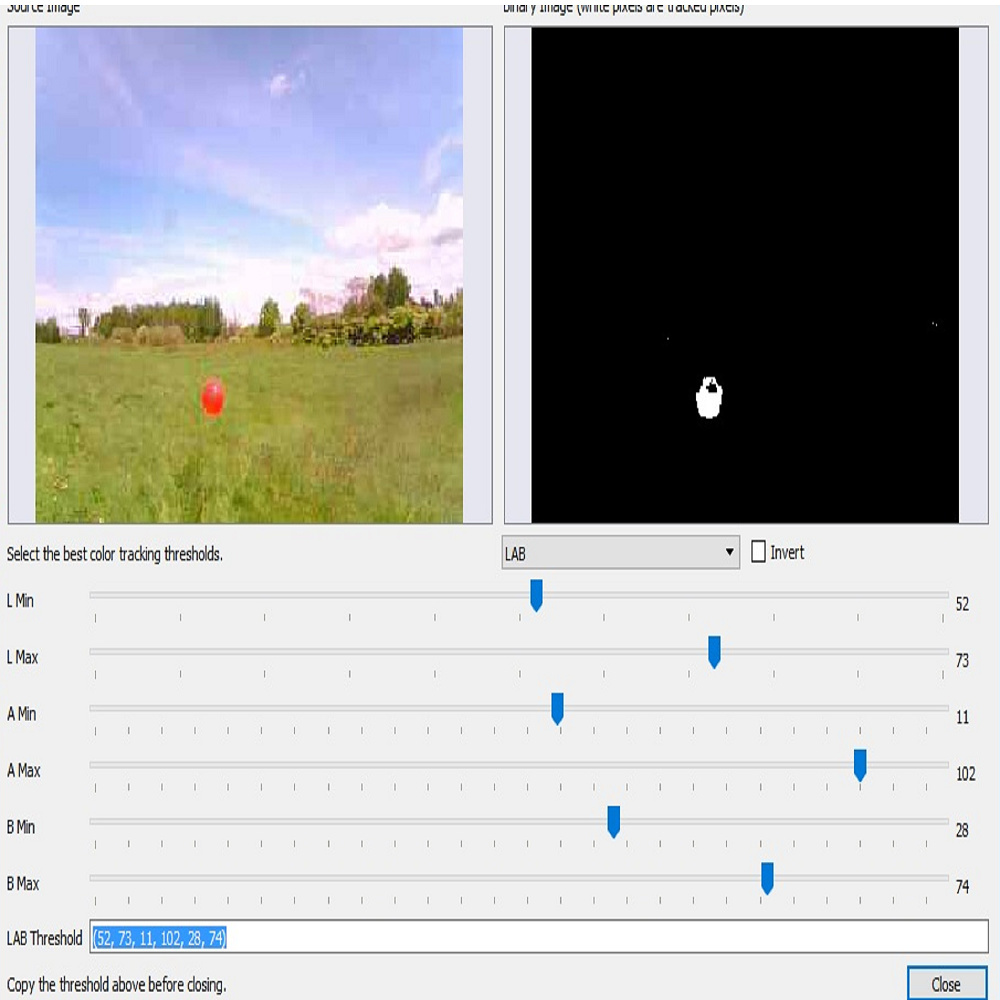

Researchers built their drone with off-the-shelf components to reduce costs.
