In the early 1930s, Reginald Denny, an English actor living in Los Angeles, stumbled upon a young boy flying a rubber band-powered airplane. Denny tried to help the boy by adjusting the rubber and control surfaces, and the plane promptly spun into the ground. Denny promised he would build another plane for the boy, and wrote to a New York model manufacturer for a kit. This first model airplane kit grew into his own hobby shop on Hollywood Boulevard, frequented by Jimmy Stewart and Henry Fonda.

The business blossomed into Radioplane Co. Inc., where Denny designed and built the first remote controlled military aircraft used by the United States. In 1944, Captain Ronald Reagan of the Army Air Forces’ Motion Picture unit wanted some film of these new flying targets and sent photographer David Conover to the Radioplane factory at the Van Nuys airport. There, Conover met Norma Jeane Dougherty and convinced her to go into modeling. She would later be known as Marilyn Monroe. The nexus of all American culture from 1930 to 1960 was a hobby shop that smelled of balsa sawdust and airplane glue. That hobby shop is now a 7-Eleven just off the 101 freeway.

Science historian James Burke had a wonderful TV show in the early 90s – Connections – where the previous paragraphs would be par for the course. Unfortunately, the timbre of public discourse has changed in the last twenty years, and the worldwide revolution in communications that lets people instantaneously exchange ideas has only led to people instantaneously exchanging opinions. The story of how the Dutch East India Company led to the rubber band led to Jimmy Stewart led to remote control led to Ronald Reagan led to Death of a Salesman has a modern fault: I’d have to use the word ‘drone’.

The word ‘propaganda’ only gained its negative connotation in the late 1930s – it’s now ‘public relations’. The phrase ‘global warming’ doesn’t work with idiots in winter, so now it’s called ‘climate change’. Likewise, quadcopter pilots don’t want anyone to think their flying machine can rain Hellfire missiles down on a neighborhood, so ‘drone’ is verboten. The preferred terms are quadcopters, tricopters, multicopters, flying wings, fixed-wing remote-controlled vehicles, unmanned aerial systems, or toys.

I’m slightly annoyed by this and by the reminder I kindly get in my inbox every time I use the dreaded d-word. The etymology of the word ‘drone’ has nothing to do with spying, firing missiles into hospitals, or illegally killing American civilians. People like to argue, though, and I need something to point to when someone complains about my misuse of the word ‘drone’. Instead of an article on Hollywood starlets, the first remote control systems, and model aviation, you get an article on the etymology of a word. You have no one else to blame but yourself, Internet.

An Introduction, and Why This Article Exists

This article is purely about the etymology of the word ‘drone’. Without exception, every article and blog post I read while researching this topic failed to consider whether an unmanned or remotely piloted aircraft was called a ‘drone’ before its maiden flight, or while it was being developed. For example, numerous articles refer to the Hewitt-Sperry Automatic Airplane as the first ‘drone’. For the purposes of this article, this is patently untrue. The word ‘drone’ was first applied to unmanned aircraft in late 1934 or early 1935, and a World War I-era experiment could never be considered a drone by contemporaneous sources. Consider this article a compendium of the evolution of the word ‘drone’ over time.

Why this article belongs on Hackaday should require no explanation. This is one of the Internet’s largest communities of grammar enthusiasts, peculiarly coming from a subculture where linguistic play (and exceptionally dry sarcasm) is encouraged. Truthfully, I am so very tired of hearing people complain about the use of the word ‘drone’ when referring to quadcopters and other remote-controlled toys. To me, this article simply exists as something I can point to when telling off offended quadrotor pilots. I am considering writing a bot to do this automatically. Perhaps I will call this bot a ‘drone’.

The Source of ‘Drone’ c. 1935

Before the word was used to describe aircraft, ‘drone’ had two meanings. First as a continuous low humming sound, and second as a male bee. The male bee does no work, gathers no honey, and only exists for the purpose of impregnating the queen. It’s not hard to see why ‘drone’ is the perfect word to describe a quadcopter — a Phantom is mindless, and sounds like a sack full of bees. Where then did the third definition of ‘drone’ come from, a flying machine without a pilot on board?

The most cited definition of ‘drone’ comes from a 2013 Wall Street Journal article [1] from linguist and lexicographer Ben Zimmer, tracing the first use of the word to 1935. In this year, US Admiral William H. Standley witnessed a British demonstration of the Royal Navy’s new remote-controlled aircraft for target practice. The aircraft used was based on the de Havilland Tiger Moth, a biplane trainer built in huge numbers during the interwar period, redesignated as the Queen Bee. The implication of Zimmer’s article is that the word ‘drone’ comes from the de Havilland Queen Bee. This etymology is repeated in a piece in the New York Times Magazine published just after World War II [2]:

Drones are not new; inventors were experimenting with them twenty-five years ago. Before the war, small specially built radio-controlled planes were used for anti-aircraft purposes – widely in England, where the name “drone” originated, less extensively here…. The form of radio control used in the experimental days was developed and refined so that it could be applied to nearly any type of conventional plane.

I found this obvious primary source for Ben Zimmer’s etymology of drone in five minutes, but it doesn’t tell anyone if the Queen Bee designation of a remote-controlled biplane came about from the word ‘drone’ or vice versa. This etymology doesn’t really give any information about the technical capabilities or the tactical use of these drones. The unmanned aircraft discussed in the New York Times article would be better called a cruise missile, not a drone. Was the Queen Bee an offensive drone, or was it merely a device built for target practice? These are questions that need to be answered if we’re going to tell the people flying Phantoms to buzz off with their drones.

The Queen Bee, with Churchill

Biology sometimes mirrors linguistics, and the best place to look for the history of ‘drone’, then, is the history of the Queen Bee. The Queen Bee – not its original name – was born out of British Air Ministry specification 18/33. At the time, the Air Ministry issued several specifications every year for different types of aircraft. The Supermarine Spitfire was originally known to the military as F.37/34; a fighter, based on the thirty-seventh specification published in 1934. Therefore, the specification for a ‘radio-controlled fleet gunnery target aircraft’ means the concept of what a ‘drone’ would be was defined in 1933. Drones, at least in the original sense of a military aircraft, are not offensive weapons. They’re target practice, with similar usage entering the US Navy in 1936, and the US Air Force in 1948. The question remains, did ‘drone’ come before the Queen Bee, or is it the other way around?

The first target drone was built between late 1933 and early 1935 at RAF Farnborough by combining the fuselage of the de Havilland Moth Major with the engine, wings, and control surfaces of the de Havilland Tiger Moth [3]. The aircraft was tested from an airbase, and later launched off HMS Orion for target practice. Gunnery crews noticed a particularly strange effect. This aircraft never turned, never pitched or rolled, and never changed its throttle position: this aircraft droned. It made a loud, low hum as it passed overhead. Drones are named for the hum, and the Queen Bee is just a clever play on words.

The word ‘drone’ does not come from the de Havilland Queen Bee, because the Queen Bee was originally a de Havilland Moth Major and Tiger Moth. ‘Queen Bee’, in fact, comes from ‘drone’, and ‘drone’ comes from the buzzing sound of an airplane flying slowly overhead. There’s a slight refinement of the etymology for you: the Brits brought the bantz, and a de Havilland was deemed a drone.

A ‘Drone’ is for Target Practice, 1936-1959

The word ‘drone’ entered the US Navy’s lexicon in 1936 [4] shortly after US Admiral William H. Standley arrived back from Europe, having viewed a Queen Bee being shot at by gunners on the HMS Orion. This would be the beginning of the US Navy’s use of the phrase, a term that would not officially enter the US Army and US Air Force’s lexicon for another decade.

Beginning in 1922, the US Navy would use an aircraft designation system to signify the role and manufacturer of any aircraft in the fleet. For example, the fourth (4) fighter (F) delivered to the Navy built by Vought (U) was the F4U Corsair. The first patrol bomber (PB) delivered by Consolidated (Y) was the PBY Catalina. In this system, ‘Drone’ makes an appearance in 1936, but only as ‘TD’, target drone, an airplane designed to be shot at for target practice.
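For readers who think better in code, the designation scheme described above can be sketched in a few lines of Python. This is an illustration only: the lookup tables contain just the codes mentioned in this article (the real system had many more), the function and class names are made up for this example, and the rule that a missing number means "first of its type" is an assumption drawn from the PBY example.

```python
import re
from typing import NamedTuple

# Only the codes named in this article; the real Navy tables were far larger.
ROLE_CODES = {"F": "fighter", "PB": "patrol bomber", "TD": "target drone"}
MAKER_CODES = {"U": "Vought", "Y": "Consolidated"}


class Designation(NamedTuple):
    role: str       # what the aircraft is for
    sequence: int   # which design of that role from that manufacturer
    maker: str      # who built it


# One or two role letters, an optional sequence number, one manufacturer letter.
_PATTERN = re.compile(r"^([A-Z]{1,2})(\d*)([A-Z])$")


def parse_designation(code: str) -> Designation:
    """Split a designation such as 'F4U' into role, sequence, and maker."""
    match = _PATTERN.match(code)
    if match is None:
        raise ValueError(f"not a recognizable designation: {code!r}")
    role_code, sequence, maker_code = match.groups()
    if role_code not in ROLE_CODES or maker_code not in MAKER_CODES:
        raise ValueError(f"unknown role or manufacturer code in {code!r}")
    # Assumption: an omitted number means the first design of that type.
    return Designation(
        role=ROLE_CODES[role_code],
        sequence=int(sequence) if sequence else 1,
        maker=MAKER_CODES[maker_code],
    )


if __name__ == "__main__":
    for code in ("F4U", "PBY"):
        print(code, parse_designation(code))
```

Under these assumptions, ‘F4U’ comes back as the fourth fighter from Vought and ‘PBY’ as the first patrol bomber from Consolidated. A ‘TD’ designation would parse the same way once a manufacturer letter is appended.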

A QB-17 drone at Holloman AFB, 1959.

For nearly twenty years following the introduction of the word into military parlance, ‘drone’ meant only a remote controlled aircraft used for target practice. B-17 and PB4Y (B-24) bombers converted to remote control under Operation Aphrodite and Operation Anvil were referred to as ‘guided bombs’. Just a few years after World War II, quite possibly using the same personnel and the same radio control technology that was developed during Operation Aphrodite, war surplus B-17s would be repurposed for use as target practice, where they would be called target drones. Obviously, ‘drone’ meant only target practice until the late 1950s.

If you’re looking for a proper etymology and definition of the most modern sense of the word ‘drone’, there you have it. It’s a remote-controlled plane designed for target practice. For the quadcopter pilots who dabble in lexicography, have an interest in linguistic purity, and are utterly offended by calling their flying camera platform a ‘drone’, there’s the evidence. A ‘drone’ has nothing to do with firing weapons down on a population or spying on civilians from forty thousand feet. In the original sense of the word, a drone is simply a remote-controlled aircraft designed to be shot at.

Language changes, though, and to successfully defend against all critics of my use of the word ‘drone’ as applying to all remote controlled aircraft, I’ll have to trace the usage of the word drone up to modern times.

The Changing Definition of ‘Drone’, 1960-1965

A word used for a quarter century will undoubtedly gain a few more definitions, and in the early 1960s, the definition of ‘drone’ was expanding from an aerial target used by British forces in World War II to a word that could be retroactively applied to the German V-1, an aerial target used by the British forces in World War II.

The next evolution of the word ‘drone’ can be found in the New York Times, November 19, 1964 edition [5], again from Pulitzer Prize-winning author Hanson W. Baldwin. Surely the first reporter on the ‘drone’ beat has more to add to the linguistic history of the word. In the twenty years since Mr. Baldwin introduced the public to the word ‘drone’, a few more capabilities had been added to these unmanned aircraft:

Drone, or unpiloted aircraft, have been used for military and experimental purposes for more than a quarter of a century.

Since the spectacular German V-1, or winged missile, in World War II, advances in electronics and missile-guidance systems have fostered the development of drone aircraft that appear to be almost like piloted craft in their maneuverability.

The description of the capabilities of drones continues on to anti-submarine warfare, battlefield surveillance, and the classic application of target practice. Even in the aerospace industry, the definition of ‘drone’ was changing ever so slightly from a very complex clay pigeon to something slightly more capable.

In the early 1960s, NASA was given the challenge of putting a man on the moon. This challenge required docking spacecraft, and at the time Kennedy issued it, no one knew how to perform this feat of orbital mechanics. Martin Marietta proposed a solution to this problem, and they did it with drones.

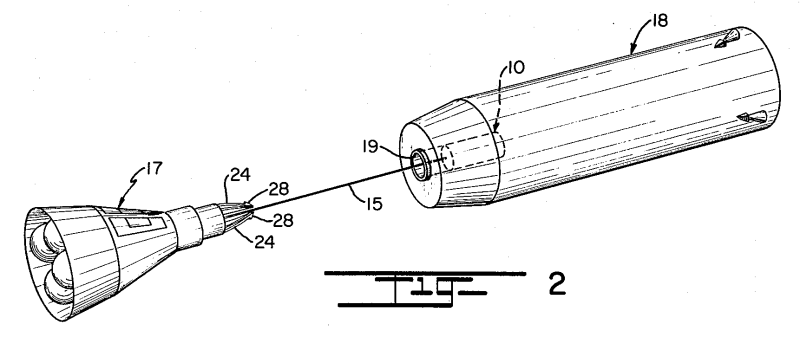

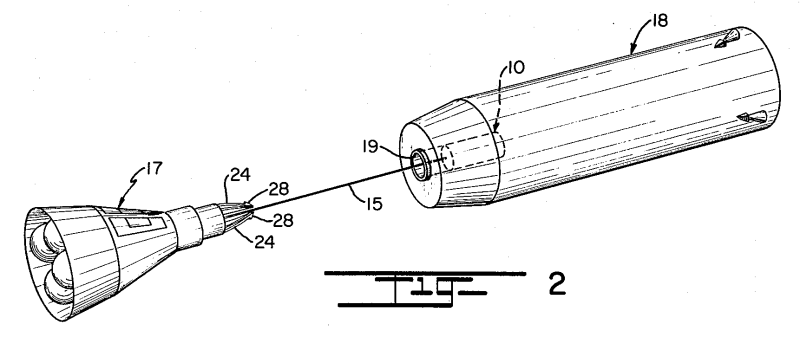

US patent 3,201,065 solves the problem of docking two spacecraft and does it with a drone.

Orbital docking was a problem NASA needed to solve before getting to the moon, and the solution came from the Gemini program. Beginning with the Gemini program, astronauts would perform an orbital rendezvous and dock with an unmanned spacecraft launched a few hours or days earlier. Later missions used the engine on the Agena to boost their orbit to world altitude records. The first experiments in artificial gravity came from tethering the Gemini capsule to the Agena and spinning the spacecraft around a common point.

The unmanned spacecraft used in the Gemini program, the Agena Target Vehicle, was not a drone. However, years before these rendezvous and docking missions would pave the way to a lunar landing, engineers at Martin Marietta would devise a method of bringing two spacecraft together with a device they called a ‘drone’ [6].

Martin Marietta’s patent 3,201,065 used an autonomous, remote-controlled spacecraft tethered to the nose of a Gemini spacecraft. Laden with a tank of pressurized gas, a few thrusters, and an electromagnet, an astronaut would fly this ‘docking drone’ into a receptacle in the target vehicle, activate the electromagnet, and reel in the tether bringing two spacecraft together. Here the drone was, like the target drones of World War II, remote-controlled. This drone spacecraft never flew, but it does show the expanding use of the word ‘drone’, especially in the aerospace industry.

If you’re looking for an unimaginably cool drone that actually took to the air, you need only look at the Lockheed D-21, a reconnaissance aircraft designed to fly over Red China at Mach 3.

The M-21 carrier aircraft and D-21 drone. The M-21 was a variant of the A-12 reconnaissance aircraft, predecessor to the SR-71 reconnaissance aircraft.

The ‘D’ in ‘D-21’ means ‘daughter’, and the carrier aircraft for this unmanned spy plane is the M-21, ‘M’ meaning ‘mother’. Nevertheless, the D-21 was referred to in contemporary sources as a drone. The D-21 was perhaps the first drone referred to as such that was a pure observation aircraft, meant to spy on the enemy.

The 1960s didn’t just give drones the ability to haul a camera over the enemy. 1960 saw the first offensive drone – the first drone referred to as such that was able to drop homing torpedos into the ocean above enemy submarines.

The Gyrodyne QH-50 – also known as DASH, the Drone Anti-Submarine Helicopter – was the US Navy’s answer to a problem. At the time, the Soviets were building submarines faster than the United States could build anti-submarine frigates. Older ships were available, but these ships weren’t large enough for a full-sized helicopter. The solution was a drone that could launch off the deck, fly a few miles to an interesting ping on the sonar, and drop a torpedo. The solution was the first offensive drone, the first unmanned aircraft capable of delivering a weapon.

The QH-50 was a relatively small coaxial helicopter piloted by remote control. It was big enough to haul one torpedo twenty miles away from a ship and have this self-guided torpedo take care of the rest.

The QH-50 was a historical curiosity born from two realities. The US Navy had anti-submarine ships that could detect Soviet subs dozens of miles away. These anti-submarine ships didn’t have torpedos with that range and didn’t have a flight deck to launch larger helicopters. The QH-50 was the result, but new ships and more capable torpedos made this drone obsolete in less than a decade. An otherwise entirely unremarkable weapons platform, the QH-50 has one claim to fame: it was the first drone, referred to as such in contemporary sources, that could launch a weapon. It was the first offensive drone.

The Confusion of the Tongues, c. 1965-2000

On June 13, 1963, a Reuters article reported a joint venture between Britain and Canada to build an unmanned spy plane, specifically referred to as an ‘unmanned aerial vehicle’ [7]. The reporter, with full knowledge of the previous two decades of unmanned aerospace achievement, said this new project was ‘commonly referred to as a drone.’ By the mid-60s, the word ‘drone’ had its fully modern definition: it was simply any unmanned aerial vehicle, used for any purpose, ostensibly controlled in any manner. This definition was being supplanted by several competing terms, including ‘unmanned aerial vehicle’ and ‘remotely piloted vehicle’.

The term ‘drone’ would be usurped in common parlance by the newer, clumsier term, ‘unmanned aerial vehicle.’ A word that once referred to everything from flying targets to spacecraft subsystems would now be replaced. The term ‘unmanned aerial vehicle’ would make its first public military appearance in the Department of Defense report on Appropriations for 1972. The related term ‘remotely piloted vehicle’, or RPV, would first appear in government documents in the late 1980s. From the word drone, a thousand slightly different terms were born in the 60s, 70s, and 80s. Even today, ‘unmanned aerial system’ is the preferred term used by the FAA. This phrase was created less than a decade ago.

Engineers built drones to surveil the Communist Chinese at Mach 3. Engineers patented a drone to dock two spacecraft together. Engineers built drones to hunt and sink submarines. The Air Force took old planes, painted them orange, and called them target drones. So the Lord scattered them abroad over the face of all the Earth, and they ceased calling their aircraft drones.

In the 1970s, 80s, and 90s, the term ‘drone’ would still be applied to target aircraft, and even today it is still the preferred term for unmanned military aircraft used for target practice. Elsewhere in the military, the vast array of new and novel applications of unmanned aircraft has meant new terms have cropped up.

Why these new terms were created is open to debate and interpretation. The military and aerospace companies have never shied away from a plague of acronyms, and a dizzying array of random letters thrown into a report is the easiest way of ensuring operational security. How can the enemy know what we’re doing if we don’t know ourselves? It’s questionable if the improved capabilities of drones, such as dropping torpedos or relaying video, can account for the vast array of acronyms — it appears these new acronyms were simply the creation of a few captains, majors, and engineers either at the Pentagon or one of a dozen aerospace companies. By the 1990s, the word drone was in a state of disuse, replaced by ‘UAV’, ‘RPV’, ‘UAS’, and a dozen other phrases synonymous with the word drone.

The Era of the Modern Drone, October 21, 2001 – Present

The definitive image of the modern drone is that of the General Atomics MQ-1 Predator laden with a Hellfire anti-tank missile on each wing. The Predator is an unmistakable aircraft featuring a bulbous nose just barely large enough to house the satellite antennae underneath. A small camera pod hangs off its chin. The long, thin wings appear as if they were stolen off a glider. A small propeller is mounted directly on the tail, and the unique inverted v-tail gives the impression this aircraft can never land, lest it be destroyed.

The Predator program began in the mid-1990s and was from the get-go referred to as an Unmanned Aerial Vehicle, or UAV. This changed on October 21, 2001, in a Washington Post article from Bob Woodward. In the article, CIA Told to Do ‘Whatever Necessary’ to Kill Bin Laden, Woodward reintroduced the word ‘drone’ into the vernacular [8]. The drone in question was a CIA-operated Predator equipped with “Hellfire antitank missiles that can be fired at targets of opportunity.” Woodward, either through conversations with military officials, remembering the old term for this type of aircraft, needing a new word to describe this weapon delivery system, or simply being fed up with the alphabet soup of acronyms, chose to use the word ‘drone’.

If you’re angry at the word ‘drone’ being applied to a Phantom quadcopter, you have two people to blame. The first is Hanson W. Baldwin, military editor of the New York Times. Over a career of forty years, he used the word ‘drone’ to describe everything from target aircraft to cruise missiles. The second is Bob Woodward of the Washington Post. The man who broke Watergate also reintroduced the word ‘drone’ into the American consciousness.

A Briefer History Of ‘Drone’, and an Argument for its Use

The word ‘drone’ was first applied to unmanned aircraft in late 1934 or early 1935 because biplanes flying low overhead sound like a cloud of bees. For twenty-five years, ‘drone’ applied only to aircraft used as targets. Beginning in the late 1950s and early 1960s, the definition of ‘drone’ expanded to include all unmanned aircraft, from cruise missiles to spaceships. Around 1965, acronyms such as ‘UAV’ and ‘RPV’ took over, either because they were more descriptive or as a function of the military aerospace industry’s obsession with acronyms. In the late 1990s, the US Air Force and CIA began experimenting with Predator UAVs and Hellfire missiles. The first use of this weapons platform was mere weeks after the 9/11 attacks. This weapons platform became known as a Predator ‘drone’ in late 2001 thanks to Bob Woodward. Colloquially, the term ‘drone’ now applies to everything from unmanned military aircraft to quadcopters that fit in the palm of your hand.

The most frequently cited reason for not using the word ‘drone’ to describe everything from racing quadcopters to remote-controlled fixed wing aircraft orbiting a point for hours is linguistic purity. Words have meaning, so the argument goes, and it’s much better to use precise language to describe individual aircraft. A quadcopter is just that — a quadcopter. An autonomous plane used for inspecting pipelines is an unmanned aircraft system.

The argument of linguistic purity fails immediately, as the word ‘drone’ was applied to every conceivable aircraft at some time in history. In the 1960s, a ‘drone’ could mean a spaceship or spy plane. In the 1940s, a ‘drone’ simply meant an aircraft that was indistinguishable in characteristics from a balsa wood, gas powered remote controlled airplane of today. Even accepting the argument of linguistic purity has consequences: ‘drone’ originally meant ‘target drone’, an aircraft flown only for target practice. Sure, keep flying, I’ll go get my 12 gauge.

The argument against applying the word ‘drone’ to what are effectively toys, on the basis of language being defined by common parlance, fails by tautology. ‘Drone’, critics say, only applies to military aircraft used for spying or raining Hellfires down on the enemy. It’s been this way since 2001, and since language is defined by common usage, the word ‘drone’ should not be applied to a Phantom quadcopter. This argument fails to consider that the word ‘drone’ has been applied to the Phantom since its introduction, and if language is defined by common usage then surely a quadcopter can be called a drone.

Instead of linguistic trickery, I choose to argue for the application of ‘drone’ on a philosophical basis. You are now reading this article on Hackaday, and for the last thirty years, a ‘hacker’ has been someone who breaks into computer systems, steals money from banks, leaks passwords to the darknet, and engages in other illegal activities. Many other negative appellations apply to these activities; ‘crackers’ are those who simply break stuff, ‘script kiddies’ are responsible for the latest DDOS attack. Overall, though, ‘hackers’ is the collective that causes the most damage, or so the dictionary definition goes.

Obviously, the image of ‘hacking’ being only illegal or immoral is not one we embrace. The word is right there at the top of every page, and every word written here exudes the definition we want. ‘Hacking’, to us, is firmware tomfoolery, and electronic explorations of what should be possible but isn’t available to the public. We own the word ‘hack’ in every word we publish by extolling the virtues of independent study and discovery.

Everyone here learned a very long time ago you don’t impress people with pedantry. You won’t convert anyone from believing hackers stole aunt Mable’s identity to believing ‘hack’ is an inherently neutral term simply by telling them. Be the change you want to see in the world or some other idiotic phrase from a motivational poster, but the point remains. It’s always better to own a term than to insufferably deny it. It’s a lesson we’ve learned over the last decade, and hopefully one the drone community will soon pick up.

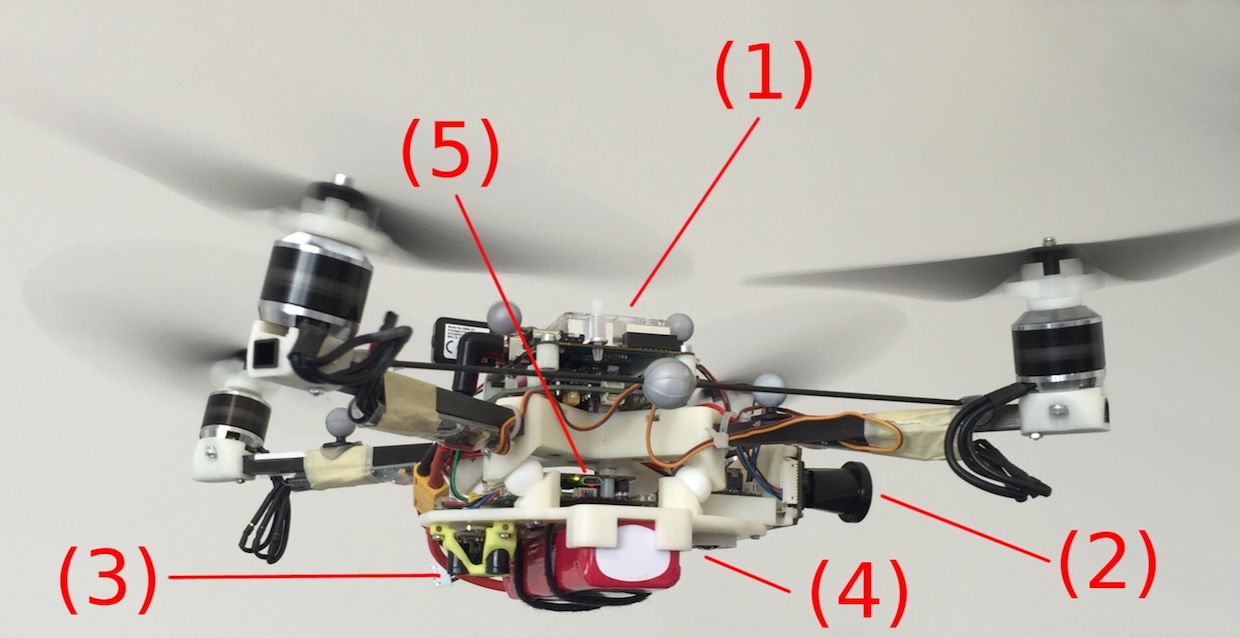

Photo: Robotics and Perception Group at the University of Zurich. The quadrotor platform used in the experiments. (1) Onboard computer. (2) Forward-facing fisheye camera. (3) TeraRanger One distance sensor and (4) downward-facing camera, both used solely during the recovery phase. (5) PX4 autopilot. The motors are tilted by 15° to provide three times more yaw-control action, while only losing 3 percent of the collective thrust.
