Originally published on Medium.

Announcing alpha support for the PX4 flight stack on the path towards drones that speak ROS natively.

The drone field is an interesting one to analyze from a robotics perspective. While capable flying robots are reasonably new, RC hobbyists have been building flying machines for much longer, developing communities around the so-called flight stacks, or software autopilots.

Among these, there are popular options such as Paparazzi, APM (commonly known as ArduPilot) and PX4. These autopilots matured to the point of acquiring autonomous capabilities, turning these flying machines into actual drones. Many of these open source flight stacks provide a general codebase for building basic drone behaviors; however, modifications are generally needed when one intends to tackle traditional robotics problems such as navigation, mapping or obstacle avoidance. Making these modifications directly in the autopilot code is not straightforward, so abstraction layers such as DroneKit started appearing in an attempt to enhance (or sometimes just simplify) the capabilities of autopilots.

For a roboticist, however, the common language is the Robot Operating System (ROS). Getting ROS to talk to these flight stacks natively would require a considerable amount of resources and effort, so roboticists generally use a bridge such as the mavros ROS package, which translates between the flight stack's MAVLink messages and ROS topics and services.

We at Erle Robotics have been offering services with flying robots using such an architecture, but we've always wondered what the path towards a ROS-native drone would look like. To explore this possibility, we've added support for the PX4 Pro flight stack.

Supporting the PX4 Pro flight stack

The PX4 Pro drone autopilot is an open source flight control solution for drones that can “fly anything from a racing to a cargo drone — be it a multi copter, plane or VTOL”. PX4 has been built with a philosophy similar to ROS: it is composed of different software blocks (modules) that communicate with each other through a publish/subscribe architecture (currently, a simplified pub/sub middleware called uORB).

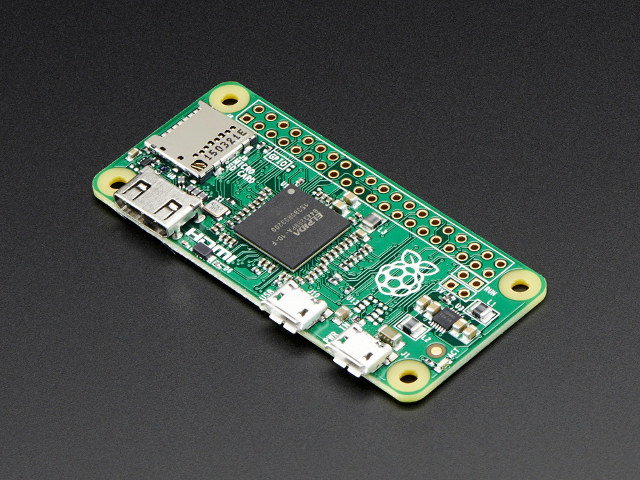
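To make the publish/subscribe idea more concrete, here is a minimal Python sketch of a topic that keeps only its latest sample, which subscriber modules poll for updates at their own rate. It is loosely inspired by how uORB topics are commonly described, but the `Topic` and `Subscription` classes, their methods, the `Attitude` message and the topic name are hypothetical illustrations, not PX4's actual uORB API (which is C/C++).

```python
from dataclasses import dataclass
from typing import Any, Optional


class Topic:
    """Hypothetical latest-value topic: each publish overwrites the previous sample."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.data: Optional[Any] = None
        self.generation = 0  # incremented on every publish

    def publish(self, data: Any) -> None:
        self.data = data
        self.generation += 1


class Subscription:
    """Hypothetical subscriber handle that polls a topic for new samples."""

    def __init__(self, topic: Topic) -> None:
        self.topic = topic
        self.last_seen = 0  # generation of the last sample this subscriber copied

    def updated(self) -> bool:
        # True if something was published since the last copy()
        return self.topic.generation != self.last_seen

    def copy(self) -> Optional[Any]:
        # Return the newest sample and mark it as seen
        self.last_seen = self.topic.generation
        return self.topic.data


@dataclass
class Attitude:
    # All angles in radians
    roll: float
    pitch: float
    yaw: float


# An estimator module publishes; a controller module polls when it runs.
attitude = Topic("vehicle_attitude")
controller_sub = Subscription(attitude)
print(controller_sub.updated())  # False: nothing published yet
attitude.publish(Attitude(0.0, 0.1, 1.5))
attitude.publish(Attitude(0.0, 0.2, 1.5))  # overwrites the previous sample
print(controller_sub.updated())  # True
print(controller_sub.copy().pitch)  # 0.2: only the newest sample is kept
print(controller_sub.updated())  # False until the next publish
```

Keeping only the newest sample suits control loops, where a stale estimate is less useful than the current one; a queued design would instead deliver every message, at the cost of buffering.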

In an internal effort to research the path towards ROS-native flight stacks, and to open this work up to the community, I'm happy to announce official alpha support for PX4 Pro in all our products meant for developers, such as the PXFmini, Erle-Brain 2 and Erle-Copter. Our team has put together a new set of operating system images for our products that will help you switch between flight stacks easily.

To install PX4 Pro, just type the following:

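```
# Remove the installed APM flight stack package, e.g.: apm-copter-erlebrain
sudo apt-get purge -y 'apm-*'
# Refresh the package index and install PX4 Pro
sudo apt-get update
sudo apt-get install px4-erle-robotics
```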

ROS-native flight stacks

Using the PX4 Pro flight stack as a starting point, our team will dedicate resources to prototyping a drone autopilot that speaks ROS natively, that is, one that uses ROS nodes to abstract each submodule of the autopilot's logic (attitude estimator, position control, navigator, …) and ROS topics/services to communicate with the rest of the blocks within the autopilot.
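As a rough illustration of that decomposition, the sketch below wires three toy modules together purely through named topics: a navigator publishes an altitude setpoint, an estimator smooths raw barometer readings into an altitude estimate, and a position controller combines both into a thrust command. An in-process `Bus` class with callbacks stands in for ROS topics so the example runs without a ROS installation; all class names, topic names, gains and the proportional control law are hypothetical assumptions, not the actual design of this initiative or of PX4.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, List, Optional


class Bus:
    """Hypothetical stand-in for ROS topics: subscriber callbacks run on publish."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, msg: Any) -> None:
        for callback in self._subscribers[topic]:
            callback(msg)


@dataclass
class Setpoint:
    z: float  # desired altitude in metres


@dataclass
class Thrust:
    value: float  # normalised thrust command in [0, 1]


class Navigator:
    """Hypothetical navigator node: publishes altitude setpoints on /setpoint."""

    def __init__(self, bus: Bus) -> None:
        self.bus = bus

    def go_to(self, z: float) -> None:
        self.bus.publish("/setpoint", Setpoint(z))


class AltitudeEstimator:
    """Hypothetical estimator node: smooths /baro readings into /altitude (EMA)."""

    def __init__(self, bus: Bus, alpha: float = 0.5) -> None:
        self.bus = bus
        self.alpha = alpha
        self.estimate: Optional[float] = None
        bus.subscribe("/baro", self.on_baro)

    def on_baro(self, z: float) -> None:
        if self.estimate is None:
            self.estimate = z  # first reading initialises the filter
        else:
            self.estimate = self.alpha * z + (1 - self.alpha) * self.estimate
        self.bus.publish("/altitude", self.estimate)


class PositionControl:
    """Hypothetical controller node: /setpoint + /altitude -> /thrust (P control)."""

    def __init__(self, bus: Bus, gain: float = 0.5, hover: float = 0.5) -> None:
        self.bus = bus
        self.gain = gain
        self.hover = hover  # thrust that roughly holds altitude
        self.target = 0.0
        bus.subscribe("/setpoint", self.on_setpoint)
        bus.subscribe("/altitude", self.on_altitude)

    def on_setpoint(self, msg: Setpoint) -> None:
        self.target = msg.z

    def on_altitude(self, z: float) -> None:
        error = self.target - z
        thrust = min(1.0, max(0.0, self.hover + self.gain * error))  # clamp to [0, 1]
        self.bus.publish("/thrust", Thrust(thrust))


bus = Bus()
thrust_log: List[float] = []
bus.subscribe("/thrust", lambda msg: thrust_log.append(msg.value))
AltitudeEstimator(bus)
PositionControl(bus)
Navigator(bus).go_to(2.0)
bus.publish("/baro", 1.5)  # estimate 1.5, error 0.5
bus.publish("/baro", 2.5)  # estimate 2.0, error 0.0
print(thrust_log)  # [0.75, 0.5]
```

Because each module only knows topic names, any one of them could be swapped for a different implementation (or moved to another process, as real ROS nodes would be) without touching the others.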

Ultimately, this initiative should deliver a software autopilot that can power a wide variety of drones and integrates cleanly with all the traditional ROS interfaces that roboticists have been building for over a decade now.

If you're interested in participating in this initiative, reach out to us.