Killer drone plows helpless drones out of the sky (viewer discretion advised)

Installation video:

Striver mini VTOL airframe foam bonding (1)

Striver mini VTOL fuselage accessories bonding (2)

Striver mini VTOL empty aircraft tail bonding (3)

Striver mini VTOL wing bonding (4)

Information link:

https://github.com/makeflyeasy/MFE_ArduPlane

Hi, I'm building an ArduRover with skid steering. The platform is based on the DFROBOT Pirate 4WD, with the DC motors driven by an L298N dual motor driver.

I'm using the firmware for Pixhawk 1 (fmuv3):

4.0.0-FIRMWARE_VERSION_TYPE_OFFICIAL

Following the ArduPilot docs for Rover, my configuration for CH1 and CH3 is as follows.

For “skid steering” vehicles (like R2D2), these parameter values need to be set:

- SERVO1_FUNCTION = 73 (Throttle Left)

- SERVO3_FUNCTION = 74 (Throttle Right)
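
With SERVO1_FUNCTION and SERVO3_FUNCTION set to Throttle Left and Throttle Right, the autopilot has to turn the steering and throttle inputs into one command per side. The basic idea of a skid-steer mix is sketched below, assuming normalized inputs from -1 to 1. This is a simplified illustration, not ArduPilot's actual code (its AP_MotorsUGV library adds its own scaling and limits), and the function name is hypothetical.

```python
def skid_steer_mix(throttle: float, steering: float) -> tuple[float, float]:
    """Mix normalized throttle and steering into left/right motor commands.

    Inputs and outputs are in the range -1.0..1.0. Positive steering means
    "turn right", so the left side speeds up and the right side slows down.
    """
    left = throttle + steering
    right = throttle - steering
    # If either side saturates, scale both sides by the same factor so the
    # ratio between them (and therefore the turn) is preserved.
    largest = max(abs(left), abs(right), 1.0)
    return left / largest, right / largest


if __name__ == "__main__":
    print(skid_steer_mix(0.0, -0.25))  # pivot left: left side back, right forward
    print(skid_steer_mix(1.0, 0.5))    # full throttle with a right turn
```

Scaling both sides together, rather than clipping each one, keeps the rover turning in the intended direction even at full throttle.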

Actually, the direction depends on how you wire the L298N.

Here is the schematic of how I wired it.

The ENA and ENB jumpers were removed.

For skid-steering vehicles like the DFROBOT Pirate 4WD, set MOT_PWM_TYPE = 3 (BrushedWithRelay).
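
Removing the ENA/ENB jumpers lets the L298N enable pins take a PWM speed signal, while the IN pins set the direction, which matches the speed-plus-direction style of a brushed output mode. The sketch below converts one signed motor command into the pin states for one L298N channel. The class and function names are hypothetical, and it assumes IN1 high / IN2 low means forward; on your rover that depends on the wiring.

```python
from typing import NamedTuple


class L298NChannel(NamedTuple):
    """Pin states for one L298N channel (hypothetical representation)."""
    duty: float  # PWM duty cycle on the ENA/ENB pin, 0.0..1.0
    in1: bool    # IN1 (or IN3) logic level
    in2: bool    # IN2 (or IN4) logic level


def to_l298n(command: float) -> L298NChannel:
    """Convert a signed motor command (-1.0..1.0) into L298N pin states.

    The magnitude becomes the PWM duty on the enable pin, and the sign picks
    the direction by driving IN1/IN2 to opposite levels.
    """
    command = max(-1.0, min(1.0, command))  # clamp out-of-range commands
    forward = command >= 0.0                # assumed wiring: IN1 high = forward
    return L298NChannel(duty=abs(command), in1=forward, in2=not forward)


if __name__ == "__main__":
    print(to_l298n(-0.4))  # 40% duty, reverse direction
```

If a motor spins the wrong way, swapping its two motor leads (or the IN1/IN2 logic) is usually simpler than changing parameters.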

On my transmitter, with the throttle at zero, pushing CH1 a quarter of the way to the left rotates the rover to the left (left wheel stopped, right wheel moving), and pushing it right does the opposite. But if I push it all the way, the rover moves forward fast.

If CH3 is pushed a little past half (55%), the rover moves forward slowly. I'm still setting up some parameters for smoother movement.

When acquiring a mapping or surveying drone, the choice quickly narrows down to a fixed-wing aircraft with Vertical Takeoff and Landing (VTOL) capability, for its vastly greater range, versatility and ease of use. Within this segment, there are several commercial-grade solutions of European origin, but comparing their capabilities and limitations can be difficult.

The following comparison was made to provide a detailed insight into the characteristics of the leading suppliers in this field. The data has been verified across multiple sources. Several aspects have been calculated to provide a consistent representation of the data. The calculation methods and sources are provided at the bottom of this article.

The platforms chosen for this comparison are:

- The DeltaQuad Pro #MAP by Vertical Technologies

- The WingtraOne by Wingtra

- The Trinity F90+ by Quantum Systems

- The Marlyn by AtmosUAV

- The eBee X by SenseFly

In this article, you will find an abstract of the comparison.

Click here to read the full comparison

Key Features

A quick rundown of the most critical aspects that are relevant to mapping.

- Max flight time is calculated at sea level with camera payload.

- The coverage is calculated by multiplying the maximum flight distance by the ground width that a single image covers at the maximum camera resolution. It is based on 3 cm per pixel with an overlap of 50%.

- To compare pricing, we selected for each model the package that most closely matches: a 42 MP camera, <1 cm PPK, 2 batteries, a standard radio, and a GCS (if available).
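
One way to read this coverage rule: flight distance multiplied by the fresh ground width each flight line adds, where that width is the image width in pixels × 3 cm per pixel × (1 − 50% overlap). The sketch below makes those assumptions explicit: straight parallel flight lines, the long image side across track, and no allowance for turns, takeoff, landing, or transit. The comparison's own calculation may differ, and the 100 km distance and 7952 px image width in the usage line are illustrative values only.

```python
def coverage_hectares(distance_km: float, image_width_px: int,
                      gsd_m: float = 0.03, side_overlap: float = 0.5) -> float:
    """Rough survey area for one flight (illustrative model, not the article's).

    Assumes straight parallel flight lines with the camera's long side across
    track, and ignores turns, takeoff/landing, and transit to the survey area.
    """
    footprint_width_m = image_width_px * gsd_m                # ground width of one image
    new_width_m = footprint_width_m * (1.0 - side_overlap)    # fresh ground per line
    area_m2 = distance_km * 1000.0 * new_width_m
    return area_m2 / 10_000.0                                 # 1 ha = 10,000 m^2


if __name__ == "__main__":
    # Hypothetical: 100 km of flight lines with a 7952 px wide (42 MP) image
    print(f"{coverage_hectares(100, 7952):.0f} ha")
```

Because coverage scales linearly with both distance and pixel width, doubling either one roughly doubles the surveyed area under this model.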

Maximum surveying area in Hectares

Maximum telemetry range

The maximum range at which the UAV can be controlled. Long-range communication is important for corridor-type surveys such as power lines, pipelines, railways, and roads.

The indicated ranges are the maximum radio ranges as specified by the supplier. Ranges achieved in practice can be lower.

Maximum image resolution

The maximum image resolution in Megapixels is the total number of pixels that make up a single image. This can be an important factor for a fixed-wing/VTOL UAV.

A higher resolution allows:

- Covering larger areas

- Flying at higher altitudes

- Producing higher resolution end results

- Better post-processing performance with more accuracy
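
The altitude benefit follows from the standard ground sampling distance (GSD) relation, GSD = sensor width × altitude / (focal length × image width in pixels). Solving for altitude shows that, with the same sensor, lens, and target GSD, more pixels across the image let you fly higher and so cover a wider strip per pass. The camera values in the usage lines (a 35.9 mm wide sensor behind a 35 mm lens, 6000 vs 7952 px wide) are illustrative assumptions, not specifications from the comparison.

```python
def altitude_for_gsd(gsd_m: float, focal_length_mm: float,
                     sensor_width_mm: float, image_width_px: int) -> float:
    """Flight altitude (m above ground) that yields the requested GSD.

    From the pinhole-camera relation
        GSD = sensor_width * altitude / (focal_length * image_width_px),
    solved for altitude. Focal length and sensor width share units, so they cancel.
    """
    return gsd_m * focal_length_mm * image_width_px / sensor_width_mm


if __name__ == "__main__":
    # Illustrative full-frame camera: 35.9 mm sensor width, 35 mm lens
    for label, width_px in (("24 MP", 6000), ("42 MP", 7952)):
        alt = altitude_for_gsd(0.03, 35.0, 35.9, width_px)
        print(f"{label}: ~{alt:.0f} m above ground for 3 cm/px")
```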

Maximum flight time

The maximum flight time of a fixed-wing UAV depends on its altitude above sea level. As altitude increases, the UAV needs to fly faster because of the lower air density. However, the lower air density also means less drag, so in most cases the maximum flight distance stays about the same at all altitudes, while the flight time itself gets shorter.

The indicated maximum flight times are at sea level while carrying a regular camera payload.
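
The altitude effect can be estimated with a short calculation. If the aircraft flies at the same lift coefficient, its airspeed scales with √(ρ₀/ρ); if range stays constant, as described above, flight time = range / speed scales with √(ρ/ρ₀). This Python sketch uses the standard-day ISA troposphere density approximation and a hypothetical 90-minute sea-level endurance. It ignores wind, temperature deviations, and changes in propulsion efficiency, so treat the results as rough.

```python
import math

SEA_LEVEL_DENSITY = 1.225  # kg/m^3, ISA sea-level standard


def isa_density(altitude_m: float) -> float:
    """Air density in the ISA troposphere (valid below ~11 km)."""
    return SEA_LEVEL_DENSITY * (1.0 - 2.25577e-5 * altitude_m) ** 4.2559


def flight_time_at_altitude(sea_level_minutes: float, altitude_m: float) -> float:
    """Scale a sea-level endurance figure to a higher altitude.

    Assumes the same lift coefficient (so airspeed scales with
    sqrt(rho0 / rho)) and constant range, so time scales with sqrt(rho / rho0).
    """
    ratio = isa_density(altitude_m) / SEA_LEVEL_DENSITY
    return sea_level_minutes * math.sqrt(ratio)


if __name__ == "__main__":
    # Hypothetical 90-minute sea-level endurance
    for alt in (0, 1000, 2000, 3000):
        print(f"{alt:>5} m: ~{flight_time_at_altitude(90, alt):.0f} min")
```

This is why the comparison quotes flight times at sea level: the same aircraft will report a shorter endurance at a high-altitude site even if its range barely changes.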

Read the full comparison

The full comparison contains detailed technical specifications, pricing details, sources, and methods of calculation.

The IDIPLOYER MP2.1 is an extremely lightweight, feature-packed and affordable drone docking station. It has been designed and built by idroneimages, based in Reading, England, to enable fully autonomous drone operations. Integrated with their proprietary contact-charging system and the powerful FlytNow Auto software, the IDIPLOYER MP2.1 is an obvious choice for drone service providers and businesses alike.

With their intensive and iterative efforts, the engineers at idroneimages provide groundbreaking features to the market, including:

- Lightweight enclosure: Weighing just 23 kg (50 lbs), the IDIPLOYER MP2.1 offers increased portability, which eases shipping anywhere in the world and allows easy installation on building rooftops and vehicles

- Autonomous contact-based charging: From 15% to 100% battery charge within 50 minutes without employing complex robotics or battery cell modifications, thereby reducing mechanical complexity and increasing reliability

- Precision landing: Computer-vision-aided technology for automatic drone landings with 99.99% accuracy; includes additional rollers for robust performance

- Weatherproof design: Designed to IP65 standards to withstand harsh environments; comes with thermostatic heating & Peltier cooling for extreme temperature control

The ongoing pandemic has forced businesses to rethink their approach towards deploying automated systems, as they have now become more of a necessity than a mere nice-to-have. With the IDIPLOYER MP2.1, idroneimages presents a turnkey solution to the market that is both affordable and accessible. This widens the scope for businesses to deploy fully automated drones in several areas, such as aerial monitoring, progress tracking, security, and incident response.

Place and Time

Join the FlytBase and idroneimages teams this Friday, 21 May, at 11:00 CDT, as they take you through the journey of this feature-rich drone nest from ideation to production. The event will be live-streamed for worldwide access. Register now at https://flytnow.com/flytlaunch/idiployer/ and stand a chance to win some exciting prizes!

Some local timezones for your quick reference:

- London: 17:00 BST

- San Francisco: 09:00 PDT

- New York: 12:00 EDT

- Berlin: 18:00 CEST

- Abu Dhabi: 20:00 GST

- New Delhi: 21:30 IST

How to Register

Registration is free and as simple as a few clicks! Follow this link and sign yourself up. Registrants gain exclusive access to a giveaway for a limited time!

Save your seat for the big day and you could be a part of the FlytNow Preferred Partner program, with access to our wide marketing collateral, rich global network, and strong digital presence!

Reserve your spot here: https://flytnow.com/flytlaunch/idiployer/

About idroneimages

idroneimages was founded in 2018 by a group of like-minded drone enthusiasts who wanted to demonstrate the transformational capabilities of drones in business operations. From safety to detailed inspection imagery, drones have been integrated with industries such as agriculture and wind energy. Today, idroneimages operates some of the most complex and highly functional drones in the market, adapting them to its clients' needs and specific requirements.

Introducing CopterPack - a carbon fiber electric backpack helicopter. First flight video is coming soon!

Lithium-ion batteries generally have a higher energy density than lithium polymer packs. Higher-capacity lithium-ion packs can be designed if the drone's current draw is known. We have built a web utility that finds the right cell chemistry to use from among hundreds of different cells.

Enter the drone's maximum weight and current draw to see:

- Battery cell configuration (number of cells in parallel and series)

- Approximate flight time as compared to current aircraft (without weight change)

- Battery weight
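
The cell-configuration step can be sketched roughly as follows: keep the series count of the existing pack, choose enough cells in parallel that each stays within its continuous current rating, then scale the current flight time by the capacity ratio while ignoring the weight change. This is not Rotoye's utility. The class and function names and the example cell (5000 mAh, 10 A continuous, 70 g) are hypothetical, and a real estimate would also need to account for voltage sag and usable capacity at high discharge rates.

```python
import math
from dataclasses import dataclass


@dataclass
class Cell:
    """Datasheet values for one Li-ion cell (hypothetical example values)."""
    capacity_mah: float
    max_continuous_a: float
    mass_g: float


@dataclass
class PackEstimate:
    series: int
    parallel: int
    capacity_mah: float
    mass_g: float
    flight_time_min: float


def size_pack(cell: Cell, series: int, max_current_a: float,
              current_capacity_mah: float, current_flight_min: float) -> PackEstimate:
    """Pick the parallel count from the drone's peak current, then estimate
    flight time by scaling the existing flight time with capacity
    (same series count, aircraft weight change ignored)."""
    # Enough cells in parallel that each stays within its continuous rating
    parallel = math.ceil(max_current_a / cell.max_continuous_a)
    capacity = parallel * cell.capacity_mah
    mass = series * parallel * cell.mass_g
    flight_time = current_flight_min * capacity / current_capacity_mah
    return PackEstimate(series, parallel, capacity, mass, flight_time)


if __name__ == "__main__":
    cell = Cell(capacity_mah=5000, max_continuous_a=10, mass_g=70)
    print(size_pack(cell, series=6, max_current_a=45,
                    current_capacity_mah=10000, current_flight_min=20))
```

Because Li-ion cells typically have lower discharge ratings than LiPo, the parallel count is often set by the current draw rather than by the capacity you want.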

Reach out to us at shout@rotoye.com to get free access to this utility.

The high cost of good RC truck kits, the diminishing need for such kits & the noise of gearboxes made me look elsewhere for a robot platform. 3D printing a truck from scratch, with only a few metal parts still being off the shelf, was the next step. The only parts which have to be outsourced are the motors, steering servo, & steering knuckles. Everything else is 3D printed or homemade electronicals. The size was based on the original Tamiya lunchbox.

The remote control is a single-paw, 3-channel unit with a 100 mW radio, ballpoint pen springs, and Hall effect sensors.

The remote control is charged inductively.

The motors are direct-drive Propdrive 4248s of any KV, rewound with 20 turns of 26 AWG wire. They don't produce enough torque to go up hills. The motor sensor is a dual Hall effect sensor resolver.
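
A dual Hall sensor resolver can work like a two-phase resolver: if the two sensors sit 90 electrical degrees apart, their readings behave like the cosine and sine of the rotor angle, and atan2 recovers that angle. The post doesn't describe the sensor placement or signal ranges, so the quadrature layout, the single shared zero-field offset, and the function name below are assumptions. Gain mismatch between the two sensors is ignored.

```python
import math


def rotor_angle(hall_a: float, hall_b: float, offset: float = 0.0) -> float:
    """Estimate the electrical rotor angle (radians, -pi..pi) from two analog Hall sensors.

    Assumes sensor A reads ~cos(theta) and sensor B ~sin(theta), i.e. the
    sensors are mounted 90 electrical degrees apart, and that `offset` is the
    zero-field reading shared by both sensors.
    """
    return math.atan2(hall_b - offset, hall_a - offset)


if __name__ == "__main__":
    # Hypothetical ADC-style readings centered on 2.5
    print(math.degrees(rotor_angle(3.2, 3.2, offset=2.5)))
```

Using atan2 rather than a single sensor makes the angle estimate insensitive to the overall magnet strength, since only the ratio of the two readings matters.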

The main problem is cooling the motors while getting more torque. Metal motor mounts of the right shape continue to be cost-prohibitive, & PLA doesn't dissipate heat.

Electronicals automate just enough to drive it with 1 paw, but not so much that it's never finished.

Traction & steering are 2 separate modules. Steering uses a brushless servo with stock lunchbox servo saver.

Motors are powered by L6234s mounted dead-bug style to get the heat out.

Tires are printed out of TPU in varying shapes to adjust hardness & traction.

Rear tires have a flat wide shape for more forwards traction. Front tires have a round shape for more sideways traction.

The battery is completely enclosed.

Great post from NASA explaining how the Mars helicopter autopilot works:

----

Before each of Ingenuity’s test flights, we upload instructions that describe precisely what the flight should look like. But when it comes time to fly, the helicopter is on its own and relies on a set of flight control algorithms that we developed here on Earth before Ingenuity was even launched to Mars.

To develop those algorithms, we performed detailed modeling and computer simulation in order to understand how a helicopter would behave in a Martian environment. We followed that up with testing in a massive 25-meter-tall, 7.5-meter-diameter vacuum chamber here at JPL where we replicate the Martian atmosphere. But in all of that work, we could only approximate certain aspects of the environment. Now that Ingenuity is actually flying at Mars, we can begin to assess how things stack up against expectations. Here are some key aspects of the flight control system’s performance on Mars.

Takeoff

Unlike many consumer drones, Ingenuity is not controlled by changing the rotor speeds. Instead, we control our Mars Helicopter in the same manner as full-scale terrestrial helicopters: by changing the pitch angle of the blades, which affects the airfoil “angle of attack” and thereby determines how big a “bite” the blades take out of the air. The bigger the bite, the more lift (and drag) is produced. Like a traditional helicopter, we can change the pitch angle in two ways: by using “collective control,” which changes the blade pitch uniformly over the entire rotation of the blade, and by using “cyclic control,” which pitches the blade up on one side of the vehicle and down on the other.

When Ingenuity takes off, the rotor is already spinning at the setpoint speed of 2,537 rpm. We take off with a sudden increase in collective control on both rotors, which causes the vehicle to “boost” off the ground. During this initial takeoff phase, we limit the control system to respond only to angular rates (how quickly the helicopter rotates or tilts). The reason for this is that we don’t want the control system to be fighting against the ground, possibly resulting in undefined behavior.

The initial takeoff phase lasts for only a split second; once the helicopter has climbed a mere 5 centimeters, the system asserts full control over the helicopter’s position, velocity, and attitude. At this point we’re accelerating toward a vertical climb rate of 1 meter per second.

To estimate our movements during flight, we use a set of sensors that include a laser rangefinder (for measuring altitude) and a camera. We don’t use those sensors until we reach 1 meter altitude out of concern that they might be obscured by dust near the ground. Instead, we initially rely only on an inertial measurement unit (IMU) that measures accelerations and angular rates, and we integrate those measurements to estimate our movements. This is a type of “dead reckoning” navigation – comparable to measuring how far you’ve walked by counting your steps. It’s not very accurate in the long run, but because Ingenuity takes only a couple of seconds to reach 1 meter, we can make it work.
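
Dead reckoning from an IMU amounts to integrating accelerometer samples twice, once into velocity and again into position. The 1-D Python sketch below is not JPL's estimator: it ignores attitude, gravity compensation, and the rangefinder and camera fusion. The 0.01 m/s² bias and 1 kHz sample rate are hypothetical values chosen only to show how an uncorrected error grows. The error grows linearly in velocity and quadratically in position, which is why the approach is tolerable for the couple of seconds it takes to reach 1 meter but not for a whole flight.

```python
def dead_reckon(accels_mps2, dt_s, v0=0.0, p0=0.0):
    """Integrate 1-D acceleration samples into velocity and position.

    Semi-implicit Euler: update velocity first, then position with the new
    velocity. Any constant error (bias) in the accelerations grows linearly
    in velocity and quadratically in position.
    """
    v, p = v0, p0
    for a in accels_mps2:
        v += a * dt_s
        p += v * dt_s
    return v, p


if __name__ == "__main__":
    # Hypothetical 0.01 m/s^2 accelerometer bias sampled at 1 kHz
    for seconds in (2, 20):
        n = seconds * 1000
        _, err = dead_reckon([0.01] * n, 0.001)
        print(f"{seconds:>3} s of bias -> ~{err:.3f} m position error")
```

Ten times the integration time gives roughly a hundred times the position error, which illustrates the "counting your steps" comparison above.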

Ingenuity’s rotor power during Flight Two. Credits: NASA/JPL-Caltech.

One of the things we were curious about is how “confidently” Ingenuity would boost off the ground and reach that first threshold of 5 cm. Data from the first three flights shows that portion of the climb took about 0.25 seconds, which is very much in line with expectations and indicates that Ingenuity had no issue producing enough thrust on takeoff. During this initial boost, we expected to see a spike in the power required by the rotor system, and that is indeed what we observed. For example, the spike in Flight Two was about 310 watts (W) – well below the maximum capacity of our batteries, which can tolerate spikes as high as 510 W.

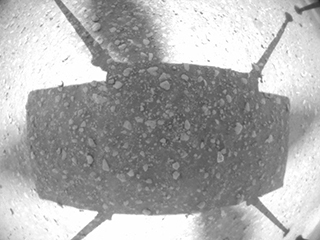

Ingenuity Flight Two: A picture from the navigation camera aboard Ingenuity captured the helicopter on takeoff during Flight Two, showing little sign of dust. Credits: NASA/JPL-Caltech.

After takeoff, Ingenuity took about 2 seconds to reach the 1-meter altitude where it could start using its full suite of sensors. That being said, while we did see some faint dust in the images taken by the Perseverance rover (parked nearby) on takeoff, there was no indication flying dust or sand obscured the altimeter or camera, so our design appears to have erred on the cautious side in this regard (which is a good thing).

The moment the helicopter’s legs leave the ground, its motion starts to become affected by wind. These winds can cause the vehicle to momentarily roll (side to side) or pitch (forward or backward) on takeoff, until it has time to catch and correct itself. We were prepared for some significant roll/pitch angles on takeoff if winds were high at the ground level, but in Ingenuity’s three takeoffs so far, they have been limited to a couple of degrees only, making for nice, vertical takeoffs.

Hover

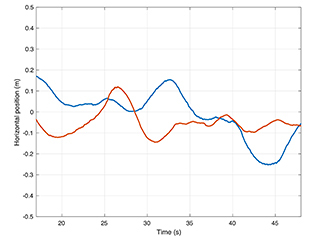

Ingenuity’s horizontal position relative to start during Flight One hover. Credits: NASA/JPL-Caltech.

During hover phases of flight, we are attempting to maintain a constant altitude, heading, and position. In evaluating how well we are managing to achieve that, we are forced, for the most part, to rely on Ingenuity’s own estimates of what it was doing, as we have limited data establishing “ground truth.” Those estimates are subject to errors in navigation that will be covered in a separate post. But the steadiness of these estimates tells us a lot about how tightly the controller is able to hold the desired values.

The data shows that we hold our altitude extremely well in hover, to within approximately 1 cm. We also hold the heading (which way we point) to within less than 1.5 degrees. For horizontal position, we’ve seen variations up to approximately 25 cm. Such variations are expected as the result of wind gusts.

So, what has the wind been like during our flights? Fortunately for us, the Perseverance rover carries the MEDA weather station. For Flight One, we have measurements from MEDA indicating winds of 4-6 meters per second from the east and southeast during most of the flight, gusting to 8 meters per second. Keep in mind that those measurements are made 1.5 meters above ground level, and the tendency is for winds to increase as you go from ground level up. We also have atmospheric density measurements at the time of Flight One, showing 0.0165 kilograms per cubic meter, or about 1.3% of Earth’s density at sea level. Using this information, we can assess the system’s performance in another important respect – namely, the control effort required to fly.

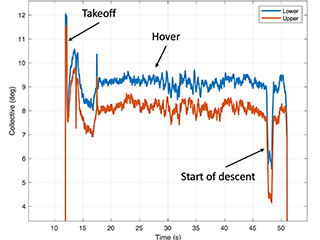

Ingenuity’s collective control during Flight One. Credits: NASA/JPL-Caltech.

For the collective control (remember, that is the one that changes rotor blade pitch angle uniformly to affect helicopter’s thrust), we would like to see hover values roughly consistent with prior expectations. During Flight One, we hovered with around 9.2 degrees collective on the lower rotor and 8.2-degree collective on the upper (that’s the angle of the blade’s “chord line” – an imaginary line drawn from the leading edge to the trailing edge of the rotor blade – at ¾ of the rotor radius). Those values are 0.7-0.8 degrees lower than the trim values we anticipated (9.0 degree on the upper rotor and 9.9 degree on the lower rotor). But those trim values were tuned based on tests without wind at a somewhat different density/rotor speed combination, so this difference is not unexpected. Another indication that we are within our aerodynamic comfort zone is the electrical rotor power of around 210 W in hover, which is also right in the vicinity of what was expected. Taken together, the results indicate that we have good margin against “aerodynamic stall,” which is when the blade airfoil’s angle relative to the surrounding airflow is increased beyond the point where it can produce further increases in lift.

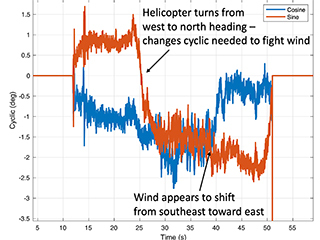

Ingenuity’s lower cyclic control on Flight One. Similar cyclic controls applied on the upper rotor. Credits: NASA/JPL-Caltech.

We also evaluate the cyclic control, which is used to create roll and pitch moments on the vehicle. We have seen relatively steady values in hover, generally of magnitude less than 3 degrees, which leaves ample margin against the upper limit of 10 degrees. The cyclic control inputs tell us a fair amount about the wind that the vehicle has to fight against. For example, for Flight One the cyclic control is consistent with winds from the east and southeast, which is in alignment with MEDA observations. The cyclic control effort also increases with altitude, which indicates that winds are getting higher further from the ground.

Landing

Landing is a particularly challenging part of any flight. Ingenuity lands by flying directly toward the ground and detecting when touchdown happens, but a number of events occur in rapid succession leading to touchdown. First, a steady descent rate of 1 meter per second is established. Then, once the vehicle estimates that the legs are within 1 meter of the ground, the algorithms stop using the navigation camera and altimeter for estimation, relying on the IMU in the same way as on takeoff. As with takeoff, this avoids dust obscuration, but it also serves another purpose -- by relying only on the IMU, we expect to have a very smooth and continuous estimate of our vertical velocity, which is important in order to avoid detecting touchdown prematurely.

About half a second after the switch to IMU-only, when the legs are estimated to be within 0.5 meters of the ground, the touchdown detection is armed. Ingenuity will now consider touchdown to have occurred as soon as the descent velocity drops by 25 centimeters per second or more. Once Ingenuity meets the ground, that drop in descent velocity happens rapidly. At that point, the flight control system stops trying to control the motion of the helicopter and commands the collective control to the lowest possible blade pitch in order to produce close to zero thrust. The system then waits 3 seconds to ensure the helicopter has settled on the ground before spinning down the rotors.
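
The touchdown logic can be sketched as a small state machine: wait until the estimated leg height is within 0.5 m, then declare touchdown once the descent speed has dropped by at least 25 cm/s. The post doesn't say what the drop is measured against, so this Python sketch assumes the reference is the descent speed latched at the moment detection is armed. The function name and sample format are hypothetical, and the follow-up steps (minimum collective, 3 s wait, spin-down) are left out.

```python
from typing import Iterable, Optional, Tuple

Sample = Tuple[float, float, float]  # (time_s, height_m, descent_speed_mps)


def detect_touchdown(samples: Iterable[Sample],
                     arm_height_m: float = 0.5,
                     drop_threshold_mps: float = 0.25) -> Optional[float]:
    """Return the time at which touchdown is declared, or None.

    Detection is armed once the estimated leg height is at or below
    arm_height_m. After that, touchdown is declared when the descent speed
    has dropped by drop_threshold_mps or more below the reference speed,
    taken here (an assumption) as the descent speed at the moment of arming.
    """
    reference: Optional[float] = None
    for time_s, height_m, descent_mps in samples:
        if reference is None:
            if height_m <= arm_height_m:
                reference = descent_mps  # arm detection and latch the reference
            continue
        if reference - descent_mps >= drop_threshold_mps:
            return time_s
    return None
```

Arming only near the ground means that a slowdown earlier in the descent, for example from a gust, cannot trigger a premature touchdown.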

People have asked why we contact the ground at the relatively high speed of 1 meter per second. There are multiple reasons for this. First, it reduces the dead-reckoning time that we need to spend without using the camera and altimeter; second, it reduces the time spent in “ground effect,” where the vehicle dynamics are less well-characterized; and third, it makes it easier to detect that we’ve touched down (because the velocity change is clearly sufficient for detection). What makes this strategy possible is the landing gear design which helps prevent the vehicle from bouncing on landing.

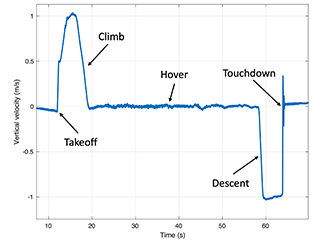

Ingenuity’s estimate of vertical velocity during Flight Two. Credits: NASA/JPL-Caltech.

Any touchdown detection algorithm of this kind has to strike a balance between two potential pitfalls: (1) detecting touchdown too early (thereby dropping to the ground from the air) and (2) not detecting touchdown soon enough (which would cause the helicopter to keep trying to fly after coming in contact with the ground). Data from Ingenuity’s flights on Mars show that we were not in danger of either of these scenarios. During descent, Ingenuity has maintained its vertical velocity to within approximately 4 cm per second, and it has detected the necessary 25 cm per second drop within approximately 30 milliseconds of touchdown.

As we continue with our flights on Mars, we will keep digging deeper into the data to understand the various subtleties that may exist and would be useful in the design of future aerial explorers. But what we can already say is: Ingenuity has met or exceeded our flight performance expectations.

From Applied Aeronautics:

Albatross UAV made its first appearance in 2013 on DIY Drones. The Albatross UAV Project, as it was titled, set out to design a composite UAV that met a long list of precise and ambitious performance metrics. Those were: > 6 kg MTOW, plenty of room for sensors and batteries, up to 4 hours of flight time, wide flight envelope, and last but not least, efficiency. Over the span of 13 months, the Albatross began to take shape through meticulous design and testing. To date, our company has Albatross systems flying in over 50 countries and on every continent. Still, it’s important to us to always remember our roots as a company spurred by the passion to create something truly incredible from the ground up.

AIRLink stands for Artificial Intelligence & Remote Link. The unit includes cutting-edge drone autopilot, AI mission computer and LTE connectivity unit. Start your enterprise drone operations with AIRLink and reduce the time to market from years and months down to a few weeks.

More info: https://sky-drones.com/airlink

Sky-Drones Technologies hit their 10-year anniversary this year, so to celebrate the occasion the company decided to prolong the launch of their latest addition to the product range. This has been an entire year in the making; excitement has built up and expectations are high, but the team has absolutely not disappointed!

As drones become more and more hardware-defined and software-oriented, Sky-Drones Technologies must be at the forefront of innovation. With that in mind, the company presents to UAV industry specialists and enthusiasts alike: AIRLink, the artificial intelligence and remote link unit installed in your enterprise UAV to provide:

- Cutting edge autopilot: manual and fully autonomous flight capabilities for multirotors, fixed wings and VTOLs

- AI Mission Computer: onboard data processing and computer vision

- LTE Connectivity: BVLOS flights, Cloud connectivity and remote workflows

What these exceptional elements mean for the UAS industry is the start of a whole new era in UAV flight control, data analytics, and safety. Never has anyone created a product that can withstand such extreme ambient temperatures and high computational power without an overheating issue, a product that has entirely integrated hardware and software for flight and payload data analytics, a product that can be so many products in one or one product with so many cutting-edge capabilities... Until now.

To give an overview, AIRLink comes with an FPV HDR 1080p camera that provides users with a video stream of exceptional image quality. The unit is constantly connected to the internet, has been designed with critical peripherals as an essential element for user convenience, and has built-in LTE and Wi-Fi.

The goal with AIRLink is to integrate this all-in-one unit into UAV designs during the manufacturing stage, so that efforts and resources can focus on the drone itself rather than on avionics and connectivity. With that in mind, Sky-Drones offers a variety of options for getting the most out of AIRLink:

- AIRLink OEM – purchase AIRLink as Sky-Drones designed it. Includes all the features and the aviation-grade aluminium casing with heatsink. The process simply involves installing the unit on your UAV.

- AIRLink Enterprise – Sky-Drones will provide the internal electronics of the AIRLink units, excluding the casing to reduce the size of the units and integrate them directly into your UAV during the manufacturing process.

- Reference Design – build AIRLink yourself in-house! Sky-Drones will provide all the relevant instructions, manufacturing material, and will be on hand for engineering support whilst you set up your operation for manufacturing your own AIRLink units.

From Hackaday:

Sometimes bad software is all that is holding good hardware back. [Michael Melchior] wanted to scavenge some motors and propellers for another project, so he bought an inexpensive quadcopter intending to use it for parts. [Michael] was so surprised at the quality of the hardware contained in his $100 drone that he decided to reverse engineer his quadcopter and give the autopilot firmware a serious upgrade.

Upon stripping the drone down, [Michael] found that it came with a flight management unit based on the STM32F405RG, an Inertial Measurement Unit, magnetic compass, barometric pressure sensor, GPS, WiFi radio, camera with tilt, optical flow sensor, and ultrasonic distance sensor, plus batteries and charger! The flight management unit also had unpopulated headers for SWD, and—although the manufacturer’s firmware was protected from reading—write protection hadn’t been enabled, so [Michael] was free to flash his own firmware.

We highly recommend you take a look at [Michael]’s 10 part tour de force of reverse engineering which includes a man-in-the-middle attack with a Raspberry Pi to work out its WiFi communication, porting the open-source autopilot PX4 to the new airframe, and deciphering unknown serial protocols. There are even amusing shenanigans like putting batteries in the oven and freezer to help figure out which registers are used as temperature sensors. He achieves liftoff at the end, and we can’t wait to see what else he’s able to make it do in the future.

Of course, [Michael] is no stranger to hacking imported quadcopters, and if you’re interested in PX4 but want something quieter than a quadcopter, take a look at this autopilot-equipped glider.

From Robohub:

For my PhD, I’m studying how global problems such as wildfires and aid delivery in remote areas can benefit from innovative technologies such as UAV (unmanned aerial vehicle) swarms.

Every year, vast areas of forests are destroyed due to wildfires. Wildfires occur more frequently as climate change induces extreme weather conditions. As a result, wildfires are often larger and more intense. Over the past 5 years, countries around the globe witnessed unprecedented effects of wildfires. Greece has seen the deadliest wildfire incident in its modern history, Australia witnessed 18,636,079 hectares being burnt and British Columbia faced wildfire incidents that burnt 1,351,314 hectares.

Rather than jump straight into the design of swarm algorithms for UAVs in these scenarios, I spent a year speaking with firefighters around the world to ask about their current operations and how UAVs could help. This technique is called mutual shaping, where end users cooperate with developers to co-create a system. It’s been interesting to see the challenges they face, their openness to drones and their ideas on the operation of a swarm. Firefighters face numerous challenges in their operations: their work is dangerous, and they need to ensure the safety of citizens and themselves. Unfortunately, they often don’t have enough information about the environment they are deploying in. The good news is that UAVs are already used by firefighters to retrieve information during their operations, usually during very short human-piloted flights with small off-the-shelf drones. Having larger drones, with longer autonomy and higher payload, could provide high-value information to firefighters or actively identify and extinguish newly developed fire fronts.

I think the reasons we don’t have these swarms in these applications are that 1) we didn’t have the hardware capabilities (N robots with high payload at a reasonable cost), 2) we don’t have the algorithms that allow effective swarm deployments at the scale of a country (necessary to monitor for forest fires), and 3) we can’t easily change our swarm strategies on the go based on what is happening in reality. That’s where digital twins come in. A digital twin is a real-time virtual representation of the world that we can use to iterate on solutions. The twin can be a simple 2D model or a more realistic 3D representation, with data from the real world continuously updating the model.

To develop this framework for digital twins, we’re starting a new project with Windracers Ltd, Distributed Avionics Ltd, and the University of Bristol, co-funded by Innovate UK. Windracers Ltd has developed a novel UAV, the ULTRA platform, that can transport 100 kg of payload over a 1000 km range. Distributed Avionics specialises in high-reliability flight control solutions, and the Hauert Lab engineers swarm systems across scales, from huge numbers of tiny nanoparticles for cancer treatment to robots for logistics.

In the future, we aim to use the same concepts to enable aid delivery using UAV swarms. The 2020 Global Report on Food Crises states that more than 135 million people across 53 countries require urgent food, nutrition, and livelihoods assistance. Aerial delivery of such aid could help access remote communities and avoid in-person transmission of highly infectious diseases such as COVID-19.

By the end of this project, we are hoping to demonstrate the deployment of 5 real UAVs that will be able to interact with a simple digital twin. We also want to test live deployment of the control swarm system on the UAVs.

Are you a firefighter or someone involved in aid delivery, or do you have other ideas for us? We’d love to hear from you.

Endangered Snow Leopards and their habitat are under threat, KwF to use drones to protect these elusive cats of the Himalayas

For more than a decade, Kashmir World Foundation (KwF) has been operating aircraft around the world protecting endangered cats, rhinos, sea turtles, and many other species. We integrate airframes with power and propulsion systems to carry sensors, high performance computers, and special components needed to perform our missions. Operating on battery or liquid fuel, our aircraft meet most of our mission needs.

But the mission that drove us to create KwF -- protecting snow leopards and other endangered species in the Himalayas -- eluded us because its flight-performance demands could not be met by any existing aircraft. To support this mission, the aircraft must operate at very high altitude, close to the ground below and to the sides, as it navigates through extremely rocky terrain while encountering high and highly variable winds with snow, ice, and freezing rain. Remote from any conceivable operating base, and needing to patrol tens of thousands of square kilometers, the aircraft must be able to stay on station for at least 8 hours processing data, making decisions, and taking swift action when needed, all while barely making a sound.

Background

Fifty million years ago, Eurasia and present-day India collided to create the tallest mountain range in the world -- the Himalayas. Lifted by the subduction of the Indian tectonic plate under the Eurasian Plate, the Himalayan range runs west-northwest to east-southeast in an arc 2,400 km (1,500 mi) long. Standing at 8,848.86 meters (29,031.7 ft) on the border between Nepal and the Tibet Autonomous Region of China, Mount Everest is the tallest mountain peak in the world. Glaciers and fast-flowing water have cut the mountains, creating jagged peaks and valleys through which winds blow with rapidly varying direction.

A map of mountain ranges in snow leopard habitat, with the sites of published population studies marked. Image taken from Suryawanshi et al, 2019.

Masters of disguise, snow leopards (Panthera uncia) evolved about 3.9 million years ago from Miacids, a common ancestor of tigers, to become the kings of the Himalayas. These elusive cats live high in alpine areas, where they hunt blue sheep, argali (wild sheep), ibex, marmots, pikas, deer, and other small mammals.

The global snow leopard population remains unknown, but based on their research, Suryawanshi and his colleagues fear it may be lower than prevailing guesstimates suggest.

Photo by Shan Shui / Panthera / Snow Leopard Trust

Having thrived under some of the harshest conditions on Earth, snow leopard populations are now in rapid decline and will soon disappear unless action is taken to protect them. Although snow leopards have been listed as endangered since 1972 and are legally protected, humans hunt and poison them for trophies, fur, and body parts; hunt their prey; and destroy their habitat through overgrazing by domestic animals. Researchers aren’t sure how many snow leopards are left in the world. In 2016, the IUCN estimated that there were between 2,710 and 3,386 snow leopards in the high mountains of Central and South Asia.

Problem

Protecting snow leopards and other endangered species in the Himalayas requires surveillance over broad areas. To meet these demanding needs, Kashmir-Robotics, the Science and Technology division of KwF, proposed using aerial robotics enabled with artificial intelligence to provide self-awareness, situational awareness, and the ability to act quickly and decisively.

In collaboration with the Technology Assisted Counter Poaching (TACP) network, Dr. Ronald Pandolfi, Chief Technology Officer for KwF, and Dr. Muhammad Ali Nawaz, Executive Director of the Snow Leopard Foundation in Pakistan, derived a set of operational requirements for an unmanned aerial system (UAS) to perform the mission. Dr. Ronald Pandolfi concluded, “the aircraft must be able to launch from low altitude, climb to over 5,000 meters while traversing to snow leopard habitat, then stay on station for at least 8 hours while navigating between rocky slopes and deep valleys, all while exposed to high and variable winds, snow, and ice.” Hence, Eagle Ray was born.

Baseline

With no current aircraft able to meet these requirements, Princess Aliyah Pandolfi, Executive Director of KwF, turned to Mark Page, Chief Scientist and Vice President of DZYNE Technologies, a world-leading designer of high-performance unmanned aerial systems and creator of the modern blended wing body (BWB) aircraft. She asked him to design a planform and airfoil stack for an aircraft with a gross takeoff mass under 25 kg (to comply with Federal Aviation Administration regulations) that is efficient and agile while able to operate in high and variable winds under some of the most extreme weather conditions.

Rooted in a long history of successful BWB designs, Page provided a Baseline adjusted for the flight characteristics required for the KwF missions. Page says, “Blended Wings like Eagle Ray are well suited to flight at extreme altitudes. First, high-altitude flight is much easier with an electric airplane since the powerplant doesn’t rely on oxygen from the atmosphere to generate power. At high altitudes the oxygen density is about half that available at Sea Level. A gas-powered plane would lose half its horsepower, while an electric plane loses none. Second, to maintain efficient flight in the thinner air the true flight speed must increase about 40%. The power required to fly is the product of flight speed and aerodynamic drag. Eagle Ray will need to fly faster like any other plane, so the only question is this: ‘which plane makes the least drag?’ Efficiency is the gift that keeps on giving, and the BWB produces about 30% less drag to bear the airplane’s weight.”
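Page’s “about 40%” follows from the lift equation L = ½ρV²SC_L. The sketch below is a minimal illustration, assuming level flight at fixed weight, wing area and lift coefficient (so the lift-to-drag ratio, and hence drag, is unchanged) and using the International Standard Atmosphere troposphere formula for the density ratio. The function names are illustrative, and the 0.7 drag factor simply encodes the quoted “30% less drag”; it is not a Eagle Ray performance model.

```python
import math

def isa_density_ratio(altitude_m: float) -> float:
    """Density ratio sigma = rho / rho0 in the ISA troposphere (valid below 11 km)."""
    return (1.0 - 2.25577e-5 * altitude_m) ** 4.2559

def speed_ratio(sigma: float) -> float:
    """True-airspeed ratio V / V0 needed to keep the same lift coefficient.

    With weight, wing area and CL fixed, L = 0.5 * rho * V**2 * S * CL
    forces V to scale as 1 / sqrt(rho)."""
    return 1.0 / math.sqrt(sigma)

def power_ratio(sigma: float, drag_factor: float = 1.0) -> float:
    """Power ratio P / P0 with P = D * V.

    At fixed CL the lift-to-drag ratio is unchanged, so drag stays the same
    and power grows with speed. drag_factor scales the drag (e.g. 0.7 for a
    design with 30% less drag)."""
    return drag_factor * speed_ratio(sigma)

if __name__ == "__main__":
    sigma = 0.5  # "about half" the sea-level density, as in the quote
    print(f"speed increase: {100 * (speed_ratio(sigma) - 1):.0f}%")
    print(f"power, same drag:     {power_ratio(sigma):.2f}x")
    print(f"power, 30% less drag: {power_ratio(sigma, 0.7):.2f}x")
    print(f"ISA density ratio at 5,000 m: {isa_density_ratio(5000):.2f}")
```

At half density the speed (and, at constant drag, the power) rises by √2, about 41%; under these assumptions a design with 30% less drag flying at that altitude needs roughly the same power (0.99x) as a conventional design of equal weight at sea level.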

Nepal Collaboration

“At KwF, we look to the world for good ideas and great engineering,” Princess Aliyah says, “Our mission is to save endangered species from extinction, so we have reached out in the broadest sense for innovation and invention to meet our demanding mission capabilities.”

Dr. Ronald Pandolfi, Princess Aliyah, and Kapil Regmi share their signed copies of an MoU between KwF & acem that will inspire students in Nepal to collaborate internationally to help protect the environment and endangered species through the use of innovative drones and artificial intelligence.

KwF’s partnership with the Advanced College of Engineering & Management (acem) in Nepal will give Eagle Ray a platform for testing at 18,000 feet and give students the opportunity to participate in an international design challenge. Kapil Dev Regmi, the Executive Director of acem, is excited about the collaboration because “this state-of-the-art Eagle Ray Project is going to inspire students at so many different levels. It will motivate students to keep dreaming and prove that the true dreamers are the true achievers. Eagle Ray project is not just another project being carried out solely by an international organization but the project which collaborated with a local partner (like acem) to involve students right from the project development phase to ensure the end to end project overview. While most drone projects are being done just at local level (mostly on medical deliveries to rural areas), Eagle Ray project will open new dimensions of exploration like using tech to understand biodiversity and help to conserve them. This will showcase the idea of *innovation* to students. It is a project which is way ahead of time for Nepali students that will excite them to innovate for local problems.”

Global Challenge

Just a few years ago, only large aerospace corporations could design advanced high-performance aircraft. Now, with global communication resources like DIY DRONES and open-source tools like OpenVSP, individuals and small teams from around the world can contribute designs and breakthrough technologies.

KwF now challenges students, academics, and professionals around the world to improve on the Mark Page BWB Eagle Ray design, and in so doing help KwF create an aircraft that is better suited to the mission of protecting endangered species throughout the Himalayas. More specifically, the challenge is to improve on the planform and airfoil stack, increasing endurance while maintaining stability and maneuverability. All challenge participants receive access to the current and final designs, and those who succeed in improving Eagle Ray will have their names appear on the official list of contributors and their logos emblazoned on every Eagle Ray aircraft.

Challenge teams can attempt to improve the overall planform and airfoil stack, or they can concentrate on special features including elevons, trim tabs, tip rudders, or other control surfaces. Each proposed change must include a statement of why the change is suggested and how it is expected to improve performance and enable greater mission capability, along with supporting calculations, simulations, and/or charts.

The Eagle Ray Design Challenge Opens April 15, 2021! Join the challenge to help protect endangered snow leopards. Details of the challenge criteria and rules of engagement will be available at www.kashmirworldfoundation.org or contact: info@kashmirworldfoundation.org

Introduction

It can be difficult to get useful data from a magnetometer. It can be especially difficult if the data is used to estimate the yaw attitude of the vehicle. For example, the sensor may indicate the proper direction for certain attitudes, but a wrong direction for other attitudes. Or, the yaw estimate may be accurate one day and off the next.

In order for magnetometer data to yield the yaw attitude of a vehicle, the magnetometer must measure the direction of the geomagnetic field (i.e. the Earth's magnetic field). The geomagnetic field points north (more or less) and has been used for navigation for hundreds of years. The challenge is that the geomagnetic field is relatively weak. It is common for the geomagnetic field to be distorted or obscured by extraneous magnetic sources in the vicinity of the magnetometer. The purpose of magnetometer calibration is to extract an observation of the geomagnetic field vector from the sensor’s output, which is corrupted by various errors.
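To make the link between field direction and yaw concrete, here is a minimal sketch assuming a level vehicle, an already-calibrated reading, and a body frame with x forward, y right and z down. The function names are illustrative, and the declination input is an assumption added for completeness; without it the result is magnetic rather than true heading.

```python
import math

def magnetic_heading_deg(mx: float, my: float) -> float:
    """Magnetic heading in [0, 360) from calibrated body-frame field components.

    Assumes the vehicle is level and the body frame is x forward, y right,
    z down. At heading psi the sensor sees a horizontal field of
    (H*cos(psi), -H*sin(psi)), hence psi = atan2(-my, mx).
    """
    return math.degrees(math.atan2(-my, mx)) % 360.0

def true_heading_deg(mx: float, my: float, declination_deg: float) -> float:
    """True heading: magnetic heading plus local declination (east positive)."""
    return (magnetic_heading_deg(mx, my) + declination_deg) % 360.0

if __name__ == "__main__":
    H = 0.2  # hypothetical horizontal field strength, Gauss
    psi = math.radians(30.0)
    print(magnetic_heading_deg(H * math.cos(psi), -H * math.sin(psi)))
```

Only the horizontal components enter the formula, which is why any extraneous field that rotates or offsets them shows up directly as a yaw error.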

Over the last several years, I have tinkered with the AK8963 magnetometer, which is part of the MPU-9250 IMU. Through trial-and-error, I eventually arrived at a dependable calibration procedure. The calibration procedure is repeatable and produces sufficiently accurate estimates of the yaw attitude. It is typical for the yaw attitude to have less than 2 degrees of error, provided the aircraft is in the wings-level configuration. Because of this work, the magnetometer now serves as the primary yaw attitude sensor within our autopilot system.

In this article, I want to share four lessons I learned while integrating the magnetometer into an autopilot system. These lessons are probably familiar to those who work with magnetometers. However, for those who are new to magnetometers, this article may alert you to some common pitfalls. The four lessons are:

- Artificial errors impose special considerations on magnetometer calibration.

- Autocalibration methods have a fundamental flaw.

- Keep it simple with the compass swinging method.

- The throttle command can be related to a magnetometer bias.

In the remainder of this article, I will expand upon each of the previous points. For a more mathematical treatment of the proposed calibration procedure, the reader is referred to the attached PDF.

Artificial errors impose special considerations on magnetometer calibration

Magnetometers are subject to two types of errors: instrument errors and artificial errors. Instrument errors are associated with the sensor itself; they are less noticeable in high-grade sensors than in low-grade sensors. Artificial errors are caused by extraneous magnetic sources that compete with the geomagnetic field, so they are associated with the environment. For this reason, you should calibrate the magnetometer in an environment that is similar to the sensor's operative environment. Practically, this means calibrating the sensor in its final configuration on the vehicle. This also means calibrating the sensor outdoors, away from buildings and power lines, which may distort the geomagnetic field. While it may be more convenient to calibrate an isolated magnetometer on a workbench, the calibration would likely become obsolete as soon as the magnetometer is mounted on the vehicle.

It is worth emphasizing that artificial errors are not associated with the sensor itself. Consequently, you cannot fix artificial errors by getting a better sensor. You fix artificial errors by (i) shielding/isolating the extraneous sources or (ii) removing the effects of the artificial errors from the data. In this work, we take the latter approach.
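Removing artificial errors from the data usually means applying an inverse error model to each raw reading. A minimal sketch, assuming the common hard-iron/soft-iron form h = W(m − b), where b is a constant offset from magnetized parts and W a 3×3 correction matrix; the exact parameterization in the attached PDF may differ, and the distortion values below are hypothetical.

```python
def apply_calibration(raw, W, b):
    """Return W @ (raw - b) for 3-vectors raw, b and a 3x3 matrix W (nested lists).

    b : hard-iron offset (constant field from magnetized parts on the vehicle)
    W : soft-iron / scale-factor correction matrix
    """
    d = [raw[i] - b[i] for i in range(3)]
    return [sum(W[r][c] * d[c] for c in range(3)) for r in range(3)]

if __name__ == "__main__":
    # Hypothetical distortion: per-axis gains of 1.1, 0.9, 1.0 plus an offset.
    true_field = [0.20, 0.00, 0.45]
    gains = [1.1, 0.9, 1.0]
    b = [0.05, -0.02, 0.10]
    raw = [g * h + o for g, h, o in zip(gains, true_field, b)]
    W = [[1 / 1.1, 0.0, 0.0], [0.0, 1 / 0.9, 0.0], [0.0, 0.0, 1.0]]
    print(apply_calibration(raw, W, b))
```

Calibration then reduces to estimating b and W; the methods discussed below differ in what data they use to do so.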

That said, the choice of magnetometer is still important. The full-scale range (FSR) of the sensor is particularly important. You don't want a sensor with an FSR that is orders of magnitude greater than the magnitude of the quantity of interest. This is because range and resolution are competing factors: what you gain in range you lose in resolution. For our application (vehicle state estimation), the quantity of interest is the geomagnetic field, the magnitude of which is about 0.5 Gauss. The AK8963 is a poor choice for our application because its FSR is 50 Gauss, which is 100x greater than the quantity of interest!
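The range/resolution trade-off is simple arithmetic. A minimal sketch, assuming an ideal 16-bit converter whose codes span the full ±FSR (reading the 50 Gauss figure as ±50 G); the ±2 G sensor is a hypothetical comparison, not a specific part.

```python
def lsb_size(full_scale: float, bits: int) -> float:
    """Field represented by one count for an ideal converter spanning +/-full_scale."""
    return 2.0 * full_scale / 2 ** bits

def counts_spanned(field: float, full_scale: float, bits: int) -> float:
    """Number of counts occupied by a field of the given magnitude."""
    return field / lsb_size(full_scale, bits)

if __name__ == "__main__":
    for fsr in (50.0, 2.0):  # Gauss; the 2 G sensor is hypothetical
        lsb_mg = 1000 * lsb_size(fsr, 16)
        print(f"+/-{fsr:g} G: {lsb_mg:.3f} mG per count, "
              f"0.5 G spans {counts_spanned(0.5, fsr, 16):.0f} counts")
```

Under these assumptions the whole geomagnetic field occupies only about 328 counts at ±50 G, versus 8192 counts at ±2 G with the same converter.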

Autocalibration methods have a fundamental flaw

An autocalibration method determines the calibration parameters solely from magnetometer measurements. This feature makes it easy to collect the calibration data. Undoubtedly, the ease of data collection contributes to the popularity of a particular autocalibration method, the ellipsoid-fitting method. However, it can be shown that autocalibration methods are unable to correct for misalignment between the magnetometer and other inertial sensors [1]. Furthermore, it can be shown that misalignment is detrimental to attitude estimation [2]. In order to correct for magnetometer misalignment, additional sensors must be used. The theoretical flaw with autocalibration methods has real-world ramifications. In my experience, I have yet to see the ellipsoid-fitting method produce satisfactory estimates of the yaw attitude.
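The core of the argument can be checked numerically. This is a minimal sketch, not the proof in [1]: ellipsoid fitting only enforces that calibrated measurements have constant magnitude, and a fixed mounting rotation preserves magnitude while shifting the heading. The field values H and Z and the 3-degree misalignment are hypothetical, and the level x-forward / y-right / z-down convention is assumed.

```python
import math

def rot_z(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matvec(M, v):
    return [sum(M[r][c] * v[c] for c in range(3)) for r in range(3)]

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def heading_deg(v):
    # Level vehicle, body x forward, y right, z down (assumed convention).
    return math.degrees(math.atan2(-v[1], v[0])) % 360.0

def misalignment_demo(misalign_deg=3.0, H=0.2, Z=0.45):
    """Compare the ideal body-frame field with the same field after a fixed
    mounting rotation, for a level vehicle at eight headings.

    Returns (largest change in magnitude, list of heading errors in degrees)."""
    M = rot_z(misalign_deg)
    norm_changes, heading_errors = [], []
    for psi in range(0, 360, 45):
        ideal = [H * math.cos(math.radians(psi)), -H * math.sin(math.radians(psi)), Z]
        seen = matvec(M, ideal)
        norm_changes.append(abs(norm(seen) - norm(ideal)))
        heading_errors.append((heading_deg(seen) - psi + 180.0) % 360.0 - 180.0)
    return max(norm_changes), heading_errors

if __name__ == "__main__":
    dn, errs = misalignment_demo()
    print(f"largest magnitude change: {dn:.1e} G")
    print("heading errors (deg):", [round(e, 3) for e in errs])
```

The magnitudes are unchanged to machine precision while every heading is off by the full 3 degrees, so a fit that only looks at magnitudes has nothing to correct.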

Keep it simple with the compass swinging method

Alternatives to the ellipsoid-fitting method include the dot-product invariance (DPI) method [3] and the compass swinging method. These alternative methods correct for misalignment by assimilating data from an additional sensor. The DPI method assimilates accelerometer data. The swinging method assimilates data from an "imaginary" magnetometer. We obtain data from the "imaginary" magnetometer by deducing the components of the geomagnetic field based on the orientation of the vehicle with respect to a compass rose. The mathematical details for all three calibration methods are included in the attached PDF.
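The DPI method rests on an invariant: the angle between gravity and the geomagnetic field does not depend on the vehicle’s attitude, so the dot product of the gravity direction and the calibrated field should be the same in every orientation. The sketch below illustrates that invariant only, not the full DPI estimator in [3]. The field values, attitudes and 5-degree misalignment are hypothetical, rotations use a yaw-pitch-roll (z-y-x) convention, and an accelerometer at rest actually senses the opposite of gravity, which only flips the sign of the constant.

```python
import math

def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def matvec(M, v):
    return [sum(M[i][k] * v[k] for k in range(3)) for i in range(3)]

def transpose(M):
    return [list(row) for row in zip(*M)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

GRAVITY_NED = [0.0, 0.0, 1.0]  # unit "down" direction in the navigation frame
FIELD_NED = [0.2, 0.0, 0.45]   # hypothetical field (north, east, down), Gauss

def dot_products(attitudes_deg, mag_error):
    """Dot product of body-frame gravity and (possibly misaligned) field per attitude."""
    out = []
    for roll, pitch, yaw in attitudes_deg:
        R = matmul(rot_z(math.radians(yaw)),
                   matmul(rot_y(math.radians(pitch)), rot_x(math.radians(roll))))
        Rt = transpose(R)  # navigation frame -> body frame
        g_b = matvec(Rt, GRAVITY_NED)
        m_b = matvec(mag_error, matvec(Rt, FIELD_NED))
        out.append(dot(g_b, m_b))
    return out

ATTITUDES = [(0, 0, 0), (0, 0, 90), (20, 0, 45), (0, -15, 180), (10, 10, 270)]

if __name__ == "__main__":
    aligned = dot_products(ATTITUDES, rot_z(0.0))
    misaligned = dot_products(ATTITUDES, rot_x(math.radians(5.0)))
    print("spread, aligned:   ", max(aligned) - min(aligned))
    print("spread, misaligned:", max(misaligned) - min(misaligned))
```

A calibration that leaves this dot product varying with attitude is inconsistent with the accelerometer, which is the extra information the DPI method exploits and ellipsoid fitting lacks.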

The calibration procedure that I recommend applies the DPI method and the compass swinging method in succession. The DPI method is used to obtain a crude 3D calibration. The swinging method is used to enhance the measurement accuracy in the wings-level position. Of course, the calibration accuracy will decrease as the vehicle departs from the wings-level position. However, it is reasonable to assume the vehicle will remain close to the wings-level position during constant-altitude operations. Furthermore, deviations from the wings-level position are bounded due to roll and pitch constraints.
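A minimal sketch of the swinging step, assuming a level vehicle, the x-forward / y-right convention, and a horizontal field strength H taken from elsewhere (e.g. a geomagnetic model; only the direction matters for heading). The "imaginary magnetometer" supplies the expected components (H cos ψ, −H sin ψ) at each compass-rose heading ψ, and a 2D affine map from the measured horizontal components to those references is fitted by ordinary least squares. The 2D affine form and all names are assumptions for illustration; the parameterization in the attached PDF may differ.

```python
import math

def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))) / M[r][r]
    return x

def fit_row(mx, my, target):
    """Least-squares fit of target ~ a*mx + b*my + c via the normal equations."""
    rows = [(x, y, 1.0) for x, y in zip(mx, my)]
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Atb = [sum(r[i] * t for r, t in zip(rows, target)) for i in range(3)]
    return solve3(AtA, Atb)

def compass_swing(headings_deg, mx, my, H):
    """Fit coefficients (a, b, c) for each output axis, mapping measured (mx, my)
    to the imaginary-magnetometer components at each compass-rose heading."""
    ex = [H * math.cos(math.radians(p)) for p in headings_deg]
    ey = [-H * math.sin(math.radians(p)) for p in headings_deg]
    return fit_row(mx, my, ex), fit_row(mx, my, ey)

def corrected(row_x, row_y, x, y):
    """Apply the fitted 2D affine correction to one horizontal reading."""
    return (row_x[0] * x + row_x[1] * y + row_x[2],
            row_y[0] * x + row_y[1] * y + row_y[2])

if __name__ == "__main__":
    # Hypothetical swing at eight compass-rose headings with a distorted sensor.
    headings = list(range(0, 360, 45))
    H = 0.2
    ex = [H * math.cos(math.radians(p)) for p in headings]
    ey = [-H * math.sin(math.radians(p)) for p in headings]
    mx = [1.05 * a + 0.08 * b + 0.01 for a, b in zip(ex, ey)]
    my = [0.02 * a + 0.95 * b - 0.015 for a, b in zip(ex, ey)]
    rx, ry = compass_swing(headings, mx, my, H)
    worst = 0.0
    for p, x, y in zip(headings, mx, my):
        cx, cy = corrected(rx, ry, x, y)
        est = math.degrees(math.atan2(-cy, cx)) % 360.0
        worst = max(worst, abs((est - p + 180.0) % 360.0 - 180.0))
    print(f"worst heading error after swing: {worst:.2e} deg")
```

The synthetic distortion here is exactly affine, so the fit removes it completely; with real swing data the residual heading errors are what remain after calibration.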

Ideally, the magnetometer would be well-calibrated after applying the DPI method. In practice, however, the estimated yaw angle can have up to 10 degrees of error. The error is linked to the off-diagonal elements of the matrix that appears in the inverse error model. The off-diagonal elements are difficult to observe using the DPI method; in practice, their estimates vary from run to run. For this reason, the swinging method is needed as an additional calibration step.
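To see why off-diagonal terms matter, consider a hypothetical residual error in which a single off-diagonal term e couples the y component into x. The sketch below (level vehicle, unit horizontal field, x-forward / y-right convention assumed) sweeps the heading and reports the worst error. In this model the error is zero at some headings and largest near 90 degrees, and its peak is 2·atan(e/2), i.e. about e radians for small e, so e = 0.1 already gives roughly 5.7 degrees. This heading dependence is one way a yaw estimate can look right at some attitudes and wrong at others.

```python
import math

def heading_error_deg(psi_deg: float, e: float) -> float:
    """Heading error caused by a single uncorrected off-diagonal term e.

    The true horizontal field has unit length and points along
    (cos psi, -sin psi) in a level x-forward / y-right body frame; the
    residual error maps it to (hx + e*hy, hy).
    """
    psi = math.radians(psi_deg)
    hx, hy = math.cos(psi), -math.sin(psi)
    mx, my = hx + e * hy, hy
    est = math.degrees(math.atan2(-my, mx))
    return (est - psi_deg + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)

def worst_case_error_deg(e: float, step_deg: float = 0.1) -> float:
    """Largest absolute heading error over a full turn, sampled every step_deg."""
    n = int(round(360.0 / step_deg))
    return max(abs(heading_error_deg(i * step_deg, e)) for i in range(n))

if __name__ == "__main__":
    for e in (0.0, 0.05, 0.1):
        print(f"e = {e:.2f}: worst heading error {worst_case_error_deg(e):.2f} deg")
```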

The throttle command can be related to a magnetometer bias

An electrical current will generate a magnetic field according to the Biot-Savart law (see, e.g., the HyperPhysics page on the Biot-Savart law). A large current close to the magnetometer will bias the sensor and alter the estimated yaw attitude. A common source of such a current is the current drawn by the electric powertrain of the aircraft. The current-induced bias can be canceled by subtracting an estimated bias from the magnetometer readings. The estimated bias is proportional to the current, and the proportionality constants can be estimated from system identification tests.
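A minimal sketch of the current-based correction, assuming a linear per-axis model b = k·I and system-identification data from a bench test with the vehicle held still: the reading at each current minus the reading at zero current gives the bias, and each k is the least-squares slope of a line through the origin. Function names, units (Gauss, amperes) and the sample gains are illustrative assumptions.

```python
def fit_current_gains(currents, biases):
    """Least-squares gains k (one per axis) for bias = k * current.

    currents : powertrain currents in amperes, one per test point
    biases   : (bx, by, bz) reading at that current minus the zero-current
               reading, in Gauss, with the vehicle held still
    """
    s_ii = sum(i * i for i in currents)
    return [sum(i * b[axis] for i, b in zip(currents, biases)) / s_ii
            for axis in range(3)]

def compensate(reading, gains, current):
    """Subtract the estimated current-induced bias from a magnetometer reading."""
    return [reading[axis] - gains[axis] * current for axis in range(3)]

if __name__ == "__main__":
    # Hypothetical bench data generated from gains of (0.002, -0.001, 0.004) G/A.
    currents = [0.0, 5.0, 10.0, 20.0]
    true_k = [0.002, -0.001, 0.004]
    biases = [[k * i for k in true_k] for i in currents]
    k = fit_current_gains(currents, biases)
    print(k)
    # A 20 A reading of a (0.20, 0.00, 0.45) G field, then corrected.
    print(compensate([0.24, -0.02, 0.53], k, 20.0))
```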

Of course, the previous solution requires a current sensor on the powertrain. The current sensor may be avoided by recognizing that the current is related to the throttle command. Using physical models of the UAV's powertrain (see the figure above), we can show that the current-induced bias is proportional to the square of the throttle command [4]. The figure below plots the magnetometer bias versus throttle command. The variable h3 denotes the component of the current-induced magnetometer bias along the z axis of the sensor. Overlaying the data is the quadratic model predicted by the powertrain analysis.
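The quadratic model can be fitted in the same way. A minimal sketch, assuming the bias on one axis is exactly c·u² (zero at zero throttle, as the proportionality implies) with u the normalized throttle command in [0, 1]; the least-squares coefficient is then c = Σh·u² / Σu⁴. The names and the sample data are hypothetical, and [4] should be consulted for the actual model.

```python
def fit_throttle_coefficient(throttle, bias):
    """Least-squares c for bias = c * throttle**2 on one sensor axis.

    throttle : normalized throttle commands in [0, 1]
    bias     : measured bias on that axis (e.g. h3 for the z axis), Gauss
    """
    num = sum(b * u ** 2 for u, b in zip(throttle, bias))
    den = sum(u ** 4 for u in throttle)
    return num / den

def predicted_bias(c, throttle):
    """Bias predicted by the quadratic model, to subtract from the reading."""
    return c * throttle ** 2

if __name__ == "__main__":
    # Hypothetical z-axis data, roughly c = 0.12 G plus small measurement noise.
    u = [0.0, 0.25, 0.5, 0.75, 1.0]
    h3 = [0.001, 0.0085, 0.0295, 0.0680, 0.1195]
    c = fit_throttle_coefficient(u, h3)
    print(f"c = {c:.4f} G, predicted bias at 60% throttle: {predicted_bias(c, 0.6):.4f} G")
```

Once c is known, the correction needs only the throttle command, which is the point of avoiding a current sensor.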

Conclusion

In conclusion, this article offers four insights on magnetometer calibration. First, we show that artificial errors impose special considerations on magnetometer calibration. Second, we caution the reader about autocalibration methods, such as the ellipsoid-fitting method. Third, we propose a calibration procedure that combines the DPI method and the swinging method. Finally, we propose a quadratic model for throttle-induced magnetometer biases.

magnetometer_calibration_procedure.pdf

References

[1] J. L. Crassidis, K.-L. Lai, and R. R. Harman, “Real-time attitude-independent three-axis magnetometer calibration,” J. Guid., Control Dyn., vol. 28, no. 1, pp. 115–120, 2005.

[2] D. Gebre-Egziabher, “Magnetometer autocalibration leveraging measurement locus constraints,” J. Aircr., vol. 44, no. 4, pp. 1361–1368, Jul. 2007.

[3] X. Li and Z. Li, “A new calibration method for tri-axial field sensors in strap-down navigation systems,” Meas. Sci. Technol., vol. 23, no. 10, pp. 105105-1–105105-6, 2012.

[4] M. Silic and K. Mohseni, “Correcting current-induced magnetometer errors on UAVs: An online model-based approach,” IEEE Sens. J., vol. 20, no. 2, pp. 1067–1076, 2020.


Hey guys,

I am looking for people interested in joining the team of a drone startup recently approved in a national competition in Brazil.

We are looking for people with knowledge of drone hardware and software development, or with knowledge of image processing.

We are in the prototyping phase of our products and services. Knowledge of Portuguese is also necessary, as the entire mentoring process will be carried out in that language.

If you are interested, contact me here or by email at mesquita.geison@gmail.com by 04/04/21.

Thanks!!!