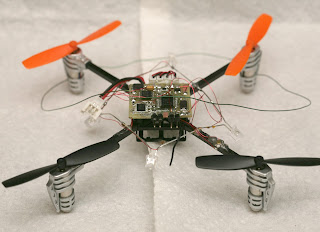

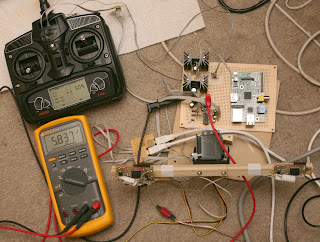

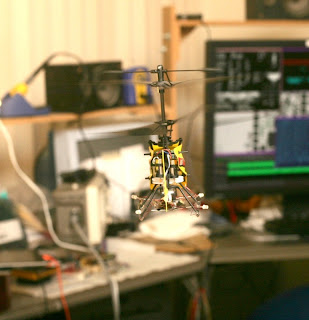

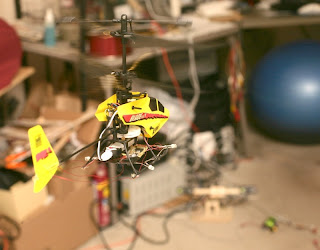

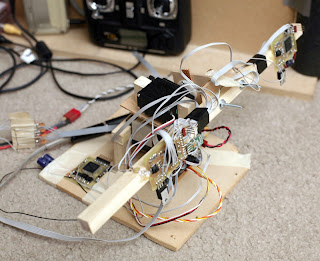

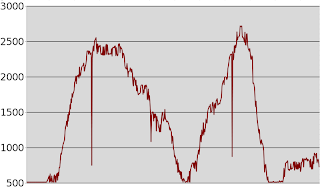

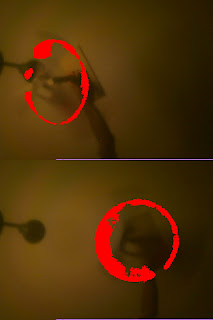

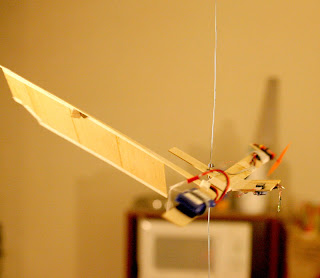

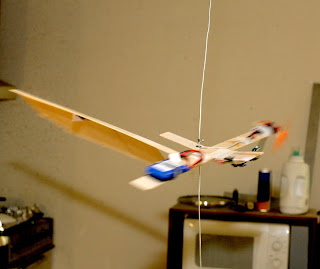

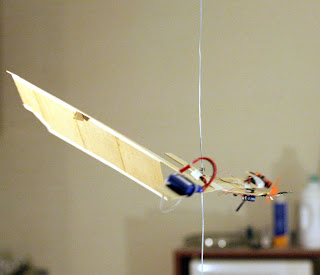

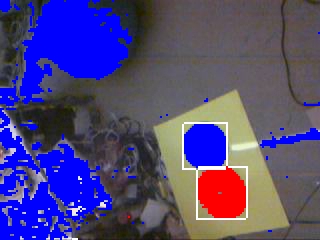

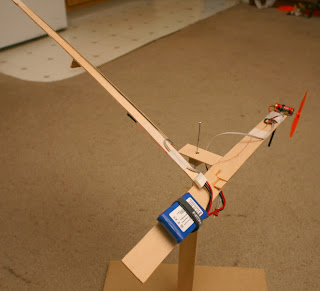

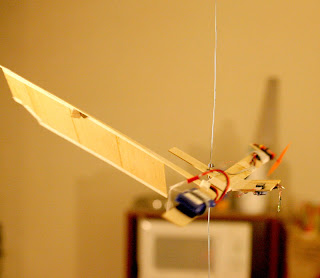

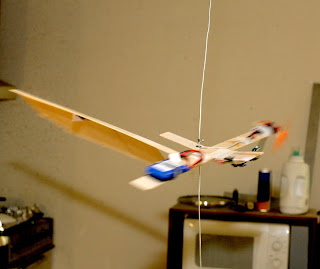

So the 1st flight with some vision hits happened.

Blue becomes visible 1st.

A frame is missed, for no apparent reason.

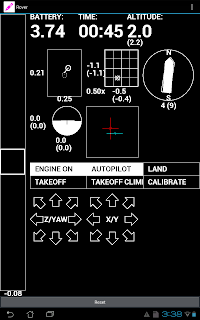

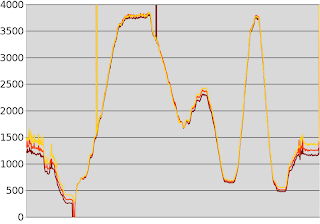

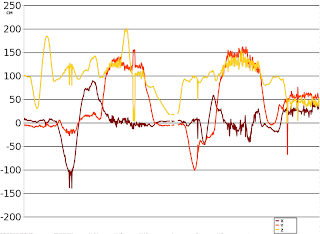

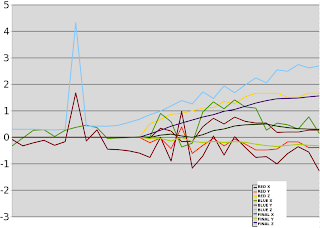

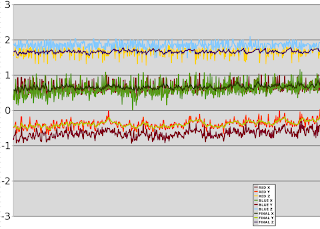

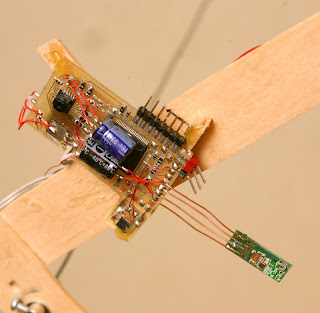

The few position data points seemed to be where they should be, but position sensing wasn't fast enough & she flew right into the ceiling. Not until the last 4 data points did the autopilot detect a finished takeoff climb.

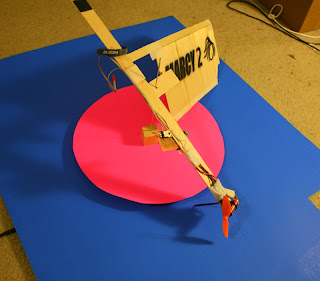

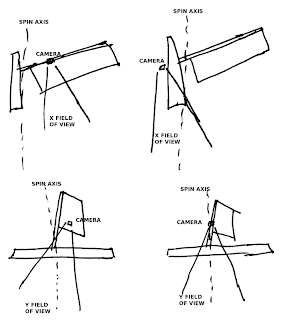

The general idea is the camera needs a complete view of the axis of rotation, so it can have continuous coverage of the red blob during takeoff. If it can't do that, it needs to get position from a partially obscured red blob, so it can have more data points. But if it points straight down, it can't detect tilt during takeoff.
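Getting position from a partially obscured red blob can be sketched as fitting a circle to the blob's visible edge pixels, since an arc still pins down the center. This is a minimal sketch, assuming the blob outline is roughly circular in the image & that edge pixel coordinates are already extracted. It uses the Kasa algebraic least-squares circle fit, a standard technique swapped in here rather than anything known to run on Marcy, & the names `fit_circle` & `_solve3` are hypothetical.

```python
import math


def _solve3(a, b):
    """Solve the 3x3 system a @ x = b by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def fit_circle(points):
    """Kasa fit: least squares on x^2 + y^2 + D*x + E*y + F = 0.

    Works on a partial arc, so a blob cut off by the frame edge or by the
    aircraft still yields a center estimate. Returns (cx, cy, radius) in pixels.
    """
    # Shift to the mean of the points to keep the normal equations well conditioned.
    mx = sum(p[0] for p in points) / len(points)
    my = sum(p[1] for p in points) / len(points)
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for px, py in points:
        x, y = px - mx, py - my
        row = (x, y, 1.0)
        rhs = -(x * x + y * y)
        for i in range(3):
            atb[i] += row[i] * rhs
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    d, e, f = _solve3(ata, atb)
    cx, cy = -d / 2, -e / 2
    radius = math.sqrt(cx * cx + cy * cy - f)
    return cx + mx, cy + my, radius


# Only the lower arc (200..340 degrees) of a blob centered at (120, 80) is visible.
arc = [(120 + 15 * math.cos(math.radians(a)), 80 + 15 * math.sin(math.radians(a)))
       for a in range(200, 341, 10)]
print(fit_circle(arc))  # approximately (120.0, 80.0, 15.0)
```

The algebraic fit is chosen because it needs no iteration, but it is biased toward smaller circles when the visible arc is short & the edge pixels are noisy.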

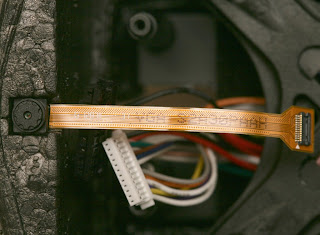

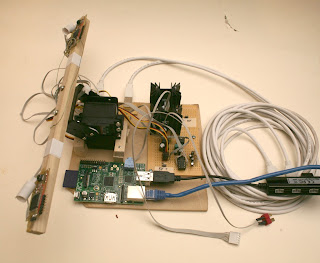

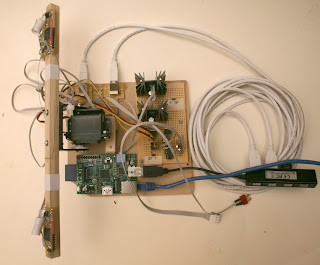

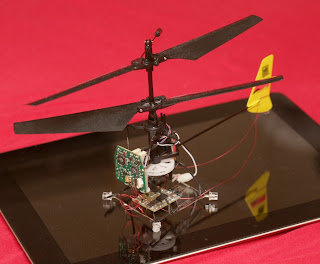

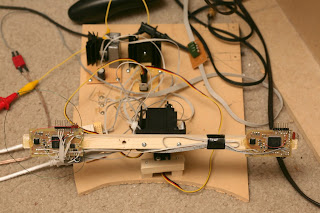

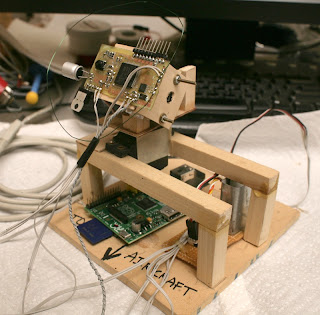

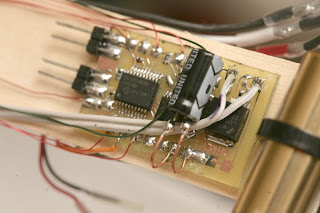

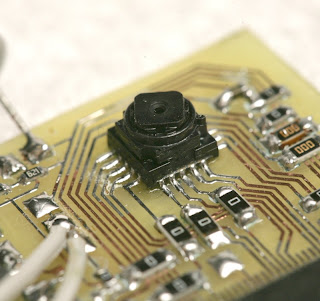

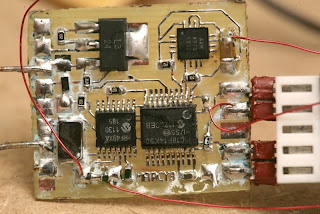

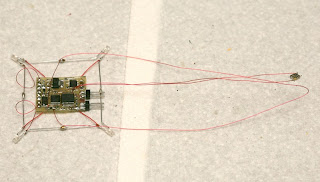

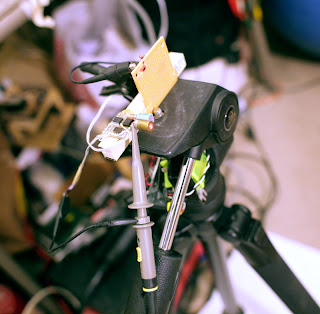

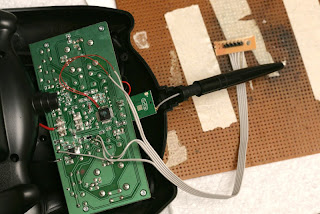

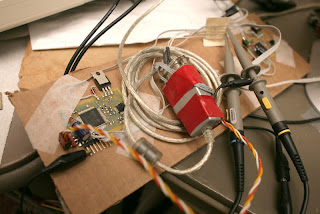

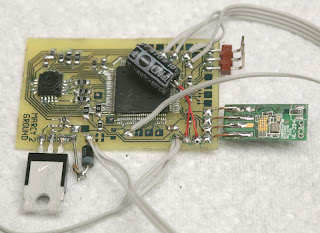

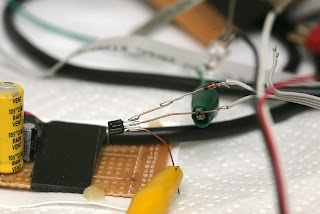

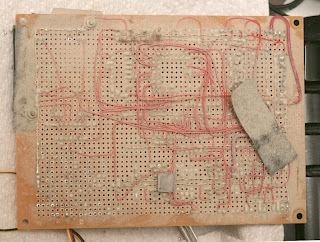

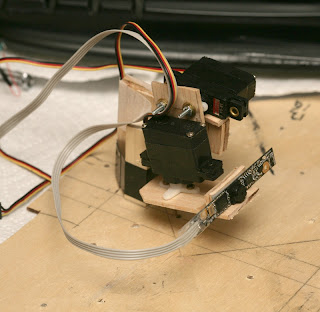

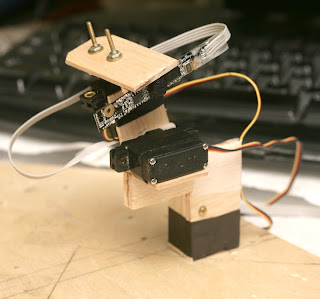

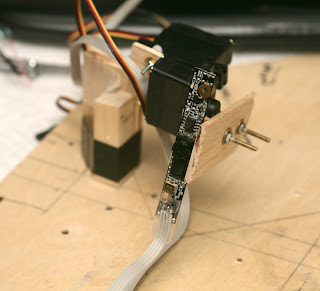

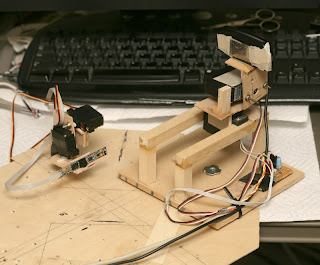

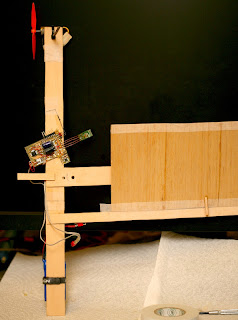

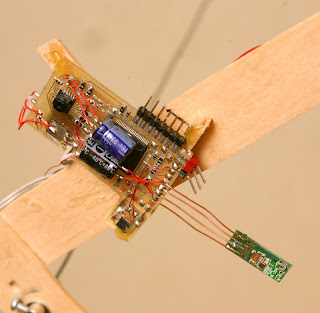

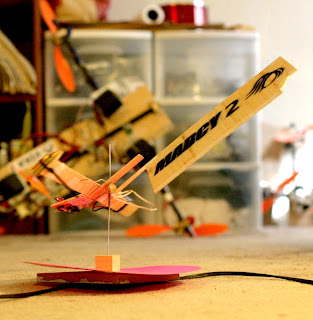

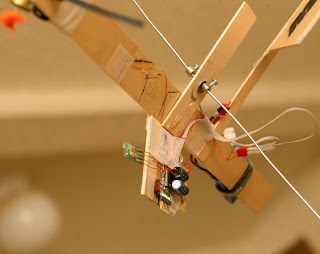

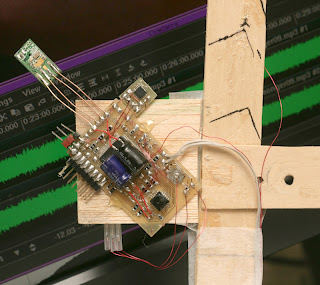

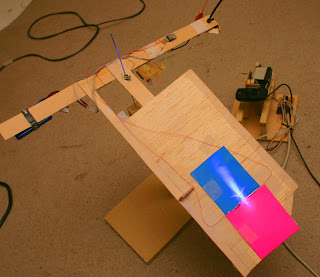

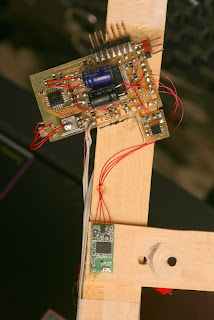

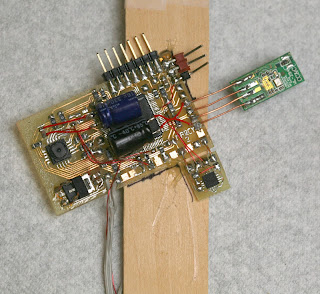

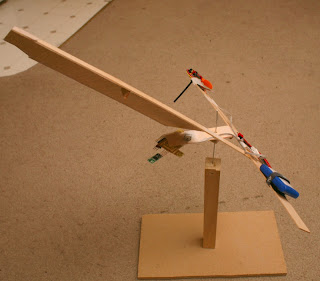

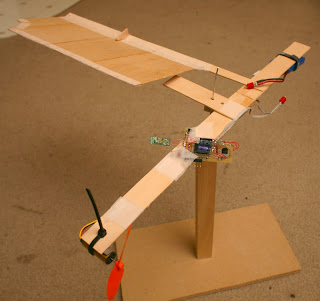

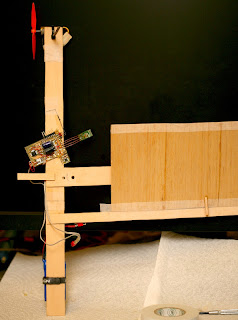

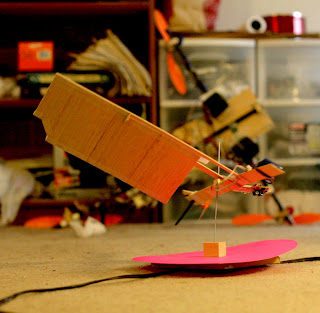

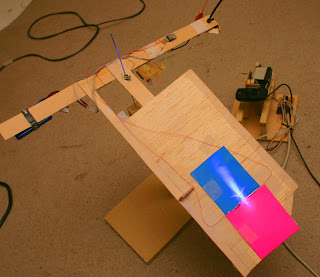

Then came more attempts to get the camera to point down more & withstand crashes. The wifi dongle seems well protected. It needs to be as far from the wood as possible to get any reception.

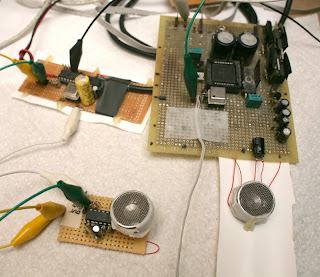

The idea of sonar for altitude & a horizontal camera for position continues to haunt. Exactly how to derive position from the horizontal camera is still unknown. Feature detection is impossible on it.

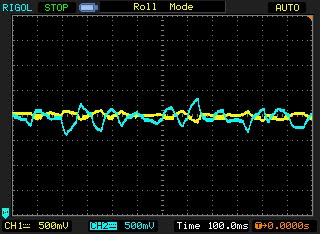

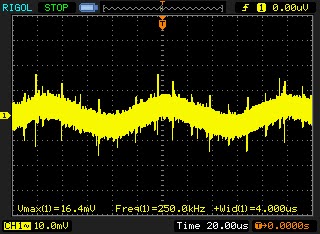

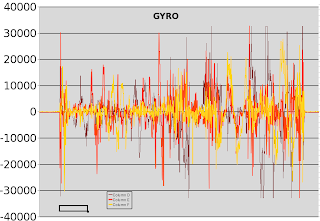

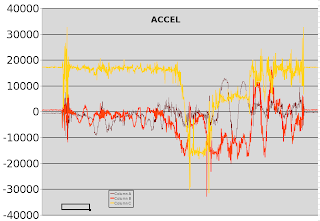

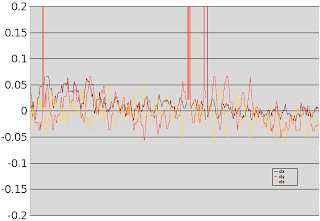

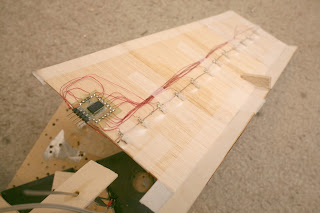

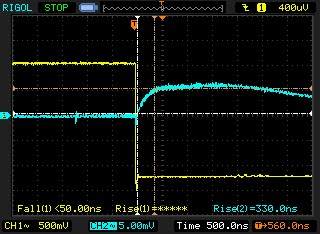

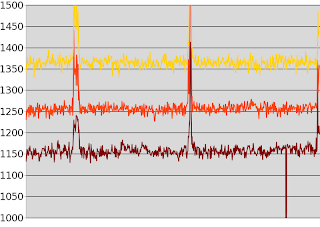

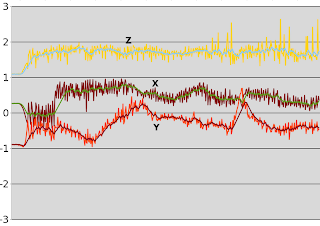

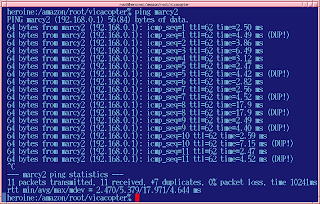

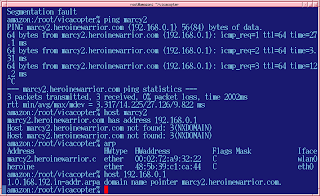

Ground based vision gave Marcy 1 pretty much 10Hz updates. She spun around at 4Hz, but the incomplete revolutions still seemed to have extra data.

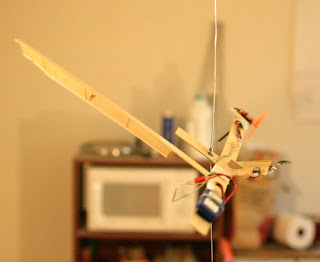

A few more crashes have painted a picture where 1 update per revolution isn't enough, especially with many updates glitched out & the velocity data therefore based on changing time bases. Marcy 1 needed many high quality updates to work. A fisheye lens pointed straight down seems the only way.
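Velocity over changing time bases can at least be made consistent by dividing by the measured time between fixes instead of an assumed update period. A minimal sketch, assuming each position fix carries its own timestamp; `velocities` & the `max_gap` cutoff of 0.6 s are hypothetical, not values from Marcy's autopilot.

```python
def velocities(fixes, max_gap=0.6):
    """Finite-difference velocity from timestamped fixes (t, x, y).

    Divides by the measured time between consecutive fixes instead of an
    assumed update period, so a glitched-out frame widens dt rather than
    inflating the speed. Pairs further apart than max_gap seconds are
    skipped, since a velocity over a long gap says little about now.
    """
    out = []
    for (t0, x0, y0), (t1, x1, y1) in zip(fixes, fixes[1:]):
        dt = t1 - t0
        if dt <= 0 or dt > max_gap:
            continue  # out-of-order, duplicate, or stale pair
        out.append((t1, (x1 - x0) / dt, (y1 - y0) / dt))
    return out


# Fixes nominally every 0.25 s (4 Hz); the one at t = 0.5 s glitched out.
fixes = [(0.0, 0.0, 0.0), (0.25, 0.25, 0.0), (0.75, 0.75, 0.0), (2.0, 2.0, 0.0)]
print(velocities(fixes))  # [(0.25, 1.0, 0.0), (0.75, 1.0, 0.0)]
```

Even done correctly, this only removes the error from the missing frame; it can't add back the information that 1 update per revolution never captured.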

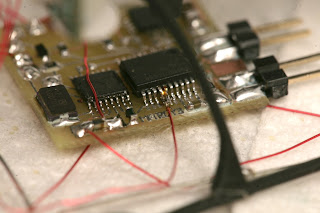

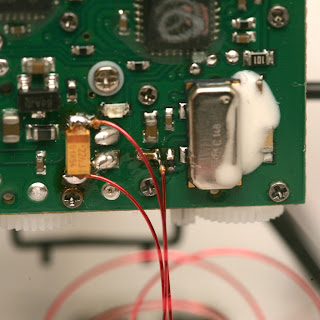

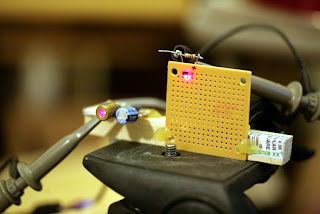

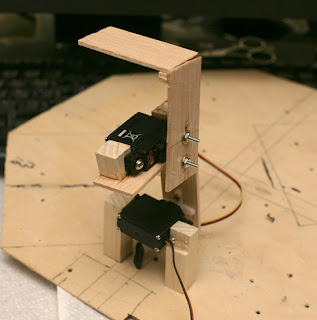

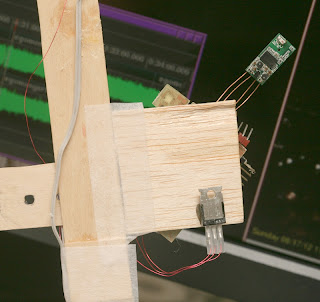

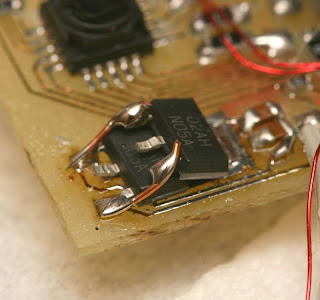

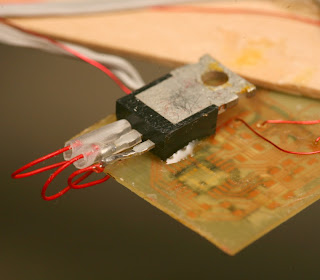

The downward facing camera is slowly rebuilt. A fisheye lens would solve everything. Fisheye lenses can physically be produced, but aren't made in enough quantities to be affordable. So because of a manufacturing technicality, more exotic measures must be tried.

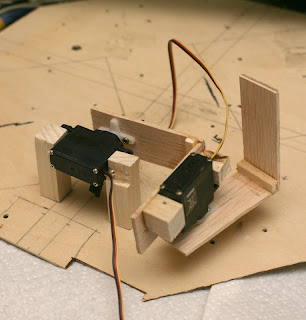

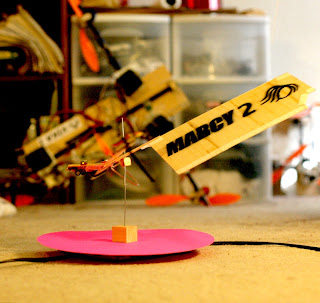

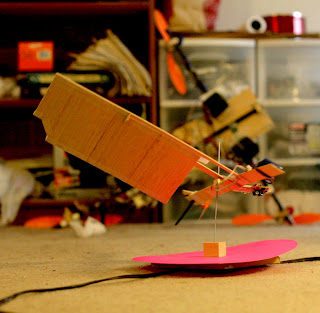

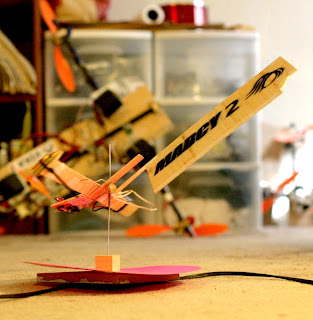

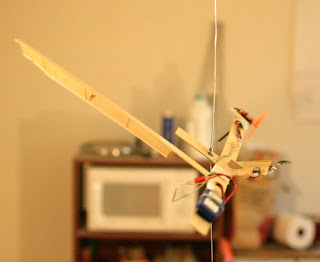

Downward facing attempts continue. This 1 had more in view, but the camera has a small field of view.

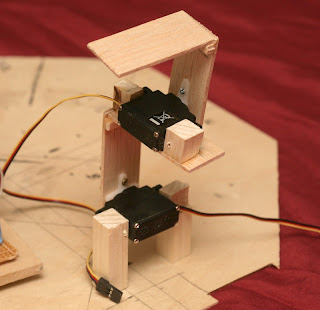

This is quite a heavy attachment, but any payload on it is going to be relatively level for any coning angle. The options are stuffing it to give it the last angle change, moving mass below it to force it up, or making it rigid. All this with a field of view which is too narrow.

Then there's going back to ground based vision, probably feasible with the latest blob detection, but not for any product. Requiring a target on the floor isn't sellable either, but it was a step towards an all in one system.

A horizontal firing camera of higher resolution & downward firing sonar for altitude might do it, with this horizontal platform. The camera would have to detect optical flow of vertical objects.
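Detecting optical flow of vertical objects with a horizontal camera reduces, in the simplest case, to finding how far a scanline shifted between frames, since vertical edges show up as steps along each row. A minimal sketch using sum-of-absolute-differences block matching, assuming grayscale scanlines of equal length & ignoring the aircraft's rotation; `scanline_shift` is a hypothetical name.

```python
def scanline_shift(prev, curr, max_shift):
    """Horizontal shift (pixels) of curr relative to prev, by SAD block matching.

    Tries every shift s in [-max_shift, max_shift] and keeps the one that
    minimizes the mean absolute difference between curr[i + s] and prev[i]
    over the overlapping pixels. Positive s means the scene moved right.
    """
    n = len(prev)
    if not 0 <= max_shift < n:
        raise ValueError("max_shift must be smaller than the scanline length")
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        lo, hi = max(0, -s), min(n, n - s)
        cost = sum(abs(curr[i + s] - prev[i]) for i in range(lo, hi)) / (hi - lo)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


# A bright vertical edge (e.g. a door frame) moves 3 pixels right between frames.
prev = [0] * 10 + [100] * 5 + [0] * 10
curr = [0] * 13 + [100] * 5 + [0] * 7
print(scanline_shift(prev, curr, max_shift=5))  # 3
```

Averaging the cost over the overlap keeps large shifts from winning just because they compare fewer pixels. Turning the pixel shift into a speed would still need the distance to the vertical objects, which a downward sonar doesn't provide.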

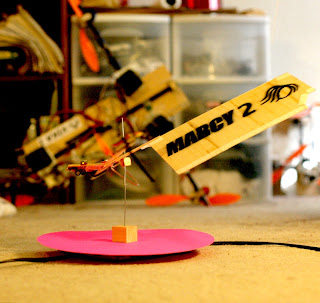

That was as close as anything can be expected to get. At 2 meters in altitude, there are only 2 target widths of horizontal range. It's either this & 25Hz position data or diagonally facing & 4Hz position data.
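The horizontal range available from a straight-down camera follows from its field of view & altitude: the floor it sees is 2·h·tan(FOV/2) wide. A minimal sketch, assuming a pinhole camera over a flat floor; the 90° lens & 0.5 m target are hypothetical numbers, not Marcy's.

```python
import math


def footprint_width(altitude_m, fov_deg):
    """Width of floor seen by a straight-down pinhole camera over a flat floor."""
    return 2 * altitude_m * math.tan(math.radians(fov_deg) / 2)


def usable_range(altitude_m, fov_deg, target_m):
    """How far the target's center can travel while the whole target stays in view."""
    return max(0.0, footprint_width(altitude_m, fov_deg) - target_m)


# Hypothetical 90 degree lens at 2 m: 4 m of floor, 3.5 m of travel for a 0.5 m target.
print(round(footprint_width(2.0, 90.0), 3), round(usable_range(2.0, 90.0, 0.5), 3))
```

The footprint scales linearly with altitude, so the only ways to get more range at a fixed height are a wider lens or tilting the camera, which is the diagonal, 4Hz option.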

Any vision system that tracks a single object is going to need a gimballed camera. That's why, even with modern object detecting algorithms, aircraft still only use optical flow.

So aircraft video over wifi was heroic, but only showed that it's impractical for navigation. Pointing sideways, the detail isn't enough to detect motion. Pointing down, either the field of view is too small or the update rate is too slow. Even though the rotation rate always created an update rate of 4Hz, there was more information in the incomplete rotations.

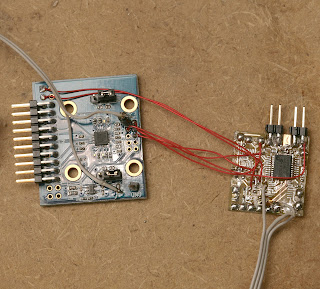

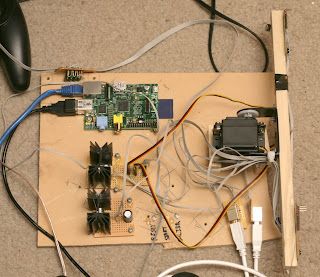

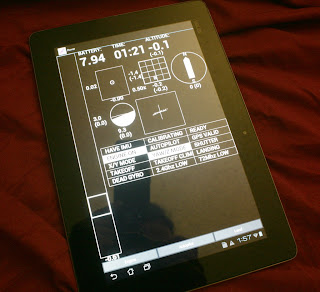

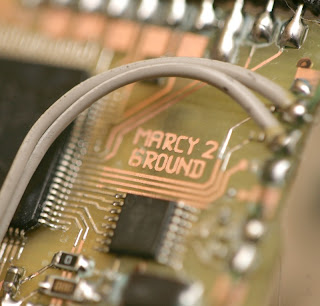

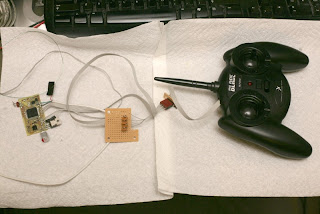

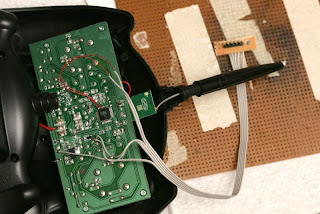

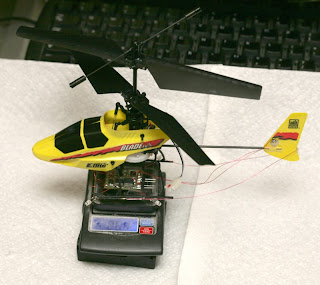

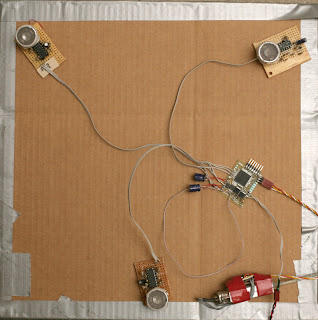

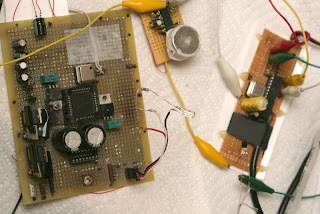

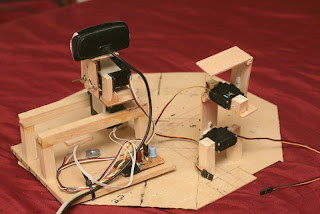

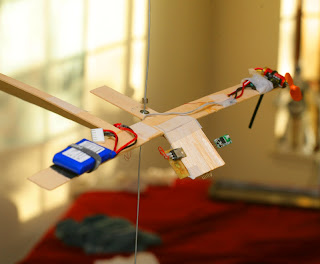

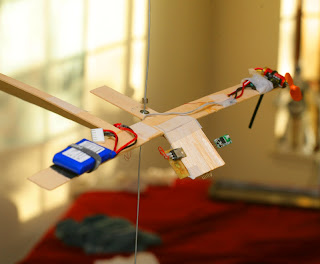

Marcy 2 needs a takeoff platform, so all roads lead to a camera on the takeoff platform pointing straight up. It's connected by wired USB. A 2nd camera on the aircraft points sideways & sends video by wifi. As a simple 4fps video downlink, it would be better done over Bluetooth.

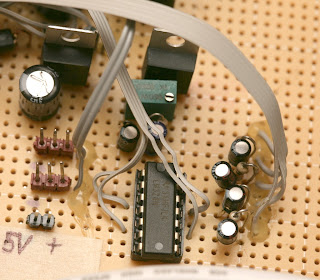

It's a lot of wires to connect. It's not an all in one system, but having both cameras on the aircraft still required a ground target which was bulkier than a ground camera. Altitude information pointing straight up is not as accurate as pointing sideways.
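One plausible reason altitude is less accurate pointing straight up: the upward camera has to infer altitude from the target's apparent size, while a sideways view reads it from the target's vertical position in the image. A minimal pinhole-model sketch of the per-pixel resolution of each, assuming the focal length in pixels, the target size, & (for the sideways case) the horizontal distance are known; all names & numbers are hypothetical.

```python
def range_from_size(focal_px, target_m, size_px):
    """Pinhole range estimate from apparent size: Z = f * W / w."""
    return focal_px * target_m / size_px


def step_from_size(focal_px, target_m, range_m):
    """Range change per pixel of apparent size: |dZ/dw| = Z^2 / (f * W)."""
    return range_m ** 2 / (focal_px * target_m)


def step_from_position(focal_px, distance_m):
    """Height change per pixel of image position, target at known distance D: D / f."""
    return distance_m / focal_px


# Hypothetical numbers: 500 px focal length, 0.2 m target, 2 m away.
f, w_target, z = 500.0, 0.2, 2.0
print(range_from_size(f, w_target, 50.0))  # target spans 50 px -> about 2 m
print(step_from_size(f, w_target, z))      # about 0.04 m per pixel
print(step_from_position(f, z))            # about 0.004 m per pixel
```

The ratio of the 2 steps is Z / W, so with these numbers the size-based estimate is about 10× coarser, & it gets worse the higher she climbs.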

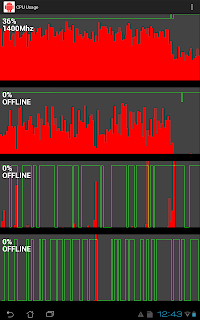


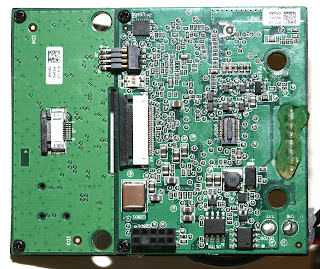

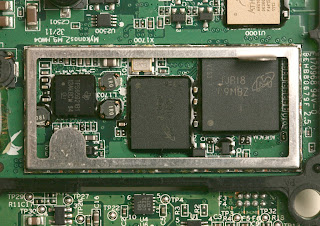

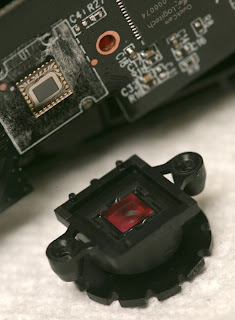

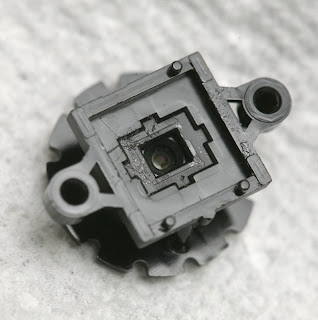

There's exactly 1 teardown of a GoPro Hero 3, revealing the wide angle lens is a box screwed down over the sensor, like a webcam.

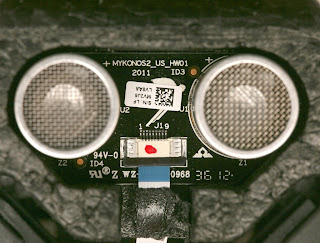

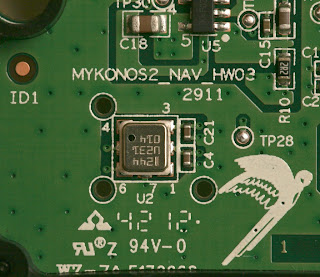

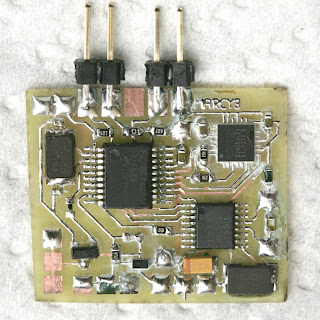

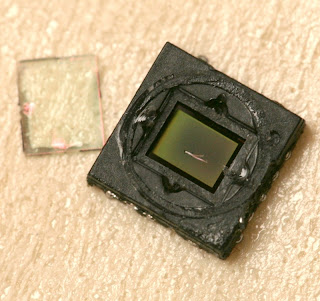

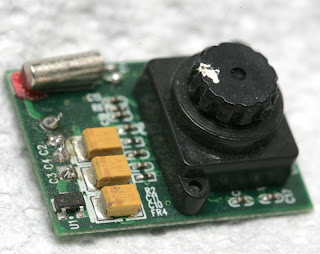

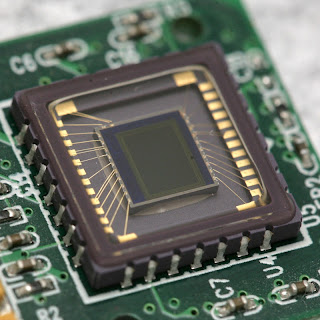

Another teardown of this thing revealed a compound lens, like the TCM8230MD. No part number.

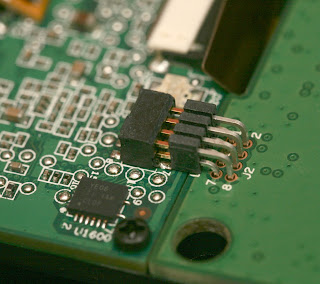

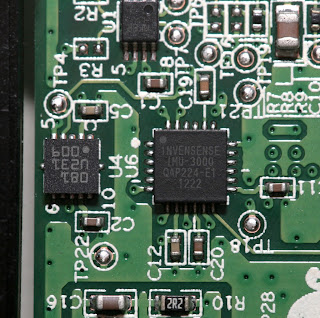

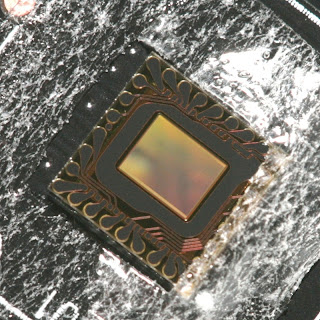

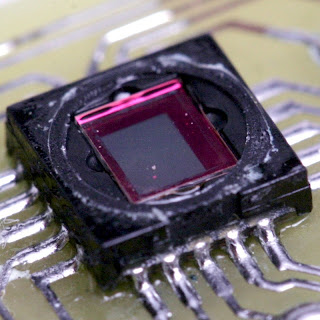

Another look at the OmniVision sensor.

So the keyword that gets lenses to show up is "board camera lens". Wide angles don't become possible until the sensor is 1/3".

http://www.vitekcctv.com is the only supplier. The keyword used to look up cheap, zoomless, fixed-focus cams like the GoPro is "board camera."
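Why a bigger sensor makes wide angles possible with the same kind of board lens follows from the rectilinear field-of-view formula, FOV = 2·atan(w / 2f). A minimal sketch; the widths are the nominal active-area widths usually quoted for these formats, the 2.4 mm focal length is hypothetical, & the formula ignores fisheye distortion.

```python
import math

# Nominal active-area widths (mm) usually quoted for these optical formats.
# The inch names are a legacy of vidicon tubes, not the real diagonal.
SENSOR_WIDTH_MM = {'1/4"': 3.6, '1/3"': 4.8}


def horizontal_fov_deg(sensor_width_mm, focal_mm):
    """Horizontal field of view of a rectilinear lens: 2 * atan(w / (2 * f))."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


# Same hypothetical 2.4 mm board lens on both formats.
for name, width in SENSOR_WIDTH_MM.items():
    print(name, round(horizontal_fov_deg(width, 2.4), 1))
```

With that lens the 1/4" sensor sees about 73.7° & the 1/3" sensor 90.0°: for a given focal length, the bigger sensor simply sees more of the image circle.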

A $30 wide angle adapter gets it wider.

Finally, from goo tube wide angle lens lore, we have the peephole.

It has 2 lens elements. It splits in 2, revealing a useless image. The front element can be ground off, revealing no image.

Forget about extracting the 2 lens elements & making a custom tube. Even when you're done grinding, they're glued in there.

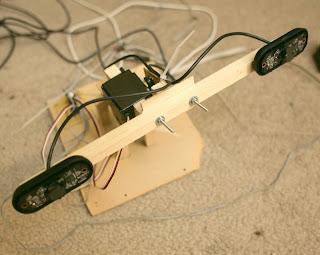

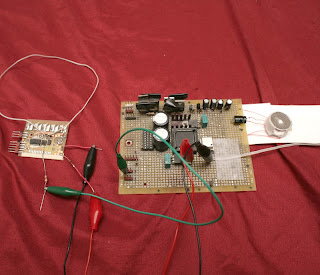

Position sensing from the launch pad is the new ideal. Attitude leveling for takeoff would be done by a side-firing onboard camera. The problem of 2 onboard cameras goes away.

Then the problem of position sensing in daylight returns. Should a cable connect to the launch pad? Should the launch pad & aircraft both be on wifi? Should the aircraft use proprietary radio to talk to the launch pad & the launch pad use a cable or wifi? Should the launch pad use a stock webcam with a USB multiplexer or a custom cam? What kind of servos should it use?

There's docking a phone to the launch pad to control it & having another remote control, like the Swivl. There's doing all the navigation processing on the launch pad, having a proprietary radio from the launch pad to the aircraft & wifi from the aircraft to the phone.

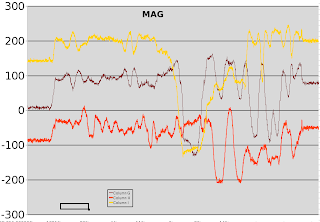

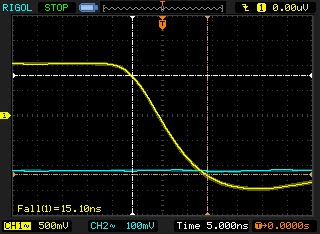

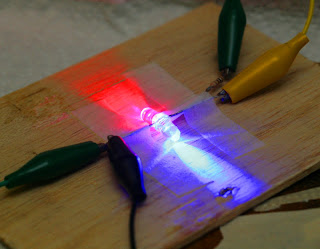

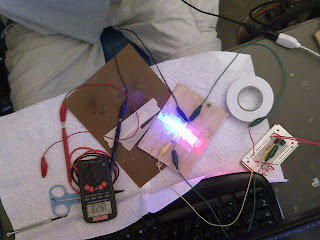

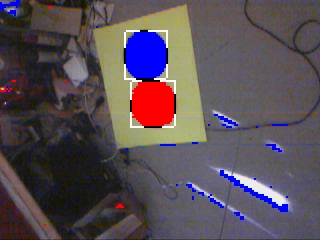

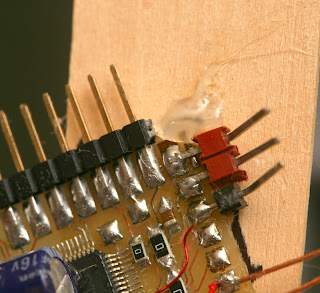

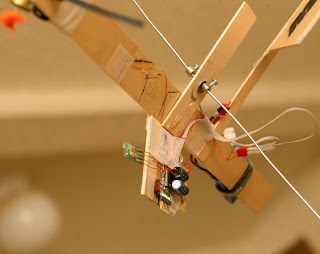

A few hours reinstalling LED markers returned the reality of colored LEDs saturating the sensor to white. Then came the realization that Vicon uses reflective markers to keep the sensor from saturating white. To illuminate the markers, it has a very expensive & labor intensive ring of IR LEDs.

An attempt to light the markers with camera LEDs failed. Obviously the Vicon cameras can't sense anything too close. A dreaded infrared camera with a ring of LEDs draws nearer.

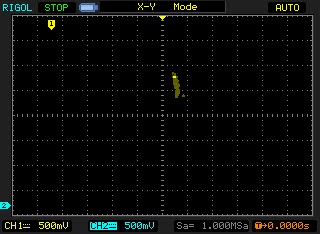

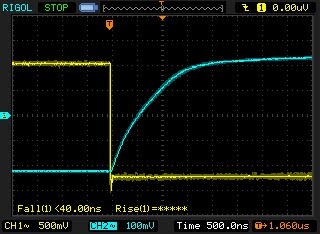

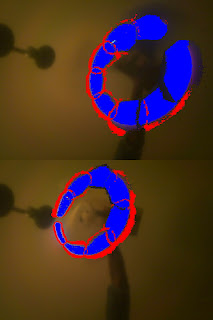

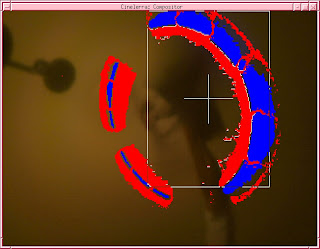

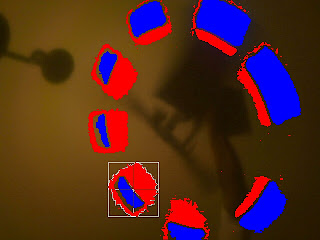

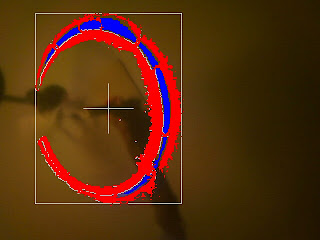

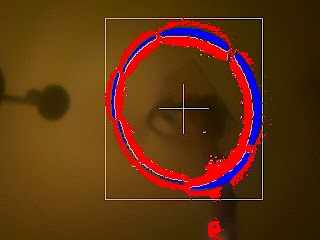

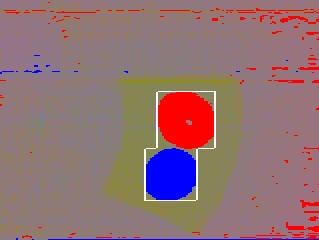

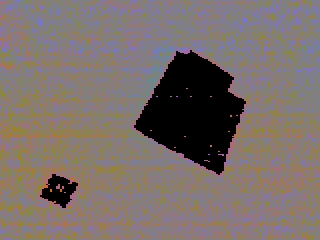

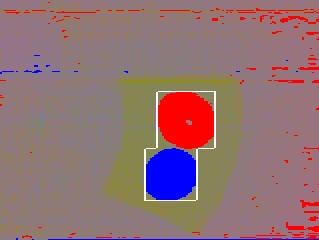

The LED rearranging yielded this.

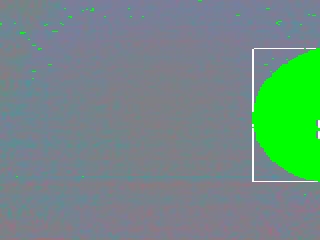

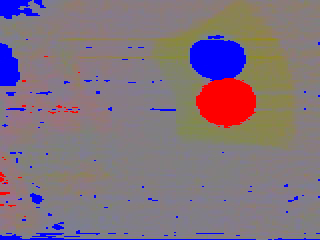

Which the computer sees as

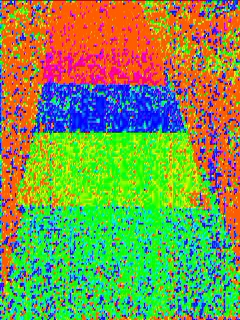

or

depending on camera exposure. It's hard to see a future in the webcam business.

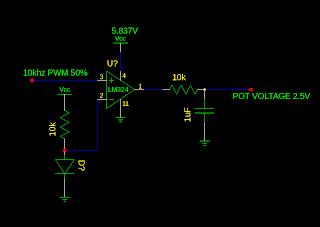

With minimum gain on the webcam & some chroma keying, an algorithm starts to emerge, where the intersections of red, white, & blue are plotted & averaged to give a very accurate location of the marker.
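The red, white & blue intersection idea can be sketched as: chroma key each pixel into 1 of the 3 colors, keep the pixels whose 3×3 neighborhood contains all 3, & average their coordinates. A minimal sketch, assuming an RGB frame stored as nested lists & a marker whose 3 color regions meet at a point; the thresholds in the hypothetical `classify` function would need tuning for the webcam at minimum gain.

```python
def classify(rgb):
    """Hypothetical chroma-key thresholds: label a pixel 'R', 'W', 'B' or None."""
    r, g, b = rgb
    if min(r, g, b) >= 200:
        return "W"
    if r >= 80 and r - max(g, b) >= 40:
        return "R"
    if b >= 80 and b - max(r, g) >= 40:
        return "B"
    return None


def marker_center(image):
    """Average (x, y) of pixels whose 3x3 neighborhood holds red, white & blue."""
    labels = [[classify(px) for px in row] for row in image]
    h, w = len(labels), len(labels[0])
    xs, ys = [], []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            seen = {labels[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)}
            if {"R", "W", "B"} <= seen:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # no 3-color intersection in view
    return sum(xs) / len(xs), sum(ys) / len(ys)


# Synthetic 8x8 frame: red top-left, blue top-right, white bottom half.
RED, BLUE, WHITE = (220, 30, 30), (30, 30, 220), (255, 255, 255)
frame = [[(RED if x < 4 else BLUE) if y < 4 else WHITE for x in range(8)] for y in range(8)]
print(marker_center(frame))  # (3.5, 3.5)
```

Averaging the junction pixels gives a sub-pixel location, which is where the accuracy comes from: a single saturated blob only gives its centroid, while the color boundaries stay sharp at low gain.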

Let's get clear that I did dream about trying to get into Aerovironment. It turned into the hippy colony from Martha Marcy May Marlene. The creepy skinny guy was the boss. He seemed normal, then turned into a crazy preacher of some kind, conjuring up super powers. A crazy dog came out from a wall. Then there were crazy zombies which mutated into other forms of life. I didn't care & still wanted the job, hoping the craziness didn't happen during business hours.

Anyways, the point is 1 of the main drivers of open source work is the saying "If I can't have a job, no-one can." You may not realize it, but the simplest answer is always right.

Do you think a college student in Finland, a country like the US, with total unemployment in 1990, wasn't at least partly driven by vengeance because he once sent a resume to work on Minix & got rejected? I say vengeance over denied opportunities has always been 99% of the motivation behind free software, even more than fame or learning.

It has always been the case that the most valuable work is not what anyone is hiring for. In 2000, the most valuable work was in video software, yet all the jobs were in e-commerce software. They specifically wanted to see e-commerce on the resume or you were out.

A lot of college students then wrote free video editors. Our motto was if we couldn't get jobs doing it, we'd put everyone out of business who did. Write the software we did & out of business the corporations went.

It feels the same way today. All the money seems to be in physical computing that combines software & hardware. It's obvious that you can't make money selling pure software, yet all the companies are still in this 20-year-old model of dividing jobs into specifically software or specifically hardware. If you're not specifically doing 1 thing, you're out.

So the mission has been if we can't make money because we don't fit the cookie cutter model of pure software engineers with exactly the right 8 year degree in reverse polish turkey notation, produce all the stuff for free until no-one can make money.

The job market today isn't just bad, the people who are getting jobs are clueless. It's 1 of those times when it's so bad, people with jobs are more afraid of getting pushed out than increasing production, so they're only hiring lower levels of talent. The incompetent workforce is begetting greater incompetence.

If you've got 100 million people whose cost of producing anything is $0 & who are being denied opportunities not because of talent but simply because of fear, 0 is going to be the fair market value for anything produced. No business will be left standing.