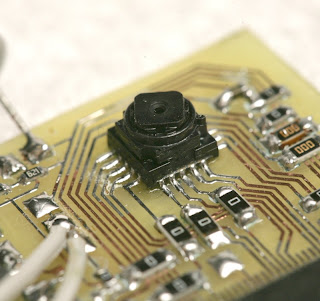

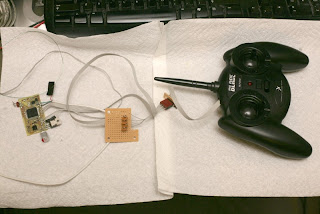

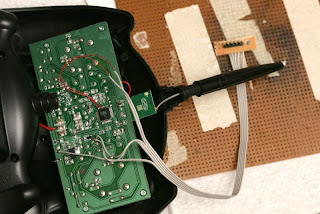

When the ublox 6 series modules were released, I noted that the 6T and 6P modules provided access to the raw carrier phase measurements, and I started thinking about how to use this capability. My connections through my university research appointment weren't strong enough to get ublox to sample a dev kit to me (they generally don't sample them at all). Jordi and Chris from 3DRobotics.com were kind enough to use their customer relationship with ublox to get a sample 6T module and provided it to me mounted on the standard DIYDrones ublox LEA-6 module. So mine looks just like the one above, except it has a 6T module instead of a 6H.

My prime curiosity was how good the carrier phase measurements from this module were. The datasheet indicates that carrier phase measurements are available, but gives no specs about their accuracy. So I had no idea if they would be of value. Well, with a module in hand, some measurements made, and post-processing completed, I can tell you that there is tremendous potential here, but a lot of work before this is something ready for everyday use.

OK, what is carrier phase and why do I care?

A carrier phase measurement is a measurement of the phase of the arriving carrier wave signal from the gps satellite. Think of it this way - as the radio signal from the GPS satellite travels from the satellite to your receiver it completes some fractional number of cycles of oscillation. That means that the distance from the satellite receiver to you is some fractional number of wavelengths - say 100 million and 1/2 wavelengths. The carrier phase measurement gives you the fractional value of 1/2 wavelength, or 180 degrees of phase. That in itself is almost useless. Fortunately the gps module gives us something a little better than that. What it reports is the phase change (or the number of wavelengths of distance change) between the satellite and the receiver from one measurement epoch to the next. So what we really have is a measurement of the change in distance between the satellite and the receiver over a known period of time. And so theoretically, given that we know something about the location and velocity of all the satellites we should be able to use this information to calculate the (3D) velocity of the receiver. Just one more thought on this topic - we could do the same thing with pseudo-range or Doppler measurements, which the receiver also makes, but carrier phase measurements have the potential to be orders of magnitude more accurate.

Nice, but what about position?

Well, the technique that I will describe below has some applicability to making very accurate position measurements, but lets just say it is really hard and is the kind of thing that only happens (still a relatively small number of) military gps with very large price tags. I'll say some more about this later. For now, we will just be looking at making velocity measurements.

OK, but what will we do with a great velocity measurement?

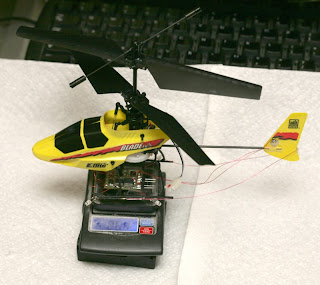

Well, imagine how much better position hold could work in a copter if the autopilot knew its 3D velocity vector to an accuracy of 5 millimeters per second. Yeah, time for a double-take. I said millimeters per second. My initial test yielded a velocity resolution of 1 millimeter per second and an accuracy of better than 5 millimeters per second at a 5 Hz update rate.

There are lots of other things we can do with a better velocity estimate, like better acceleration modelling in the AHRS system, etc.

Let's see the data.

OK. I have run two tests so far. The first turned out fantastic. The second had some difficulties, but still shows immense promise. Below is a plot of the velocity estimate error during the first test. This represents a bit over 5 minutes of data with samples at 5 Hz.

OK - let me describe a few things in this plot. First, note that there are 5 `spikes' where the error is above 0.1 m/s. These are 5 samples where the receiver did not have carrier phase lock on at least 4 satellites, and you need 4 satellites to get a valid solution. For individual samples like this you can generally re-use the last velocity estimate. There are also around 10 samples where the error is on the order of 4 or 5 cm/s. These are samples where the receiver only had 4 satellites with carrier phase lock. The accuracy gets better when the receiver has more satellites with carrier phase lock.

You may be wondering what my "truth data" was. Well, it was simply that the module was just sitting on the ground in my back yard, so the truth was 0 velocity in the Earth centered Earth fixed (ECEF, WGS84 for example) frame. This is not at all cheating as the velocity relative to the ground is irrelevant - we are still making a velocity estimate based on measurements of the velocity between the satellites and the receiver, and the satellites are all moving at roughly 14,000 km/hour in various directions. The relative velocities between the satellites and the receiver are on the order of thousands of kilometers per hour, and from this we are able to deduce motion on the millimeter per second scale.

Unfortunately maintaining carrier phase lock is not as easy as tracking a satellite. On the second test in similar conditions I only got carrier phase lock on 4+ satellites about 75% of the time, leaving many periods of several seconds with no valid measurements available. On the second test the reduced accuracy of the velocity measurement was more apparent with accuracy on the order of 0.1 m/s with 4 satellites and 0.02 m/s with 5 satellites. Even so, 0.02 m/s is a very impressive number.

How did you do it?

I did all this with data recorded using ublox UCenter and post processing using MATLab. There is no reason that C code cannot be developed to do this in real time, although it probably requires too much processing power to add to an 8 bit autpilot. Fortunately 32 bit hobbyist autopilots are becoming reality. My method was really a reflection on my lack of time for this sort of project at present. So, here is what I did:

- I used the 6T module to collect raw carrier phase measurement data. Tridge supplied a Python script to convert the ublox formatted file to a human readable file. I did some further formatting on it and imported it into MATLab as a data structure.

- I used the GPS broadcast Ephemeris to compute the position of all the GPS satellites for which there were measurements at each time epoch, and translated these positions into the ECEF coordinate frame.

- I differenced the computed ranges between adjacent epochs and set up a linearized least squares estimate of the receiver velocity using unit vectors in the directions from the receiver to the various satellites as a weighting matrix. The final estimate can be translated into the ENU or NED navigation frame for use.

I thought that approach would work great, but it failed completely, and it took me a while to figure out why. The reason is that although the receiver clock bias is not needed in a velocity solution like it is in a position least squares solution, the receiver clock drift rate is not insignificant for inexpensive modules like ublox and it completely messes up the velocity estimate if it is not accounted for in the sample to sample carrier phase change. Simply put, the receiver clock is not only running at a somewhat incorrect frequency (the clock bias - which is an error in the pseudo-range measurements) but its frequency is moving around and the rate of change of the frequency shows up as a velocity error in the carrier phase measurements. So, I modified my least squares estimation to estimate both the 3D velocity vector and the drift rate of the receiver clock. This worked great.

Work left to do

Several things need to happen to move this forward. First, I need to investigate how big a problem exists with the module not being able to maintain carrier phase lock on enough satellites and what conditions make that better/worse. Second, the issues with running this in real time on a drone need to be hashed out. I don't really know how processor intensive this code will be when optimized, but likely it will need to wait for the 32 bit processors as it is fairly math intensive. Finally code needs to be developed as I did this all with MATLab and borrowed a lot of proprietary scripts from research associates…

A few more things if you are interested - Cycle slip, Integer ambiguities...

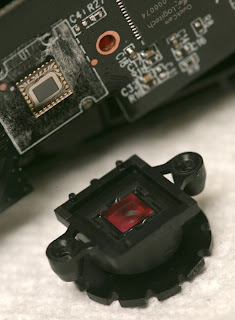

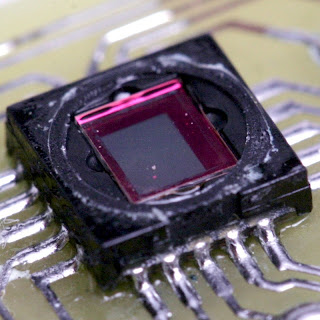

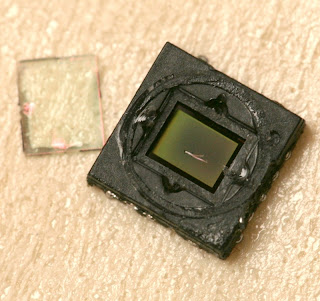

The reason that it is difficult to use carrier phase measurements in a position solution is because of the "Integer Ambiguity". The Integer Ambiguity is the unknown number of full cycles of the carrier wave between the satellite and the receiver. See the picture below.

Here we see a satellite in motion and the phase measurement at two times. The carrier phase measurement gives us the number of "Counted Cycles", but we have no idea how many cycles are in the Ambuguity. There are techniques available to resolve the ambiguity, but generally these take a very large amount of processing power, take a long time, and are difficult to implement for platforms with dynamic motion. We can maintain a sum of the Counted Cycles and do a variety of things with it. This is one method used in RTK in a lot of agricultural applications, etc. But every time we loose carrier phase lock we have to discard the sum and start over. This is termed cycle slip.

I mentioned that gps receivers have a harder time maintaining carrier phase lock than just tracking a satellite. The tracking loops in a gps receiver are fairly complicated. There are simultaneous loops tracking both the code delay and Doppler frequency for both the in-phase and quadrature channels. These loops use a power measurement from the combined I and Q channels in their discriminator to maintain lock in these loops. However the carrier phase lock generally has to rely on a single channel and has other challenges. This is too bad because it is commonplace for the receiver to be able to track between 8 and 10 satellites in an open sky. However, my 2nd test showed as poor as only 75% of the time with 4+ satellites maintaining carrier phase lock. This will be the critical factor to investigate to move forward.

The California Near Space Project is at it again. Last year, they became the first team to achieve a transatlantic crossing using a latex weather balloon, and they launched from California. Above is last year's flight path.

The California Near Space Project is at it again. Last year, they became the first team to achieve a transatlantic crossing using a latex weather balloon, and they launched from California. Above is last year's flight path.